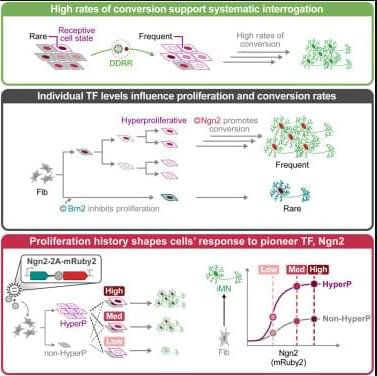

Using a systems and synthetic biology approach to study the molecular determinants of conversion, Wang et al. find that proliferation history and TF levels drive cell fate in direct conversion to motor neurons.

Researchers at the University of Adelaide have performed the first imaging of embryos using cameras designed for quantum measurements.

The University’s Center of Light for Life academics investigated how to best use ultrasensitive camera technology, including the latest generation of cameras that can count individual packets of light energy at each pixel, for life sciences.

Center director Professor Kishan Dholakia said the sensitive detection of these packets of light energy, termed photons, is vitally important for capturing biological processes in their natural state—allowing researchers to illuminate live cells with gentle doses of light.

Sulfate-reducing bacteria break down a large proportion of the organic carbon in the oxygen-free zones of Earth, and in the seabed in particular. Among these important microbes, the Desulfobacteraceae family of bacteria stands out because its members are able to break down a wide variety of compounds—including some that are poorly degradable—to their end product, carbon dioxide (CO2).

A team of researchers led by Dr. Lars Wöhlbrand and Prof. Dr. Ralf Rabus from the University of Oldenburg, Germany, has investigated the role of these microbes in detail and published the findings of their comprehensive study in the journal Science Advances.

The team reports that the bacteria are distributed across the globe and possess a complex metabolism that displays modular features. All the studied strains possess the same central metabolic architecture for harvesting energy, for example.

Melanized fungi, although dangerous to humans, are remarkable because they have adapted to radiation, which could offer clues to how humans might evolve to survive long-term radiation exposure.

There’s an organism thriving within the Chernobyl disaster zone that is not only enduring some of the harshest living conditions imaginable, but potentially helping to improve them too.

The fallout from the Chernobyl nuclear disaster in 1986 is still fascinating the scientific community nearly 40 years on, with new developments emerging all the time.

The Chernobyl Exclusion Zone in Ukraine has a radiation level of 11.28 millirem, six times the legal limit of human exposure for workers, yet one living organism has adapted to live and thrive there.

In an amazing achievement akin to adding solar panels to your body, a northeast sea slug sucks raw materials from algae to provide its lifetime supply of solar-powered energy, according to a study by Rutgers University–New Brunswick and other scientists.

“It’s a remarkable feat because it’s highly unusual for an animal to behave like a plant and survive solely on photosynthesis,” said Debashish Bhattacharya, senior author of the study and distinguished professor in the Department of Biochemistry and Microbiology at Rutgers–New Brunswick. “The broader implication is in the field of artificial photosynthesis. That is, if we can figure out how the slug maintains stolen, isolated plastids to fix carbon without the plant nucleus, then maybe we can also harness isolated plastids for eternity as green machines to create bioproducts or energy. The existing paradigm is that to make green energy, we need the plant or alga to run the photosynthetic organelle, but the slug shows us that this does not have to be the case.”

The sea slug Elysia chlorotica, a mollusk that can grow to more than two inches long, has been found in the intertidal zone between Nova Scotia, Canada, and Martha’s Vineyard, Massachusetts, as well as in Florida. Juvenile sea slugs eat the nontoxic brown alga Vaucheria litorea and become photosynthetic – or solar-powered – after stealing millions of algal plastids, which are like tiny solar panels, and storing them in their gut lining, according to the study published online in the journal Molecular Biology and Evolution.

Based on a material view and reductionism, science has achieved great success. These cognitive paradigms treat the external as an objective existence and ignore internal consciousness. However, this cognitive paradigm, which we take for granted, has also led to some dilemmas related to consciousness in biology and physics. Together, these phenomena reveal the interaction and inseparable side of matter and consciousness (or body and mind) rather than the absolute opposition. However, a material view that describes matter and consciousness in opposition cannot explain the underlying principle, which causes a gap in interpretation. For example, consciousness is believed to be the key to influencing wave function collapse (reality), but there is a lack of a scientific model to study how this happens. In this study, we reveal that the theory of scientific cognition exhibits a paradigm shift in terms of perception. This tendency implies that reconciling the relationship between matter and consciousness requires an abstract theoretical model that is not based on physical forms. We propose that the holistic cognitive paradigm offers a potential solution to reconcile the dilemmas and can be scientifically proven. In contrast to the material view, the holistic cognitive paradigm is based on the objective contradictory nature of perception rather than the external physical characteristics. This cognitive paradigm relies on perception and experience (not observation) and summarizes all existence into two abstract contradictory perceptual states (Yin-Yang). Matter and consciousness can be seen as two different states of perception, unified in perception rather than in opposition. This abstract perspective offers a distinction from the material view, which is also the key to falsification, and the occurrence of an event is inseparable from the irrational state of the observer’s conscious perception. Alternatively, from the material view, the event is random and has nothing to do with perception. We hope that this study can provide some new enlightenment for the scientific coordination of the opposing relationship between matter and consciousness.

Keywords: contradiction; free energy principle; hard problem of consciousness; holistic philosophy; perception; quantum mechanics; reductionism.

Copyright © 2022 Chen and Chen.

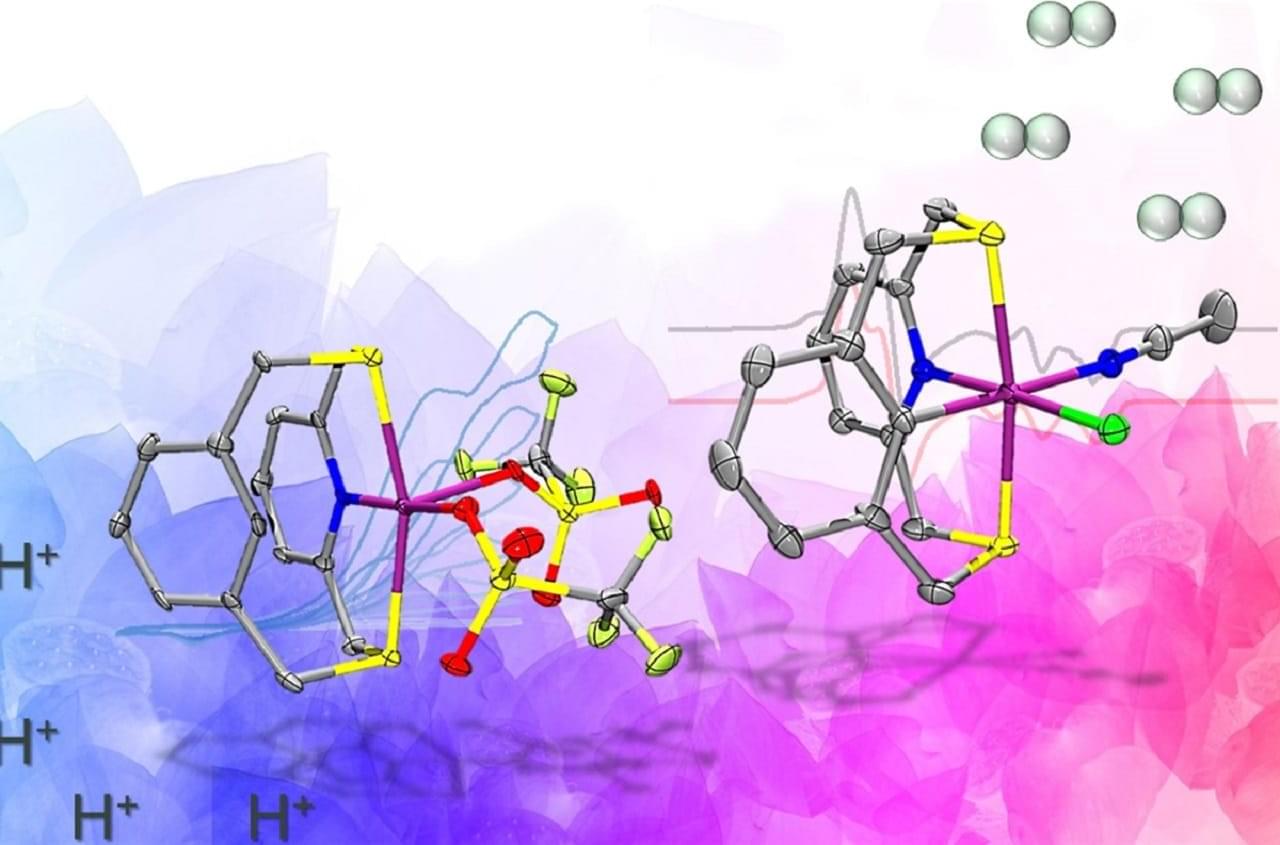

In a new study, EPFL scientists visualized the intricate interplay between electron dynamics and solvent polarization in the charge-transfer-to-solvent (CTTS) process. This is a significant step toward understanding a process that underlies many chemical phenomena, and it might be the first step to improving energy conversion technologies.

CTTS is like a microscopic dance in which an electron from a dissolved material (like salt) is released and becomes part of the water. This produces a “hydrated” electron, essential for several aqueous processes, including those necessary for life. Comprehending CTTS is crucial to understanding the motion of electrons in solutions.

In a recent study, EPFL researchers Jinggang Lan, Majed Chergui, and Alfredo Pasquarello examined the complex interactions between electrons and their solvent surroundings. The work was mostly done at EPFL, with Jinggang Lan’s final contributions made while he was a postdoctoral fellow at the Simons Center for Computational Physical Chemistry at New York University.

What do albatrosses searching for food, stock market fluctuations, and the dispersal patterns of seeds in the wind have in common?

They all exhibit a type of movement pattern called Lévy walk, which is characterized by a flurry of short, localized movements interspersed with occasional, long leaps. For living organisms, this is an optimal strategy for balancing the exploitation of nearby resources with the exploration of new opportunities when the distribution of resources is sparse and unknown.

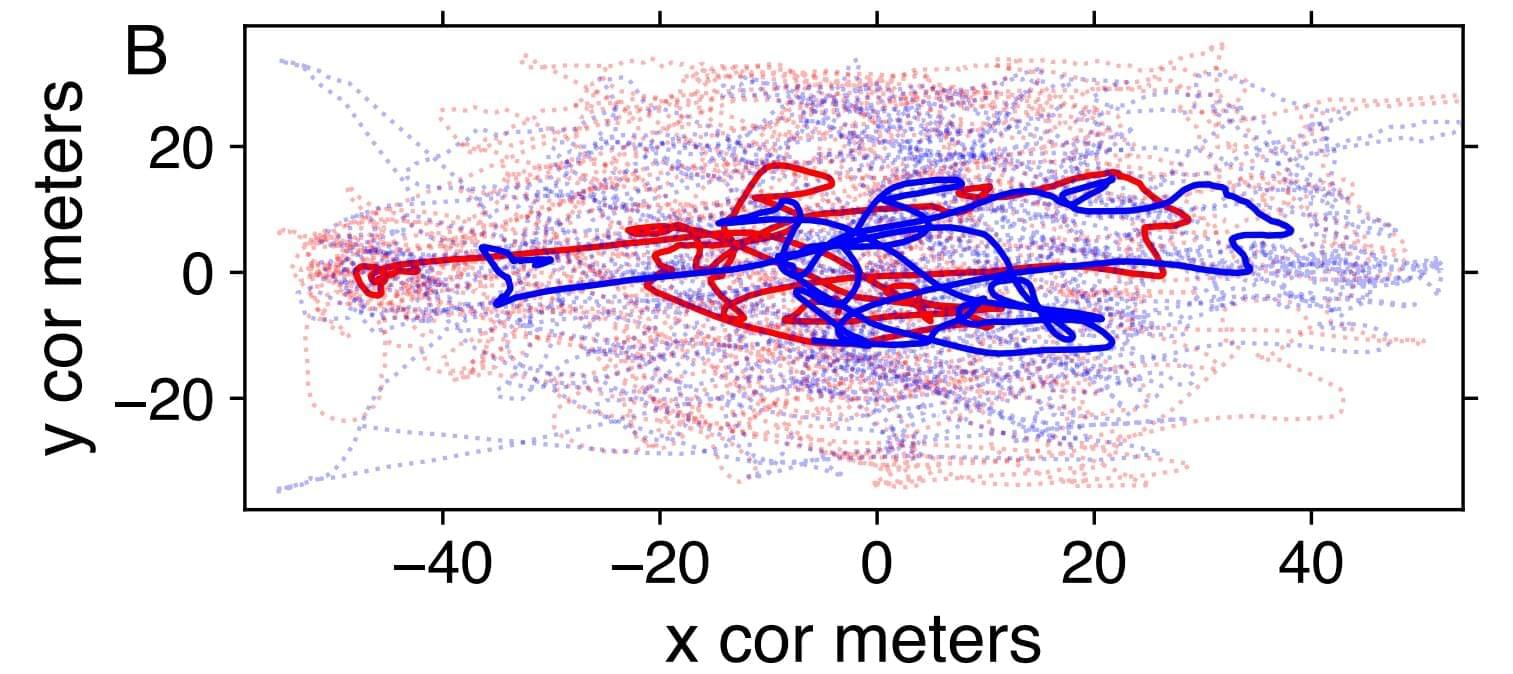

Originally described in the context of particles drifting through liquid, Lévy walk has been found to accurately describe a very wide range of phenomena, from cold atom dynamics to swarming bacteria. And now, a study published in Complexity has for the first time found Lévy walk in the movements of competing groups of organisms: soccer teams.
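The movement pattern described above, many short steps punctuated by rare long leaps, can be sketched in a few lines of Python. This is only an illustrative toy, not the method used in the Complexity study: it assumes step lengths follow a Pareto (power-law) tail, picks directions uniformly at random, and records only the turning points of the path. The function names and the parameters `alpha`, `min_step`, and `seed` are hypothetical choices made for this example.

```python
import math
import random


def levy_step(rng, alpha=1.5, min_step=1.0):
    """Draw a step length with a power-law tail: P(L > x) = (min_step / x) ** alpha.

    Uses inverse-transform sampling. Smaller alpha means heavier tails,
    so long leaps become more frequent.
    """
    u = 1.0 - rng.random()  # uniform in (0, 1], so u is never zero
    return min_step * u ** (-1.0 / alpha)


def levy_walk(n_steps, alpha=1.5, min_step=1.0, seed=0):
    """Simulate a 2D path with heavy-tailed step lengths.

    Returns the list of visited (x, y) turning points and the list of
    step lengths. Assumption: each step goes in a uniformly random direction.
    """
    rng = random.Random(seed)  # seeded for reproducibility
    x, y = 0.0, 0.0
    path = [(x, y)]
    lengths = []
    for _ in range(n_steps):
        length = levy_step(rng, alpha, min_step)
        angle = rng.uniform(0.0, 2.0 * math.pi)
        x += length * math.cos(angle)
        y += length * math.sin(angle)
        path.append((x, y))
        lengths.append(length)
    return path, lengths


if __name__ == "__main__":
    path, lengths = levy_walk(10_000)
    ordered = sorted(lengths)
    median = ordered[len(ordered) // 2]
    # Most steps are short, but the longest one dwarfs the typical step.
    print(f"median step: {median:.2f}, longest step: {ordered[-1]:.2f}")
```

Comparing the median step with the longest step shows the hallmark of the pattern: typical moves stay local, while a handful of leaps carry the walker far from where it started. Lowering `alpha` toward 1 makes such leaps more common; raising it above 2 makes the path look increasingly like ordinary diffusion.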

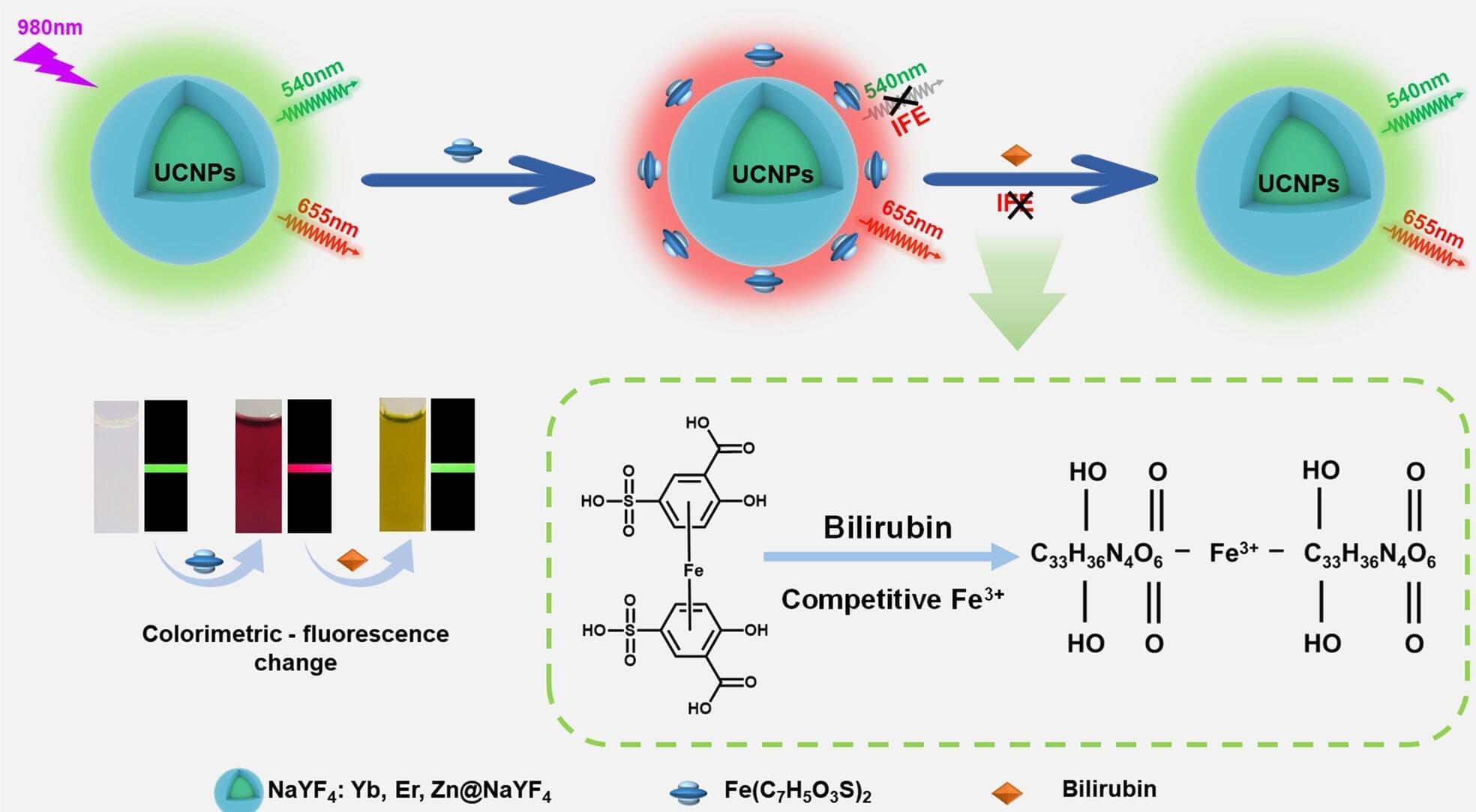

A research team led by Prof. Jiang Changlong from the Hefei Institutes of Physical Science of the Chinese Academy of Sciences has developed an innovative dual-mode sensing platform using upconversion nanoparticles (UCNPs). This platform integrates fluorescence and colorimetric methods, offering a highly sensitive and low-detection-limit solution for bilirubin detection in complex biological samples.

The findings, published in Analytical Chemistry, offer a new technological approach for the early diagnosis of jaundice.

Jaundice is a critical health issue in neonates, affecting 60% of newborns and contributing to early neonatal mortality. Elevated free bilirubin levels indicate jaundice; levels in healthy individuals range from 1.7 μM to 10.2 μM, and concentrations below 32 μM typically do not produce classic symptoms. Rapid and accurate detection of bilirubin in neonates is therefore critical.