The benefits of exercise for physical and mental health are well documented, but a new Yale and VA Connecticut study sheds light on the role genetics plays in physical activity. Genetics accounts for some of the differences between individuals, and the study shows that the biology of physical activity at leisure differs from that of physical activity at work and at home.

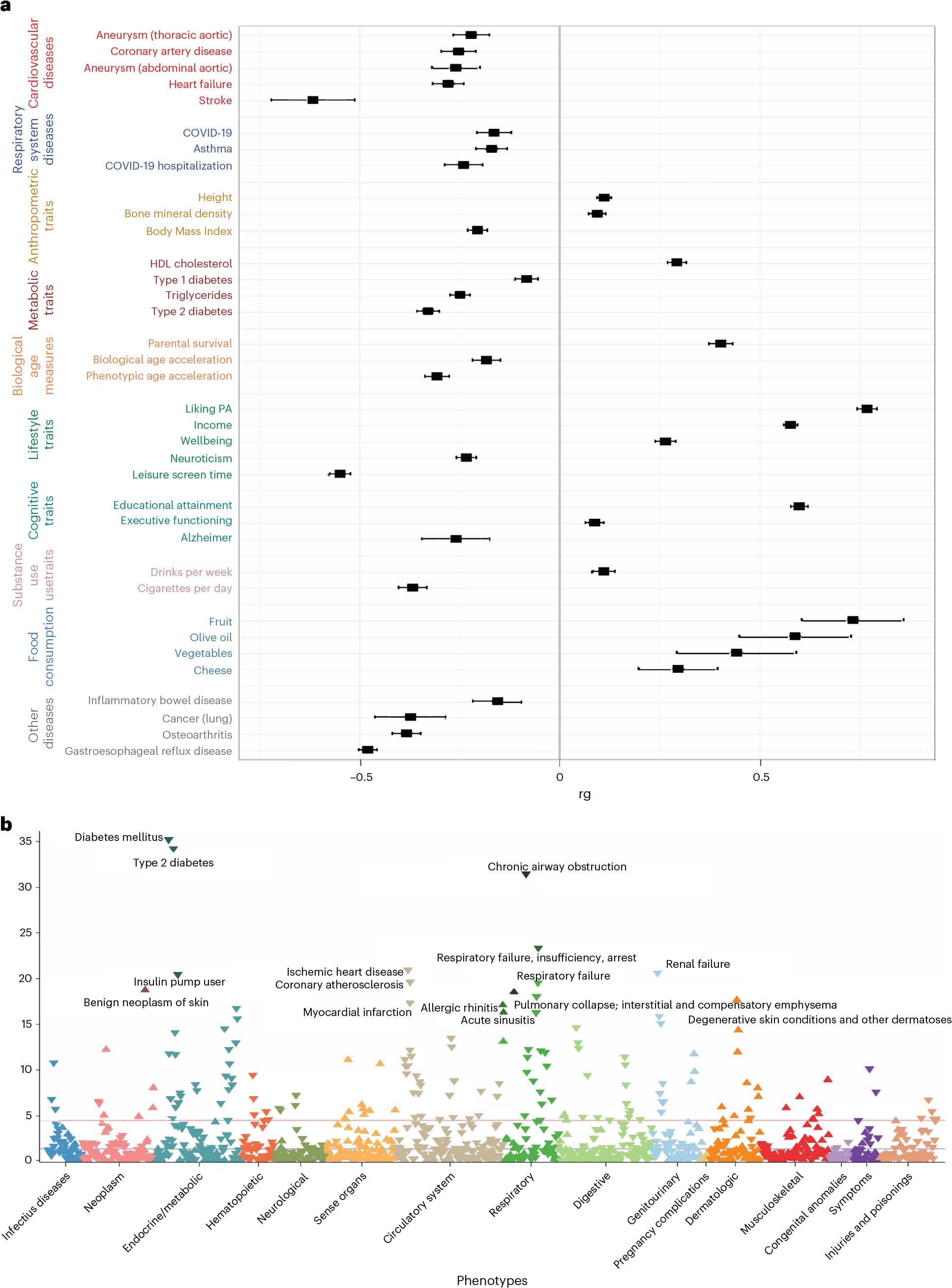

Using data from the Million Veteran Program (MVP), a genetic biobank run by the U.S. Department of Veterans Affairs, the researchers analyzed genetic influences on physical activity during leisure time, at work, and at home. They wanted to understand how genetics affects these three types of physical activity and to compare their health benefits.

The study included nearly 190,000 individuals of European ancestry, 27,044 of African ancestry, and 10,263 of Latin-American ancestry. To study the genetics of physical activity during leisure time, the researchers also added data from the UK Biobank, which included about 350,000 individuals.