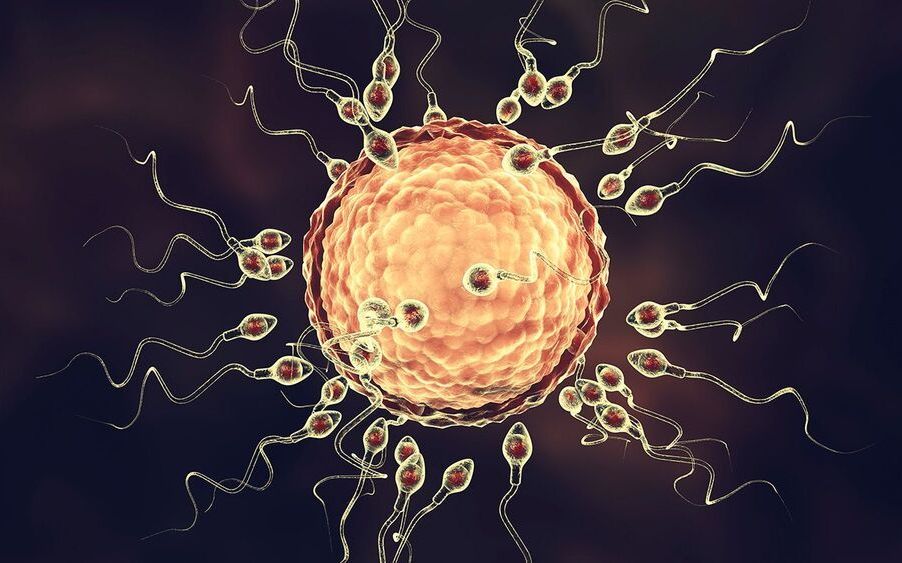

Biological systems are dynamical, constantly exchanging energy and matter with the environment in order to maintain the non-equilibrium state synonymous with living. Developments in observational techniques have allowed us to study biological dynamics on increasingly small scales. Such studies have revealed evidence of quantum mechanical effects, which cannot be accounted for by classical physics, in a range of biological processes. Quantum biology is the study of such processes, and here we provide an outline of the current state of the field, as well as insights into future directions.

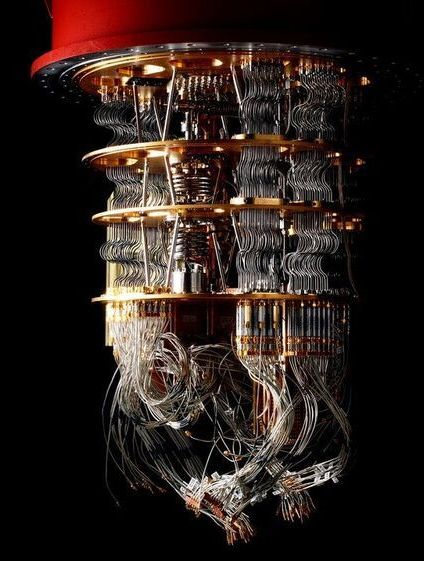

Quantum mechanics is the fundamental theory that describes the properties of subatomic particles, atoms, molecules, molecular assemblies and possibly beyond. It operates on the nanometre and sub-nanometre scales and underlies fundamental life processes such as photosynthesis, respiration and vision. In quantum mechanics, all objects have wave-like properties; when such objects interact, quantum coherence describes the correlations between their physical quantities that arise from this wave-like nature.
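As an illustration of what coherence means formally, consider a minimal two-state sketch. The state labels $|1\rangle$, $|2\rangle$ and the amplitudes $c_1$, $c_2$ are notation assumed here for illustration and are not taken from the text above. For a superposition $|\psi\rangle = c_1|1\rangle + c_2|2\rangle$, the density matrix is

$$
\rho = |\psi\rangle\langle\psi| =
\begin{pmatrix}
|c_1|^2 & c_1 c_2^{*} \\
c_1^{*} c_2 & |c_2|^2
\end{pmatrix}.
$$

The diagonal elements are the populations of the two states, while the off-diagonal elements $c_1 c_2^{*}$ and $c_1^{*} c_2$ are the coherences: they carry the relative phase between the two components and are responsible for interference. When these off-diagonal terms vanish, the system behaves like a classical statistical mixture of the two states.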

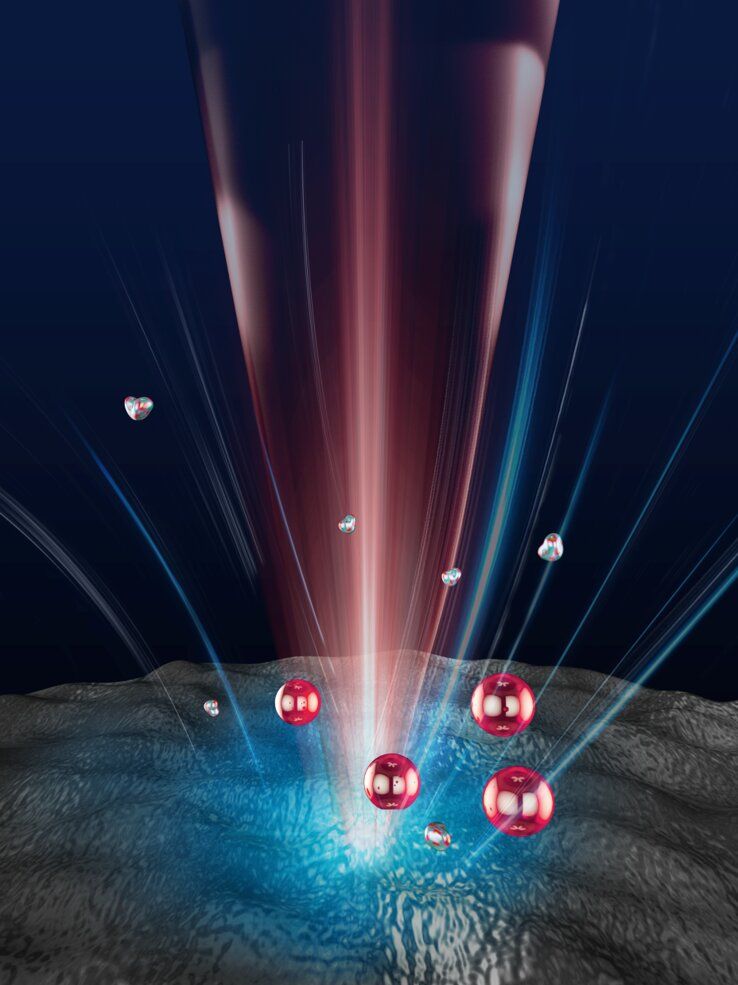

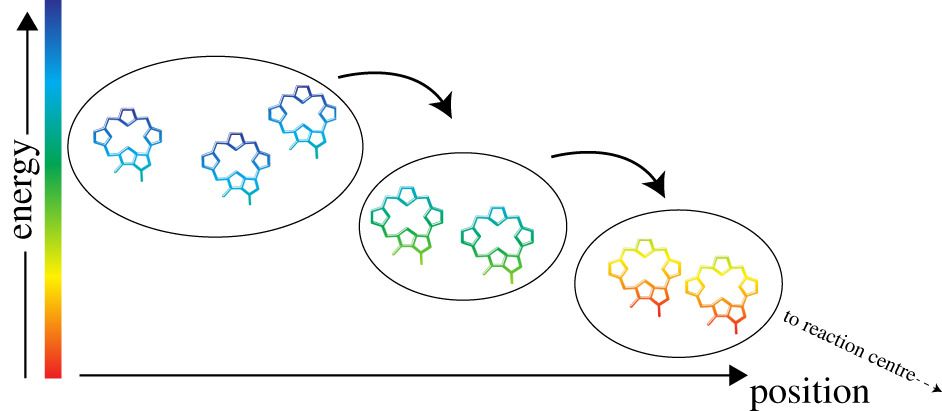

In photosynthesis, respiration and vision, the models developed in the past are fundamentally quantum mechanical. They describe energy transfer and electron transfer in a framework based on surface hopping. The dynamics described by these models are often ‘exponential’ and follow from the application of Fermi’s Golden Rule [1, 2]. Because the rate of transfer is averaged over a large and quasi-continuous distribution of final states, the calculated dynamics no longer display coherences or interference phenomena. In photosynthetic reaction centres and light-harvesting complexes, oscillatory phenomena were observed in numerous studies performed in the 1990s and were typically ascribed to the formation of vibrational or mixed electronic–vibrational wavepackets.
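The contrast between ‘exponential’ and oscillatory dynamics can be made explicit with a short sketch. The symbols below ($V_{fi}$ for the coupling matrix element between initial and final states, $\rho(E_f)$ for the density of final states, $P_i$ and $P_f$ for the populations) are standard textbook notation assumed here, not notation defined in this article. Fermi’s Golden Rule gives the transfer rate as

$$
k_{i \to f} = \frac{2\pi}{\hbar}\, |V_{fi}|^{2}\, \rho(E_f),
$$

so the population of the initial state obeys a simple rate equation and decays exponentially:

$$
\frac{dP_i}{dt} = -k_{i \to f}\, P_i
\quad\Longrightarrow\quad
P_i(t) = P_i(0)\, e^{-k_{i \to f} t}.
$$

By comparison, for an idealised pair of degenerate states coupled by $V$ and isolated from any continuum, the population of the final state oscillates coherently:

$$
P_f(t) = \sin^{2}\!\left(\frac{V t}{\hbar}\right).
$$

The oscillation in the second case is the signature of coherence; it is washed out in the first case by the sum over a quasi-continuous set of final states.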