Gesture interface company Leap Motion is announcing an ambitious, but still very early, plan for an augmented reality platform based on its hand tracking system. The system is called Project North Star, and it includes a design for a headset that Leap Motion claims would cost less than $100 to produce at scale. The headset would be equipped with a Leap Motion sensor, so users could precisely manipulate objects with their hands, something the company has previously offered for desktop and VR displays.

Project North Star isn’t a new consumer headset, nor will Leap Motion be selling a version to developers at this point. Instead, the company is releasing the necessary hardware specifications and software under an open-source license next week. “We hope that these designs will inspire a new generation of experimental AR systems that will shift the conversation from what an AR system should look like, to what an AR experience should feel like,” the company writes.

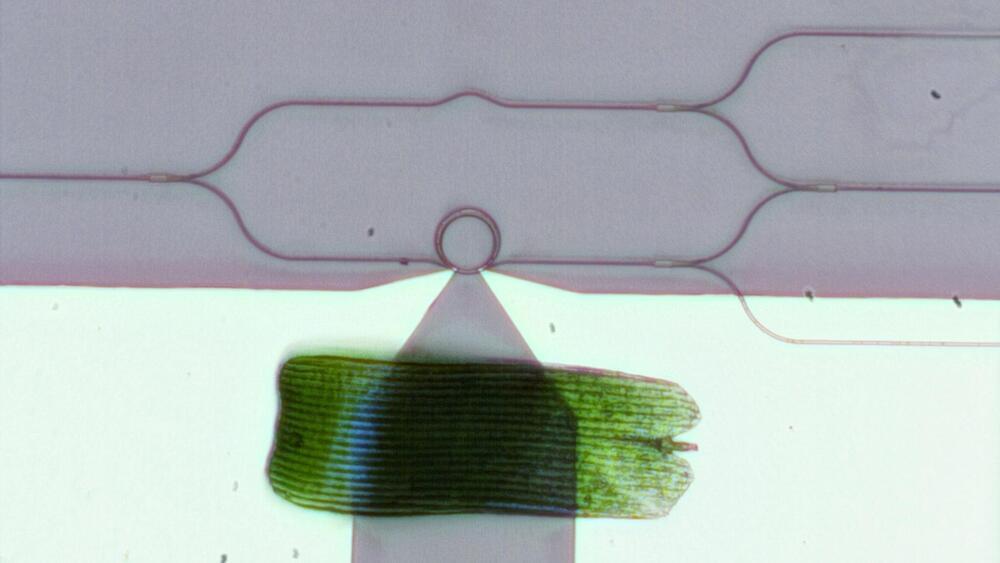

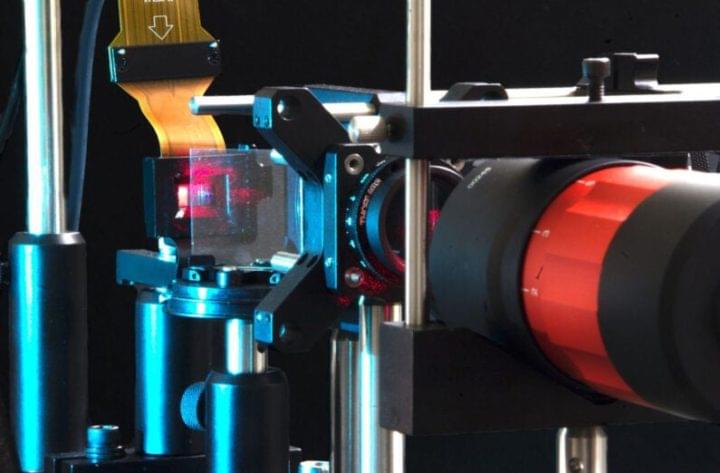

The headset design uses two fast-refreshing 3.5-inch LCD displays with a resolution of 1600×1440 per eye. The displays’ light reflects off a visor, so the user perceives the image as a transparent overlay on the real world. Leap Motion says this offers a field of view that’s 95 degrees high and 70 degrees wide, larger than that of most AR systems available today. The Leap Motion sensor fits above the eyes and tracks hand motion across a far wider field of view, around 180 degrees both horizontally and vertically.