Neural networks are distributed computing structures inspired by the biological brain. They aim to achieve cognitive performance comparable to that of humans, but in a much shorter time.

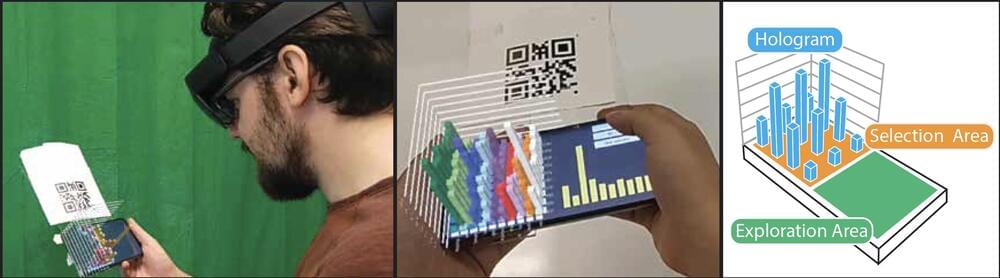

These technologies now form the basis of machine learning and artificial intelligence systems that can perceive their environment, adapt their behavior by analyzing the effects of previous actions, and operate autonomously. They are used in many fields, including speech and image recognition and synthesis, autonomous driving, augmented reality, bioinformatics, genetic and molecular sequencing, and high-performance computing.

Unlike conventional computing approaches, neural networks must first be "trained" on a large amount of known data before they can perform complex functions; the network learns from this experience and adapts accordingly. Training is an extremely energy-intensive process, and as computing power increases, the energy consumed by neural networks grows very rapidly, doubling roughly every six months.
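To make "training" concrete, here is a minimal sketch of the simplest possible case: a single artificial neuron that learns the logical AND function from four labeled examples by gradient descent. The toy dataset, the sigmoid activation, the learning rate, the epoch count, and the function names (`predict`, `train`) are all illustrative assumptions, not the method of any particular system. Real networks have many layers and millions or billions of weights trained on far larger datasets, which is where the computational and energy cost comes from.

```python
import math
import random

# Hypothetical toy dataset ("known information"): inputs and target outputs for logical AND.
DATA = [((0.0, 0.0), 0.0), ((0.0, 1.0), 0.0), ((1.0, 0.0), 0.0), ((1.0, 1.0), 1.0)]


def sigmoid(z: float) -> float:
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, bias, x):
    """Output of a single neuron: weighted sum of the inputs passed through a sigmoid."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def train(data, epochs=5000, learning_rate=0.5, seed=0):
    """Adjust weights by gradient descent on the cross-entropy loss, one example at a time."""
    rng = random.Random(seed)
    weights = [rng.uniform(-0.5, 0.5) for _ in range(2)]  # small random starting weights
    bias = 0.0
    for _ in range(epochs):
        for x, target in data:
            # For a sigmoid output with cross-entropy loss, dLoss/dz = prediction - target.
            error = predict(weights, bias, x) - target
            # Nudge each weight against its gradient to reduce the error on this example.
            weights = [w - learning_rate * error * xi for w, xi in zip(weights, x)]
            bias -= learning_rate * error
    return weights, bias


if __name__ == "__main__":
    weights, bias = train(DATA)
    for x, target in DATA:
        print(x, target, round(predict(weights, bias, x), 3))
```

Each pass over the data nudges the weights slightly to reduce the prediction error; after enough passes, the neuron's outputs approach the target values. Repeating this kind of update loop over vastly larger models and datasets is what makes training so expensive.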