Large language models (LLMs), the most renowned of which is ChatGPT, have become markedly better at processing and generating human language over the past few years. However, the extent to which these models emulate the neural processes that support language processing in the human brain has yet to be fully elucidated.

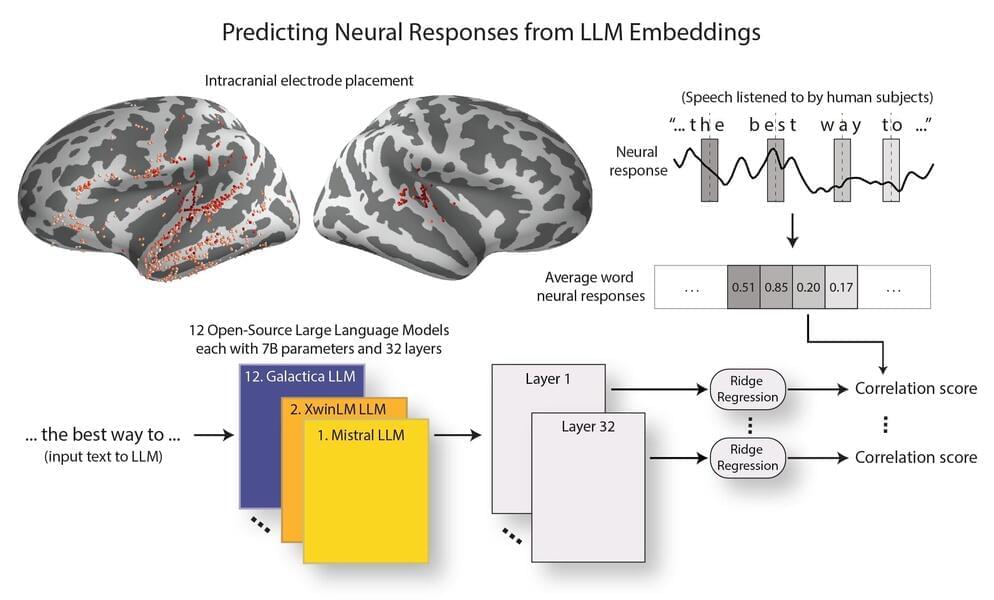

Researchers at Columbia University and the Feinstein Institutes for Medical Research at Northwell Health recently carried out a study investigating the similarities between LLM representations and neural responses in the human brain. Their findings, published in Nature Machine Intelligence, suggest that as LLMs become more advanced, they not only perform better but also become more brain-like.

“Our original inspiration for this paper came from the recent explosion in the landscape of LLMs and neuro-AI research,” Gavin Mischler, first author of the paper, told Tech Xplore.