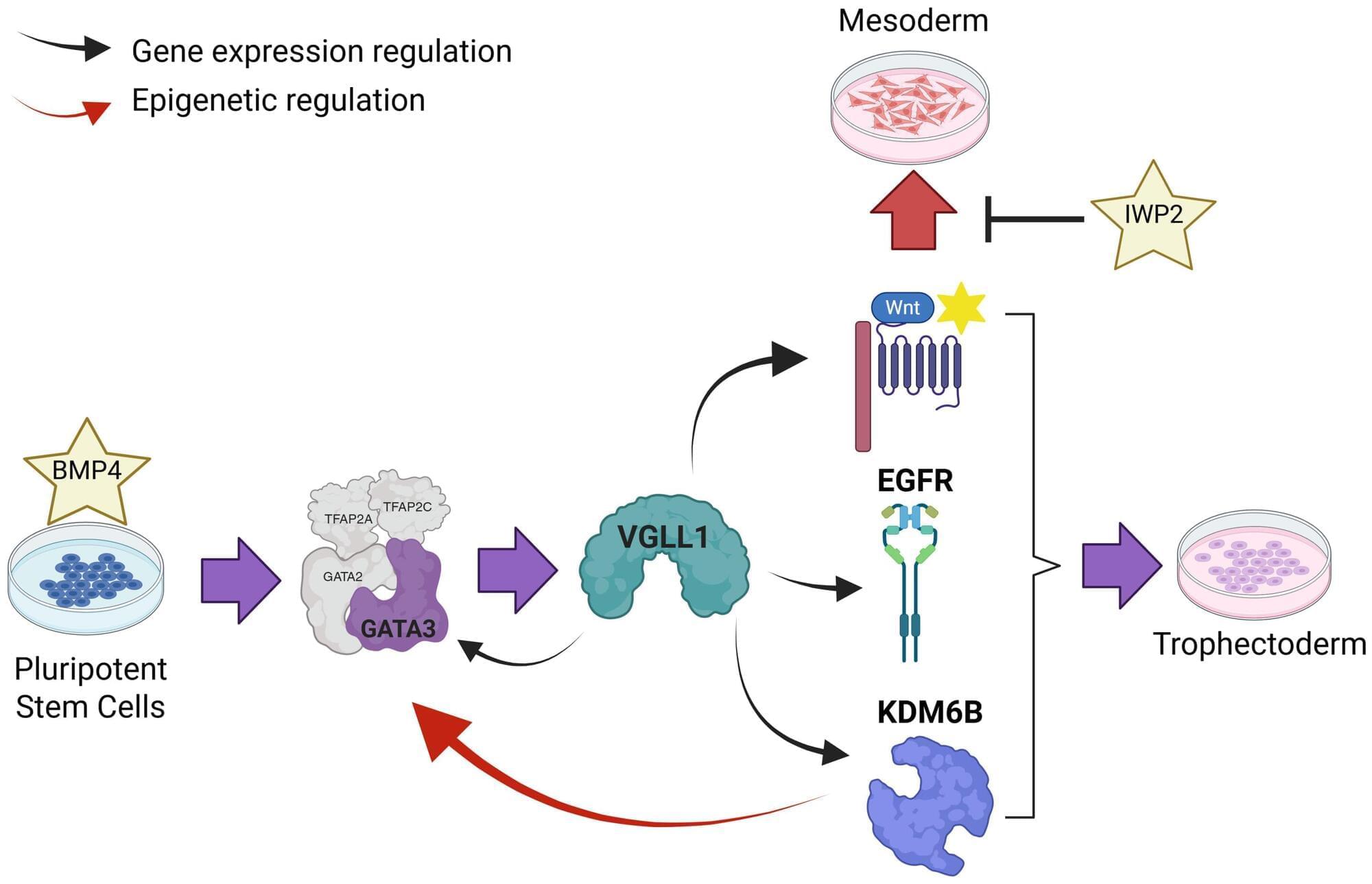

A gene that turns on very early in embryonic development could be key to the formation of the placenta, which provides the developing fetus with what it needs to thrive during gestation.

The placenta supplies all of the nutrition, oxygen and antibodies that a developing human fetus needs throughout gestation. This temporary organ begins to form six to 12 days after conception, as the embryo implants itself in the lining of the uterus. Failure of the placenta to form correctly is the second leading cause of miscarriage in early pregnancy, after fetal genetic abnormalities that are incompatible with life.

However, the initial stages of placental formation have remained a mystery because ethical considerations and technical constraints make the process difficult to study in humans.