Even the CIA has publicly released data on the psychic phenomenon.

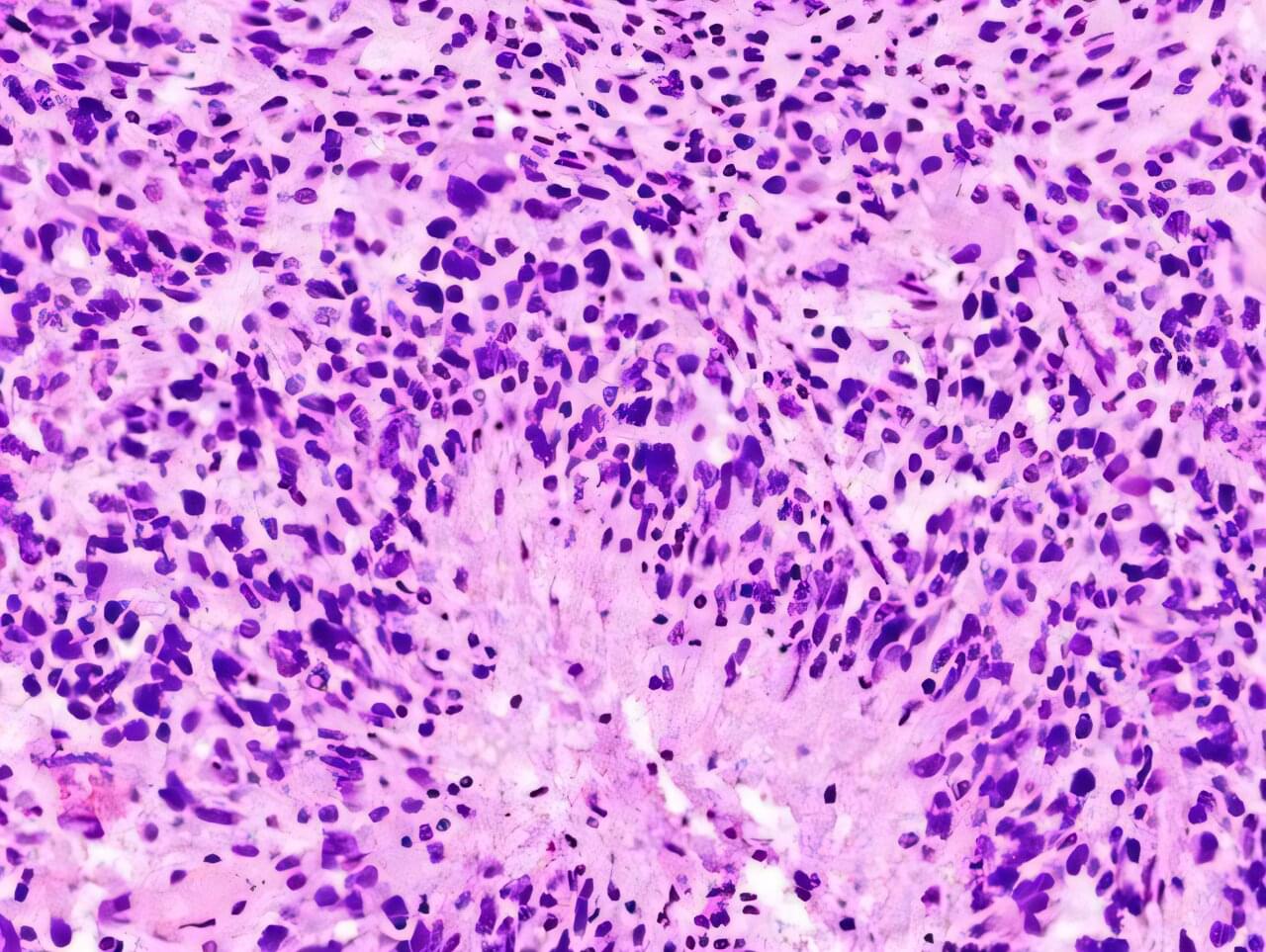

Research by University of Sydney scientists has uncovered a mechanism that may explain why glioblastoma returns after treatment, offering new clues for future therapies which they will now investigate as part of an Australian industry collaboration.

Glioblastoma is one of the deadliest brain cancers, with a median survival of just 15 months. Although patients receive surgery and chemotherapy, more than 1,250 clinical trials over the past 20 years have struggled to improve survival rates.

Published in Nature Communications, the study shows that a small population of drug-tolerant cells known as “persister cells” rewires its metabolism to survive chemotherapy, using an unexpected ally as an invisibility cloak: a fertility gene called PRDM9.

Science has a rich tradition of physics by imagination. From the 16th century, scientists and philosophers have conjured ‘demons’ that test the limits of our strongest theories of reality.

Three stand out today: Laplace’s demon, capable of perfectly predicting the future; Loschmidt’s demon, which could reverse time and violate the second law of thermodynamics; and Maxwell’s demon, which could create a working heat engine at no cost.

Though imaginary, these paradoxical beings have pushed physicists towards sharper theories. From quantum theory to thermodynamics, these demons have legacies that we still feel today.

Image: Antonio Sortino

Three thought experiments involving “demons” have haunted physics for centuries. What should we make of them today?

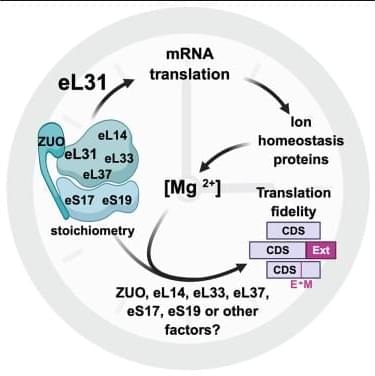

Lamb et al. show that the circadian clock rhythmically remodels ribosome composition in Neurospora crassa. Clock-regulated incorporation of the ribosomal protein eL31 is required for rhythmic translation and translation fidelity, linking temporal ribosome remodeling to daily changes in proteome diversity.

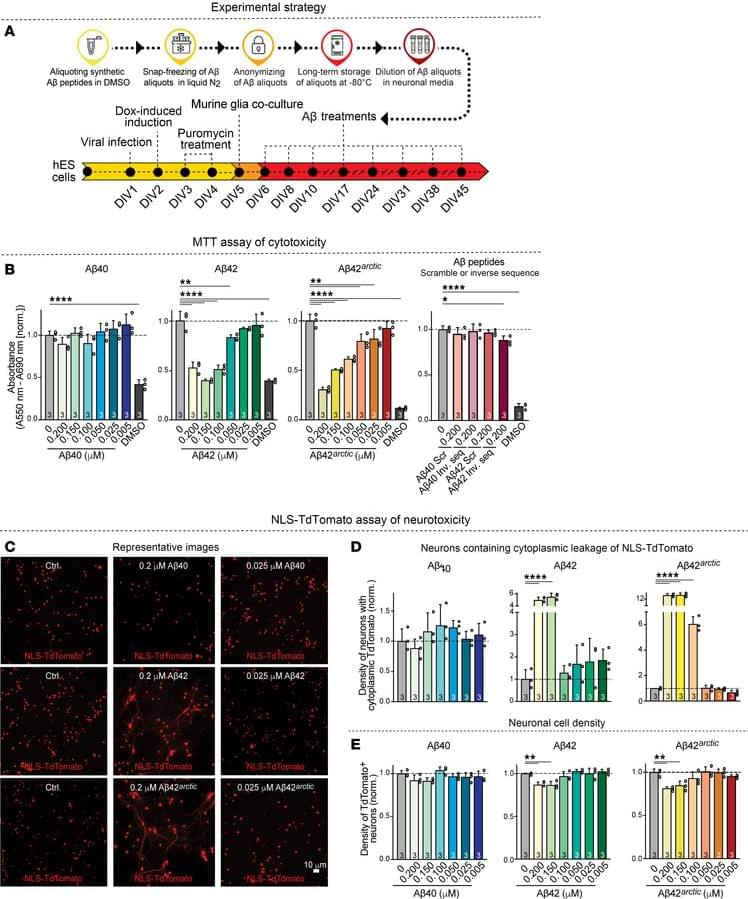

This issue’s cover features work by Alberto Siddu & team on the promotion of synapse formation in human neurons by free amyloid-beta peptides, in contrast to aggregated forms that are synaptotoxic:

The image shows a human induced neuron exposed to a nontoxic concentration of amyloid-beta42 peptide, revealing enhanced synaptogenesis, visible as synaptic puncta along the dendritic arbor.

Address correspondence to: Alberto Siddu, Lorry Lokey Stem Cell Building, 265 Campus Dr., Room G1015, Stanford, California 94305, USA. Phone: 650.721.1418; Email: [email protected]. Or to: Thomas C. Südhof, Lorry Lokey Stem Cell Building, 265 Campus Dr., Room G1021, Stanford, California 94305, USA. Phone: 650.721.1418; Email: [email protected].

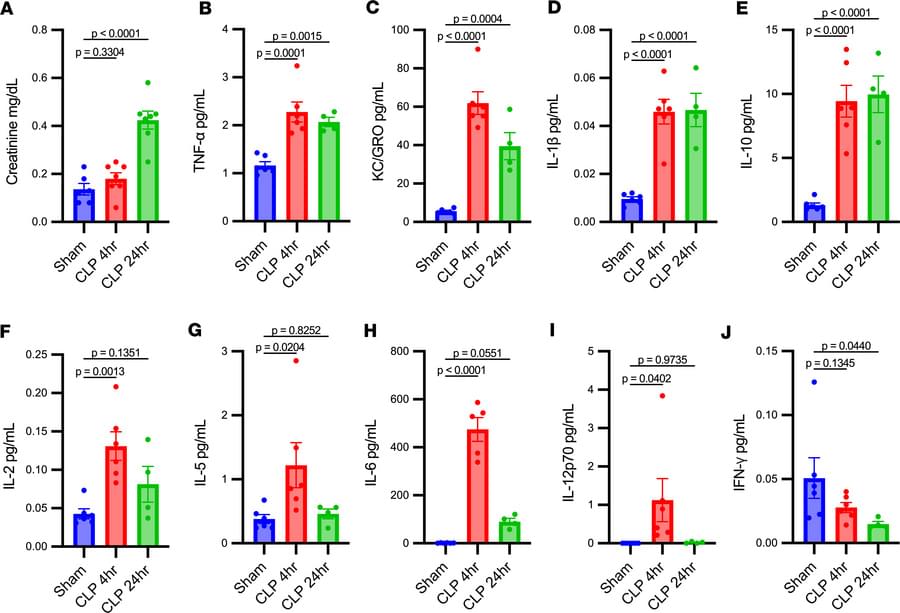

Using a new strategy for quantifying mitochondrial DNA (mtDNA), Mark L. Hepokoski & team show that the release of mtDNA from the kidney directly contributes to interleukin-6 release during sepsis-associated acute kidney injury (AKI).

1VA San Diego Healthcare System, San Diego, California, USA.

2Division of Pulmonary and Critical Care and Sleep Medicine, UCSD, La Jolla, California, USA.

3Department of Critical Care Medicine, Yantai Yuhuangding Hospital, Affiliated with Medical College of Qingdao University, Yantai, Shandong, China.

It’s well known that exercise is good for health and helps to prevent serious diseases, like cancer and heart disease, along with simply making people feel better overall. However, the molecular mechanisms by which it prevents cancer or slows its progression are not well understood. A new study, published in the Proceedings of the National Academy of Sciences, reveals how exercise can increase glucose and oxygen uptake in the skeletal and cardiac muscles, rather than leaving them to “feed” tumors.

Reduced tumor growth in exercised mice

To study how exercise-induced metabolic changes affect tumor growth, the research team injected mice with breast cancer cells and fed some of the mice a high-fat diet (HFD), with 60% of calories from fat, while others were fed a normal diet as a control. The HFD mice were given running wheels, and exercise was voluntary. The team used stable isotope tracer studies with [U-13C6]glucose and [U-13C5]glutamine to track metabolic changes.

After 4 weeks of wheel running, the team found a significant difference in tumor size between mice that chose to exercise and those that did not, even when both groups were fed the same diet.

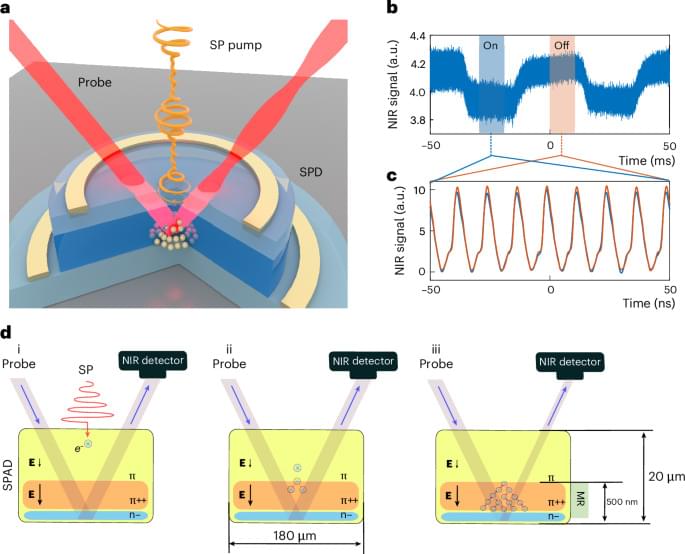

For a long time, this has been a major hurdle in optics. Light is an incredible tool for fast, efficient communication and futuristic quantum computers, but it’s notoriously hard to control at such delicate, “single-photon” levels.

Electron avalanche multiplication can enable an all-optical modulator controlled by single photons.