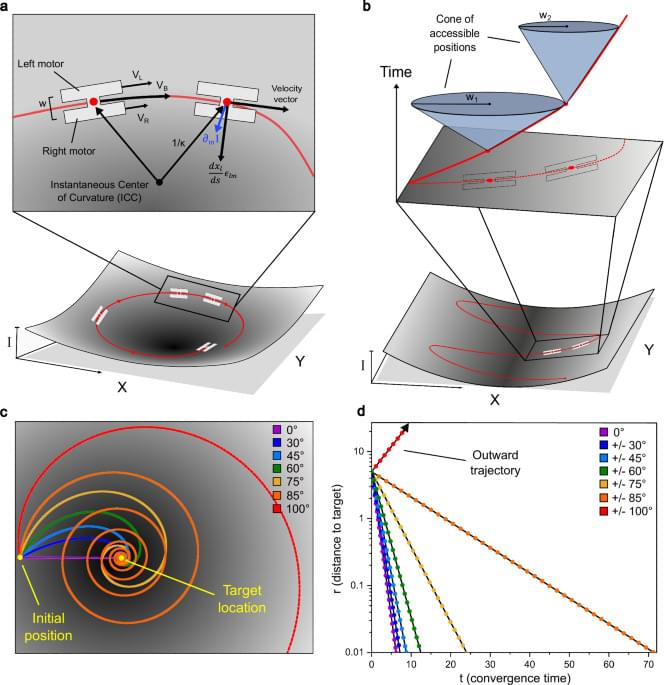

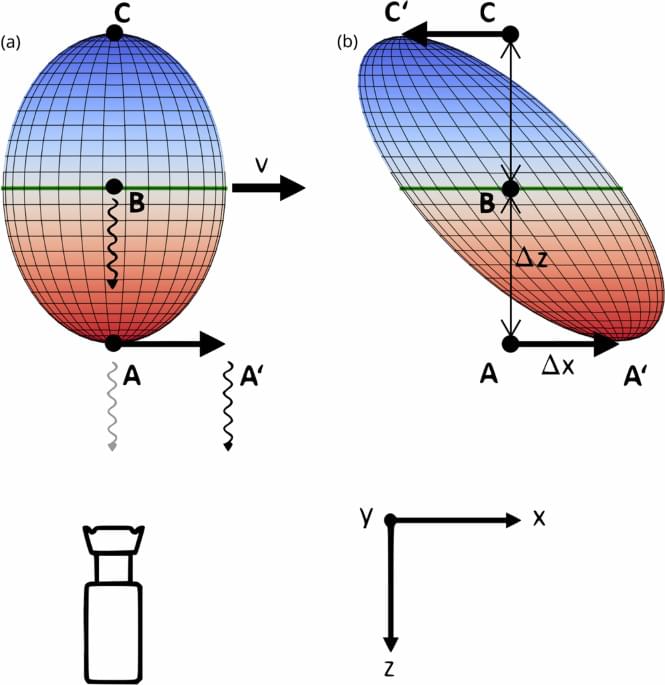

Not metaphorically, but literally. The light intensity field acts as an artificial “gravity,” and the robot’s trajectory becomes a null geodesic, the same kind of path light takes in curved spacetime.

By calculating the robot’s “energy” and “angular momentum” (conserved quantities, just as in planetary orbits), the authors mathematically prove that robots starting within 90 degrees of a target converge to it exponentially, every time. This is a theorem, not a simulation result or wishful thinking.
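To make the "angular momentum" idea concrete, here is a minimal sketch based on the optics analogy rather than on the paper's robot model. It traces a light ray through a hypothetical radially symmetric index field `n(x, y)` (an assumption chosen for illustration) and tracks the quantity `n * (x*sin(th) - y*cos(th))`, which ray optics says is conserved along the ray in any radially symmetric medium. The field, the starting state, and the step size are all illustrative choices.

```python
import math

def n(x, y):
    """Hypothetical radially symmetric index field, largest at the target (origin)."""
    return 1.0 + 1.0 / (1.0 + x * x + y * y)

def grad_n(x, y):
    """Analytic gradient of n."""
    d = (1.0 + x * x + y * y) ** 2
    return (-2.0 * x / d, -2.0 * y / d)

def deriv(state):
    """Ray equations in arc length: unit-speed motion plus heading change."""
    x, y, th = state
    gx, gy = grad_n(x, y)
    # Ray curvature = component of grad(n) normal to the heading, divided by n.
    kappa = (-math.sin(th) * gx + math.cos(th) * gy) / n(x, y)
    return (math.cos(th), math.sin(th), kappa)

def rk4_step(state, h):
    """One classical Runge-Kutta step of size h."""
    def shifted(s, k, c):
        return tuple(si + c * ki for si, ki in zip(s, k))
    k1 = deriv(state)
    k2 = deriv(shifted(state, k1, h / 2))
    k3 = deriv(shifted(state, k2, h / 2))
    k4 = deriv(shifted(state, k3, h))
    return tuple(
        s + h / 6 * (a + 2 * b + 2 * c + d)
        for s, a, b, c, d in zip(state, k1, k2, k3, k4)
    )

def angular_momentum(state):
    """Optical analogue of angular momentum: n times (position x heading)."""
    x, y, th = state
    return n(x, y) * (x * math.sin(th) - y * math.cos(th))

state = (2.0, 0.0, 2.5)  # x, y, heading in radians (illustrative values)
L0 = angular_momentum(state)
max_drift = 0.0
for _ in range(500):
    state = rk4_step(state, 0.01)
    max_drift = max(max_drift, abs(angular_momentum(state) - L0))

print(f"L0 = {L0:.6f}, max drift = {max_drift:.2e}")
```

The drift stays at the level of integration error, which is the numerical signature of a conserved quantity. A conservation law like this is the kind of constraint that lets a convergence claim be proved rather than only observed in simulation.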

The authors use the Schwarz-Christoffel transformation (a conformal map from complex analysis) to “unfold” a maze onto a flat rectangle, program a simple path there, then “fold” it back. The result: a single, static light pattern that both guides robots and acts as invisible walls they can’t cross.
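The unfold, plan, fold idea can be sketched on the simplest Schwarz-Christoffel case: a single 270-degree corner instead of a full maze. This is an illustration under stated assumptions, not the paper's construction: the map here sends the corner region to a half-plane (not a rectangle), and the helper names `unfold`, `fold`, `plan_path`, and `in_wall` are hypothetical. The forbidden "wall" is the quadrant with x > 0 and y < 0.

```python
import cmath
import math

ALPHA = 1.5  # interior angle of the corner in units of pi (270 degrees)

def unfold(z):
    """Map the 270-degree wedge (angle in [0, 3*pi/2]) onto the upper half-plane."""
    r, theta = abs(z), cmath.phase(z)
    if theta < 0:
        theta += 2 * math.pi  # measure the angle from 0 to 2*pi
    return cmath.rect(r ** (1 / ALPHA), theta / ALPHA)

def fold(w):
    """Inverse map: send the upper half-plane back onto the wedge."""
    return cmath.rect(abs(w) ** ALPHA, cmath.phase(w) * ALPHA)

def plan_path(start, goal, steps=50):
    """Draw a straight line in the unfolded plane, then fold it back."""
    w0, w1 = unfold(start), unfold(goal)
    flat = [w0 + (w1 - w0) * k / steps for k in range(steps + 1)]
    return [fold(w) for w in flat]

def in_wall(z):
    """True if z lies in the forbidden quadrant (x > 0, y < 0)."""
    return z.real > 0 and z.imag < 0

start = complex(2.0, 0.3)    # just above the horizontal wall
goal = complex(-0.3, -2.0)   # just left of the vertical wall
path = plan_path(start, goal)

# A naive straight line in the original plane cuts through the wall.
naive = [start + (goal - start) * k / 50 for k in range(51)]

print("folded path hits wall:", any(in_wall(p) for p in path))
print("naive line hits wall: ", any(in_wall(p) for p in naive))
```

The straight line in the unfolded half-plane never leaves it, so the folded path bends around the corner and stays inside the allowed region, while the naive straight line does not. The same reasoning is what would let one static pattern both guide the robot and act as a boundary.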

npj Robotics: Artificial spacetimes for reactive control of resource-limited robots. npj Robot 3, 39 (2025). https://doi.org/10.1038/s44182-025-00058-9