Discover how St. Jude researchers uncovered a previously unknown cell death pathway, triggered by innate immune activation and nutrient scarcity, that may help treat cancer.

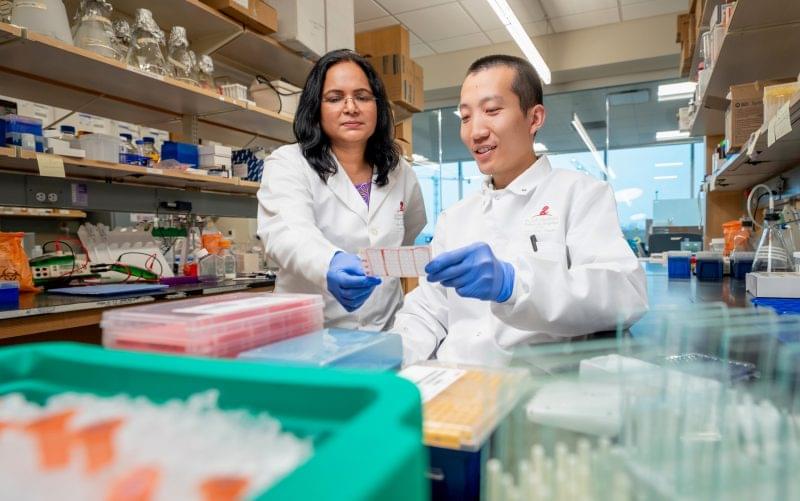

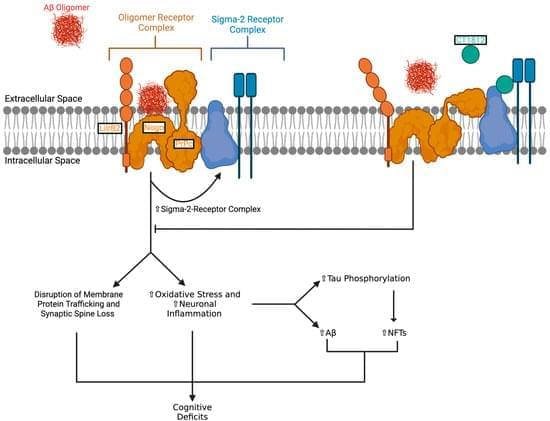

Alzheimer’s disease (AD) is a neurodegenerative disease marked by the accumulation of toxic amyloid-beta (Aβ) oligomers, which are thought to cause synaptic dysfunction and contribute to neurodegeneration. CT1812, a small-molecule sigma-2 receptor antagonist, is currently being investigated as a potential disease-modifying treatment for AD. It acts by displacing Aβ oligomers from receptors on neurons into the cerebrospinal fluid, preventing their interaction with those receptors. Preclinical studies and early clinical trials of CT1812 have shown promising results and suggest that it could slow AD progression. This review outlines the role of Aβ oligomers in AD, CT1812’s mechanism of action, and the efficacy and limitations of CT1812 reported in preclinical and clinical studies.

People who treat others with compassion often feel more at ease themselves. This is the key finding of a new study by Majlinda Zhuniq, Dr. Friedericke Winter, and Professor Corina Aguilar-Raab from the University of Mannheim. Their study was recently published in the journal Scientific Reports.

While the link between self-compassion and well-being is well established, the corresponding effect of compassion for others has received little research attention. In a meta-analysis, the research team analyzed data from more than 40 individual studies.

The results showed that people who empathize with others, support them, or want to help them report greater overall life satisfaction, experience more joy, and see more meaning in life. On average, these people’s psychological well-being was higher. The link between compassion and a reduction in negative feelings, such as stress or sadness, was weaker, although slight positive trends were observed here as well.

Gemini North captured new images of Comet 3I/ATLAS after it reemerged from behind the sun on its path out of the solar system. The data were collected during a Shadow the Scientists session—a unique outreach initiative that invites students around the world to join researchers as they observe the universe on the world’s most advanced telescopes.

On 26 November 2025, scientists used the Gemini Multi-Object Spectrograph (GMOS) on Gemini North on Maunakea in Hawai’i to image Comet 3I/ATLAS, the third interstellar object ever detected. The new observations reveal how the comet has changed since its closest approach to the sun. Gemini North is one half of the International Gemini Observatory and is operated by NOIRLab.

After emerging from behind the sun, 3I/ATLAS reappeared in the sky close to Zaniah, a triple-star system in the constellation Virgo. The observations were made during a public outreach event organized by NOIRLab in collaboration with Shadow the Scientists, which connects the public with scientists through authentic research experiences such as observing sessions on world-class telescopes. The scientific program was led by Bryce Bolin, a research scientist at Eureka Scientific.

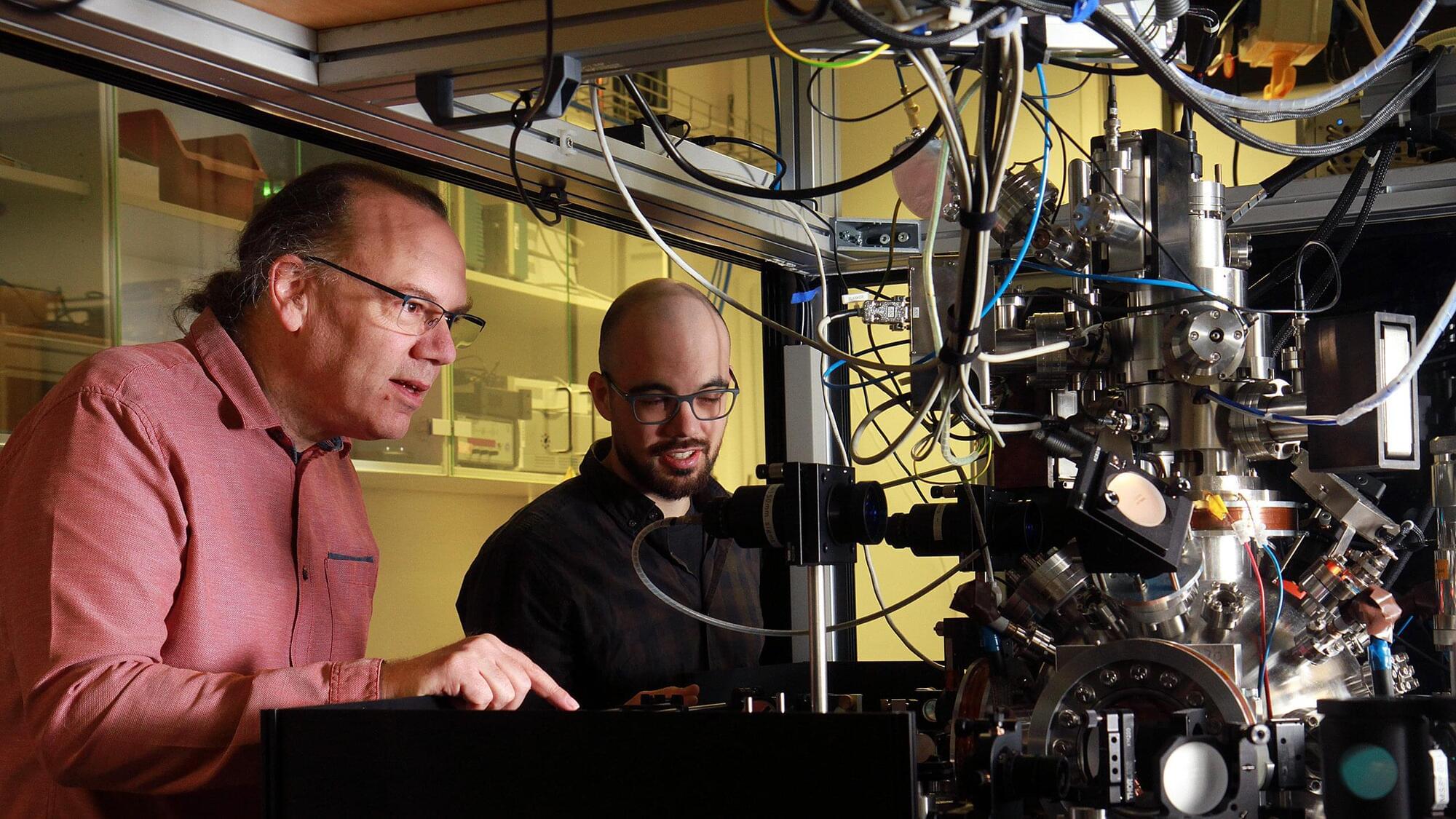

The microscopic processes taking place in superconductors are difficult to observe directly. Researchers at the RPTU University of Kaiserslautern-Landau have therefore implemented a quantum simulation of the Josephson effect: they separated two Bose-Einstein condensates (BECs) with an extremely thin optical barrier.

The characteristic Shapiro steps were observed in the atomic system. The research was published in the journal Science.

Two superconductors separated by a wafer-thin insulating layer: that is all a Josephson junction is. Yet despite its simple structure, it harbors a quantum mechanical effect that has become one of the most important tools of modern technology. Josephson junctions form the heart of many quantum computers and enable high-precision measurements, such as the detection of very weak magnetic fields.
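The Shapiro steps mentioned above follow from the AC Josephson relation: a junction driven at frequency f phase-locks so that its DC voltage sits on plateaus V_n = n·h·f/(2e). For a neutral-atom junction, assuming the standard relation ħ·dφ/dt = −Δμ, the analogous plateaus appear in the chemical-potential difference, Δμ_n = n·h·f. The sketch below is our illustration, not code from the study. It computes these step positions and integrates the textbook overdamped resistively shunted junction (RSJ) model in normalized units. The function names and all parameter values are hypothetical.

```python
import math

# Exact SI constants (2019 redefinition)
PLANCK_H = 6.62607015e-34            # J*s
ELEMENTARY_CHARGE = 1.602176634e-19  # C


def shapiro_step_voltage(n: int, drive_freq_hz: float) -> float:
    """n-th Shapiro step of a superconducting junction: V_n = n*h*f / (2e), in volts."""
    return n * PLANCK_H * drive_freq_hz / (2 * ELEMENTARY_CHARGE)


def atomic_step_delta_mu(n: int, drive_freq_hz: float) -> float:
    """Neutral-atom analogue (assumes hbar*dphi/dt = -delta_mu): delta_mu_n = n*h*f, in joules."""
    return n * PLANCK_H * drive_freq_hz


def rsj_mean_voltage(i_dc: float, i_ac: float = 0.0, omega: float = 1.0,
                     dt: float = 0.01, t_settle: float = 200.0,
                     t_avg: float = 1000.0) -> float:
    """Time-averaged normalized voltage <dphi/dt> of an overdamped RSJ junction.

    Hypothetical textbook model, normalized so currents are in units of I_c and
    voltages in units of I_c*R:  dphi/dt = i_dc + i_ac*sin(omega*t) - sin(phi).
    Shapiro steps appear as plateaus where the result locks to n*omega.
    """
    def rhs(t: float, phi: float) -> float:
        return i_dc + i_ac * math.sin(omega * t) - math.sin(phi)

    n_settle = int(round(t_settle / dt))
    n_avg = int(round(t_avg / dt))
    t, phi, phi_start = 0.0, 0.0, 0.0
    for step in range(n_settle + n_avg):
        if step == n_settle:
            phi_start = phi  # discard the initial transient
        # Classic fourth-order Runge-Kutta step
        k1 = rhs(t, phi)
        k2 = rhs(t + dt / 2, phi + dt * k1 / 2)
        k3 = rhs(t + dt / 2, phi + dt * k2 / 2)
        k4 = rhs(t + dt, phi + dt * k3)
        phi += dt * (k1 + 2 * k2 + 2 * k3 + k4) / 6
        t += dt
    # Average phase velocity over the averaging window = mean normalized voltage
    return (phi - phi_start) / (n_avg * dt)


if __name__ == "__main__":
    # Step spacing for a 10 GHz drive is about 20.7 microvolts
    print(f"V_1 at 10 GHz: {shapiro_step_voltage(1, 10e9) * 1e6:.2f} uV")
    # Sweep the bias current with a drive on; plateaus show up where <v>/omega is an integer
    for i_dc in (0.5, 1.0, 1.5, 2.0):
        v = rsj_mean_voltage(i_dc, i_ac=1.0, omega=1.0, t_avg=300.0)
        print(f"i_dc={i_dc:.1f}  <v>/omega={v / 1.0:.3f}")
```

Without a drive, the model reproduces the familiar result that the junction stays at zero voltage for i_dc < 1 and follows ⟨v⟩ = √(i_dc² − 1) above it. Adding the drive is what produces the locked plateaus.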

Researchers from Japan report that femtosecond laser-induced periodic surface structures can be used to control thermal conductivity in thin-film solids. Their method, which leverages high-speed laser ablation, produces parallel nanoscale grooves with a throughput 1,000 times higher than that of conventional approaches, and these grooves strategically alter phonon scattering in the material.

This scalable and semiconductor-ready approach could make it possible to mass-produce thermal engineering structures while maintaining laboratory-level precision.
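The phonon-scattering mechanism described above can be illustrated with a deliberately crude gray kinetic-theory model. This model is our assumption, not the authors' analysis: thermal conductivity is k = (1/3)·C·v·ℓ, and the grooves add a boundary-scattering length that is combined with the bulk phonon mean free path through Matthiessen's rule, 1/ℓ_eff = 1/ℓ_bulk + 1/ℓ_boundary. The function names and every number below are hypothetical placeholders.

```python
def effective_mean_free_path(bulk_mfp_m: float, boundary_length_m: float) -> float:
    """Matthiessen's rule: scattering rates add, so 1/l_eff = 1/l_bulk + 1/l_boundary."""
    return 1.0 / (1.0 / bulk_mfp_m + 1.0 / boundary_length_m)


def kinetic_conductivity(heat_capacity: float, group_velocity: float, mfp_m: float) -> float:
    """Gray kinetic theory: k = (1/3) * C * v * l.

    heat_capacity in J/(m^3 K), group_velocity in m/s, mfp_m in m; returns W/(m K).
    """
    return heat_capacity * group_velocity * mfp_m / 3.0


if __name__ == "__main__":
    # Hypothetical film parameters (illustrative only, not taken from the study)
    C = 1.6e6        # volumetric heat capacity, J/(m^3 K)
    v = 6000.0       # average phonon group velocity, m/s
    l_bulk = 300e-9  # bulk phonon mean free path, m
    # Hypothetical boundary-scattering lengths imposed by the grooves
    for l_boundary in (1e-6, 300e-9, 100e-9):
        l_eff = effective_mean_free_path(l_bulk, l_boundary)
        k = kinetic_conductivity(C, v, l_eff)
        print(f"l_boundary={l_boundary * 1e9:.0f} nm  k={k:.1f} W/(m K)  k/k_bulk={l_eff / l_bulk:.2f}")
```

A single gray mean free path ignores the broad phonon spectrum and the direction dependence that parallel grooves introduce, so treat this only as a qualitative picture: the shorter the boundary length relative to ℓ_bulk, the lower k.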

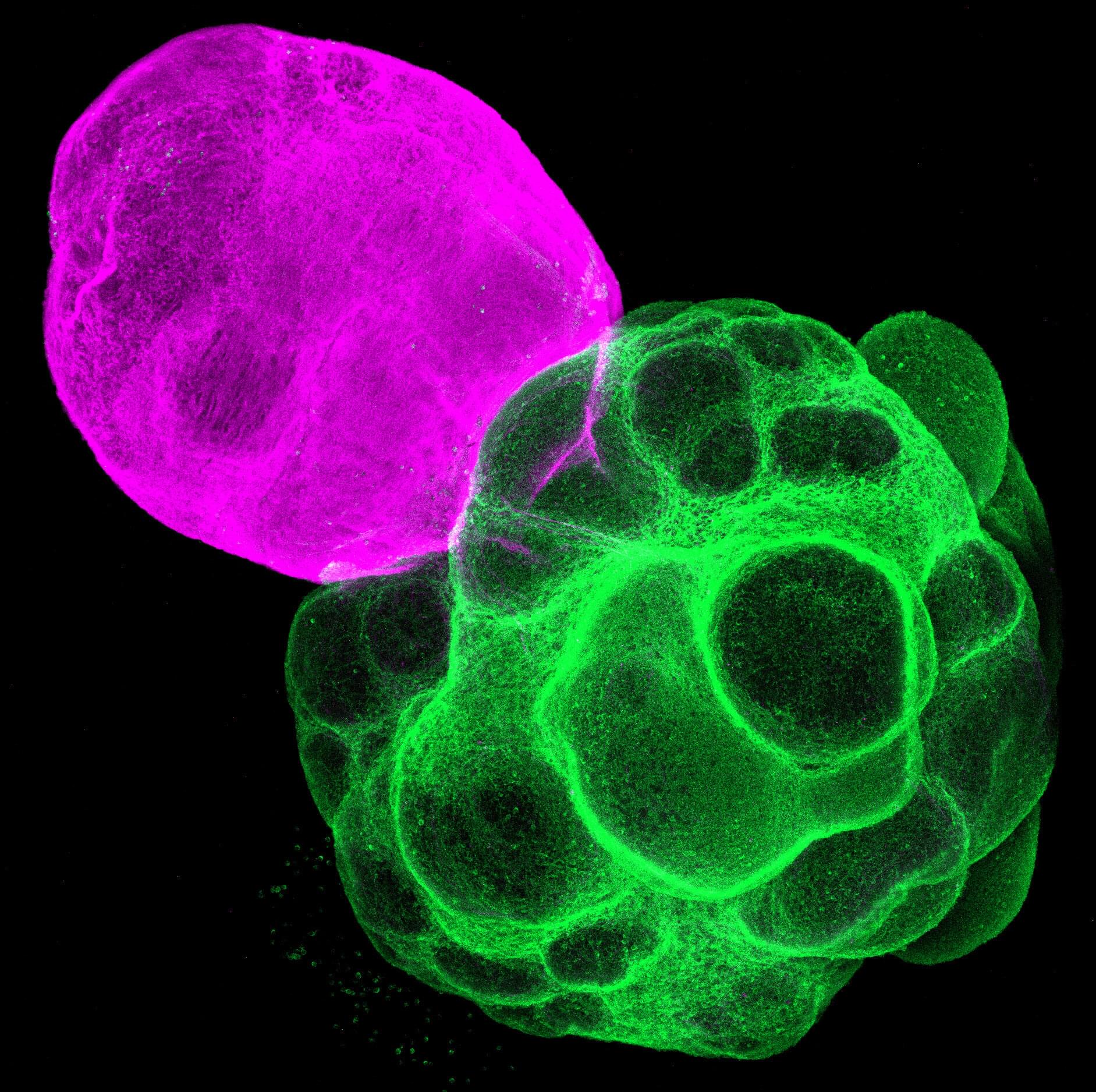

A Japanese research team has reproduced human neural circuits in vitro using multi-region miniature organs, known as assembloids, derived from induced pluripotent stem (iPS) cells. Using this model, the team demonstrated that the thalamus plays a crucial role in shaping cell type-specific neural circuits in the human cerebral cortex.

These findings were published in the journal Proceedings of the National Academy of Sciences.

Our brain’s cerebral cortex contains many types of neurons, and effective communication among these neurons and with other brain regions is crucial for functions such as perception and cognition.

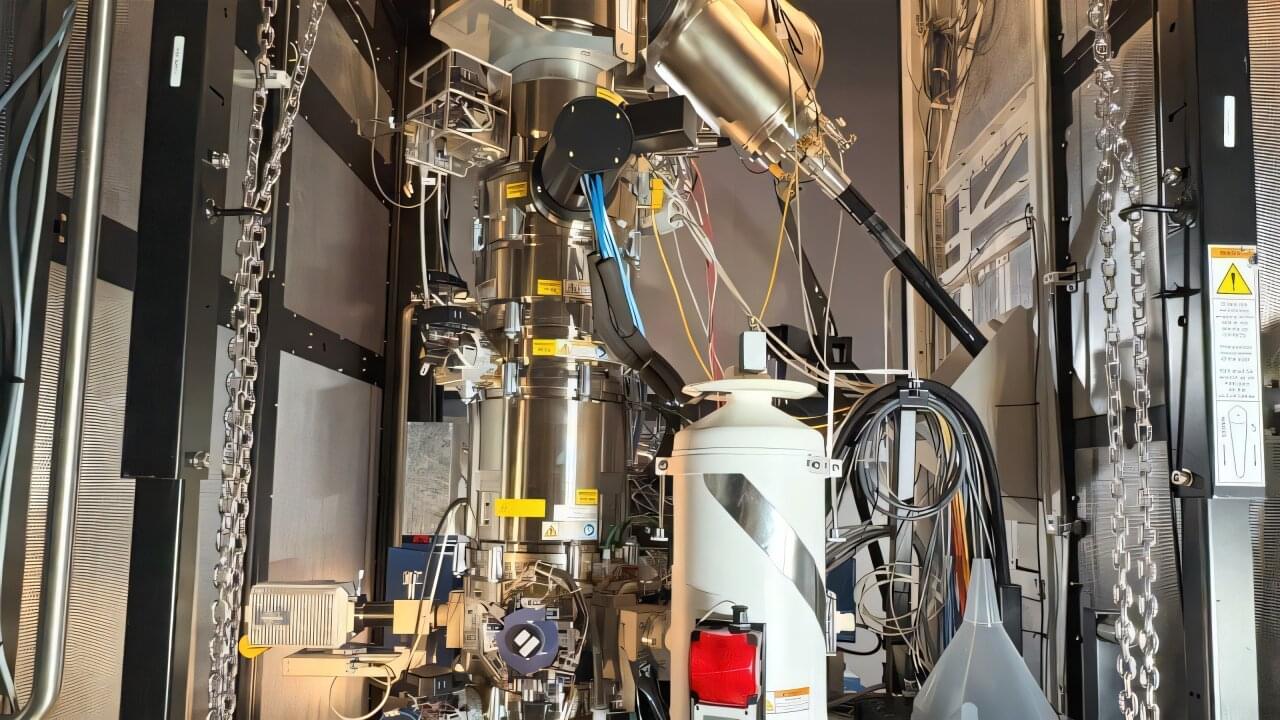

Researchers have discovered how to design and place single-photon sources at the atomic scale inside ultrathin 2D materials, lighting the path for future quantum innovations.

Like perfectly controlled light switches, quantum emitters can turn on the flow of single particles of light, called photons, one at a time. These tiny switches—the “bits” of many quantum technologies—are created by atomic-scale defects in materials.

Their ability to produce light with such precision makes them essential for the future of quantum technologies, including quantum computing, secure communication and ultraprecise sensing. But finding and controlling these atomic light switches has been a major scientific challenge—until now.
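The text above does not spell out the standard quantitative meaning of "one at a time." It is the zero-delay second-order correlation g⁽²⁾(0) = ⟨n(n−1)⟩/⟨n⟩², measured with a Hanbury Brown and Twiss setup. Ideal single-photon light gives 0, laser (Poissonian) light gives 1, and thermal light gives 2; g⁽²⁾(0) < 0.5 is the usual criterion for a single emitter. The sketch below computes g⁽²⁾(0) from a photon-number distribution. It illustrates this general figure of merit and is not taken from the study described here. The function names are hypothetical.

```python
import math


def g2_zero(p: list[float]) -> float:
    """g2(0) = <n(n-1)> / <n>^2 for photon-number probabilities p[n] (normalized internally)."""
    total = sum(p)
    mean = sum(n * pn for n, pn in enumerate(p)) / total
    second_factorial = sum(n * (n - 1) * pn for n, pn in enumerate(p)) / total
    return second_factorial / mean ** 2


def poisson(mu: float, n_max: int) -> list[float]:
    """Truncated Poisson distribution (coherent/laser light) with mean mu."""
    return [math.exp(-mu) * mu ** n / math.factorial(n) for n in range(n_max + 1)]


def thermal(mu: float, n_max: int) -> list[float]:
    """Truncated Bose-Einstein distribution (thermal light) with mean mu."""
    return [mu ** n / (1 + mu) ** (n + 1) for n in range(n_max + 1)]


if __name__ == "__main__":
    print("ideal single photon:", g2_zero([0.0, 1.0]))          # 0.0
    print("lossy single photon:", g2_zero([0.7, 0.3]))          # 0.0: loss adds vacuum, not pairs
    print("two-photon Fock state:", g2_zero([0.0, 0.0, 1.0]))   # 0.5
    print("coherent light:", round(g2_zero(poisson(2.0, 60)), 6))
    print("thermal light:", round(g2_zero(thermal(1.0, 200)), 6))
```

Because optical loss only mixes in vacuum, g⁽²⁾(0) stays at 0 for a lossy single-photon source. Any multiphoton emission, such as from a second nearby defect, pushes it upward.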

Researchers at LMU have uncovered how ribosomes, the cell’s protein builders, also act as early warning sensors when something goes wrong inside a cell.

When protein production is disrupted and ribosomes begin to collide, a molecule called ZAK detects the pileup and switches on protective stress responses.

Ribosomes as protein builders and stress sensors.