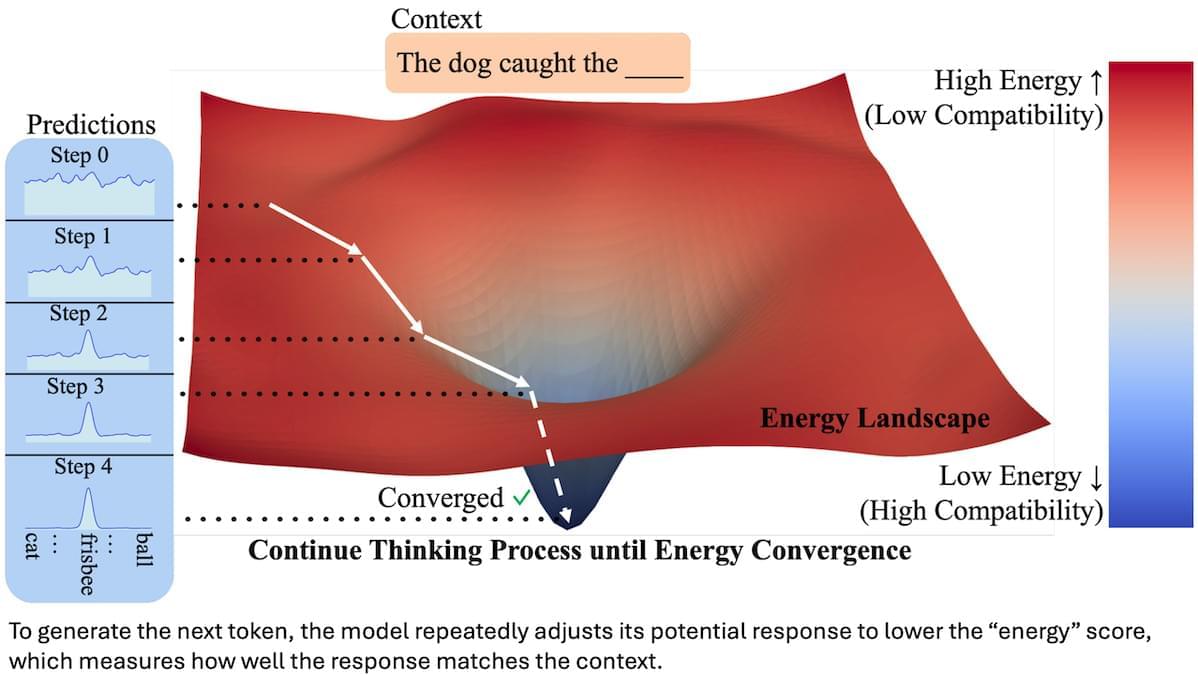

Inside the microchips powering your devices, atoms aren’t just randomly scattered. They follow a hidden order that can change how semiconductors behave.

A team of researchers from the Lawrence Berkeley National Laboratory (Berkeley Lab) and George Washington University has, for the first time, directly observed these atomic-scale patterns, known as short-range order (SRO), in semiconductors.

The discovery matters because understanding how atoms naturally arrange themselves could let researchers design materials with tailored electronic properties. That level of control could advance quantum computing, brain-inspired neuromorphic devices, and advanced optical detectors.