Artificial intelligence (AI) is said to be a “black box,” with its logic obscured from human understanding—but how much does the average user actually care to know how AI works?

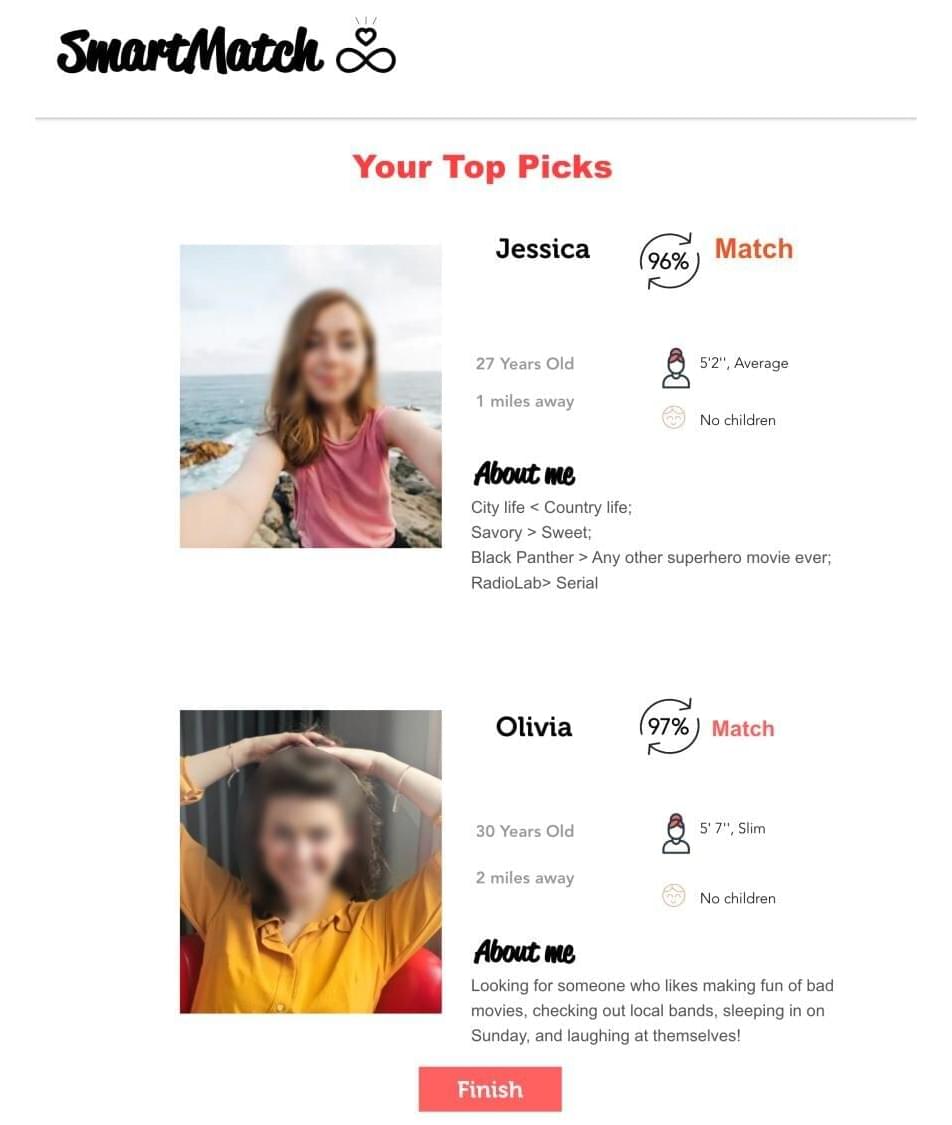

It depends on the extent to which a system meets users’ expectations, according to a new study by a team that includes Penn State researchers. Using a fabricated algorithm-driven dating website, the team found that whether the system met, exceeded or fell short of user expectations directly corresponded to how much users trusted the AI and how much they wanted to know about how it worked.

The findings are published in the journal Computers in Human Behavior.