Security vulnerabilities were uncovered in Chainlit, a popular open-source artificial intelligence (AI) framework, that could allow attackers to steal sensitive data, potentially enabling lateral movement within a susceptible organization.

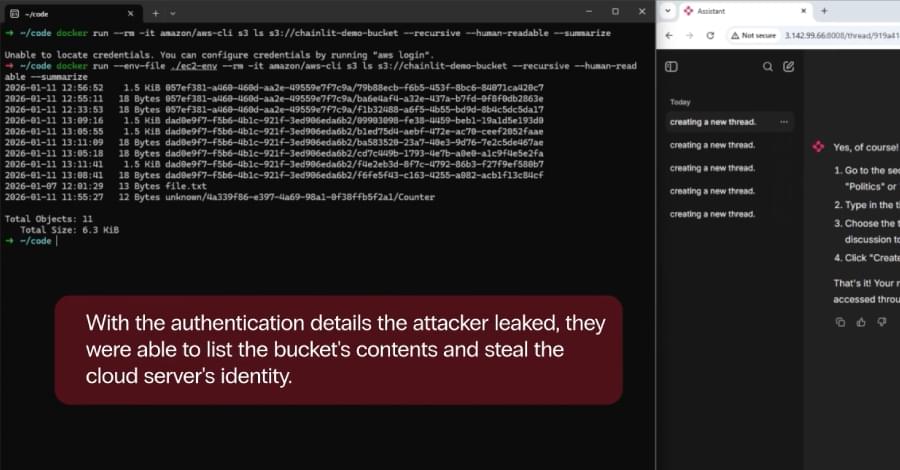

Zafran Security said the high-severity flaws, collectively dubbed ChainLeak, could be abused to leak cloud environment API keys and steal sensitive files, or perform server-side request forgery (SSRF) attacks against servers hosting AI applications.

Chainlit is a framework for creating conversational chatbots. According to statistics shared by the Python Software Foundation, the package has been downloaded more than 220,000 times in the past week and has attracted a total of 7.3 million downloads to date.