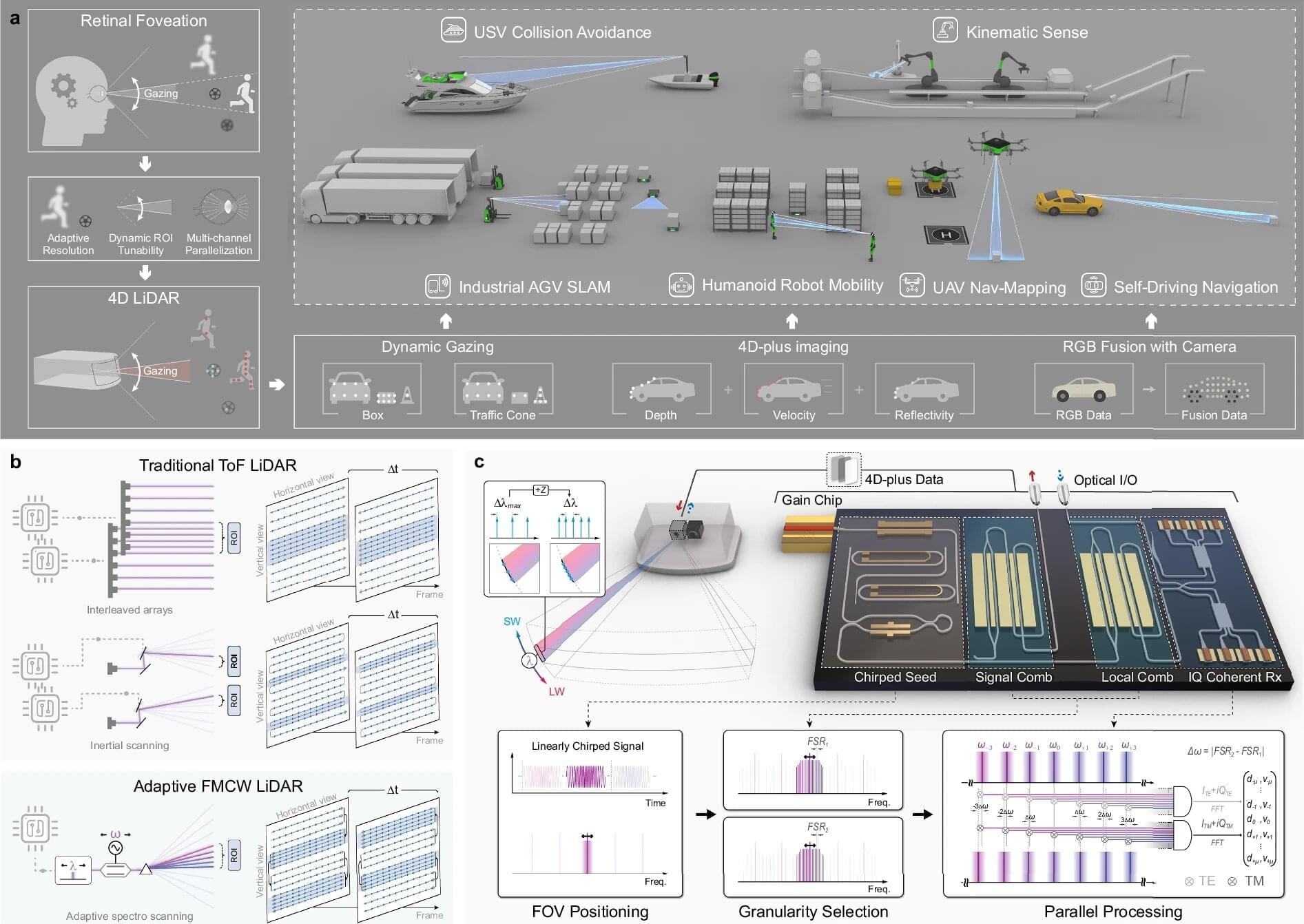

In a recent study, researchers from China have developed a chip-scale LiDAR system that mimics the human eye’s foveation by dynamically concentrating high-resolution sensing on regions of interest (ROIs) while maintaining broad awareness across the full field of view.

The study is published in the journal Nature Communications.

LiDAR systems power machine vision in self-driving cars, drones, and robots by firing laser beams to map 3D scenes with millimeter precision. The human eye takes a different approach: it packs its densest photoreceptors into the fovea, the small central spot responsible for sharp vision, and shifts its gaze toward whatever matters most. Most LiDARs, by contrast, use rigid parallel beams or fixed scan patterns that spread uniform, and often coarse, resolution across the entire scene. Boosting detail therefore means adding more channels everywhere, which sharply drives up cost, power consumption, and system complexity.
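The cost argument can be made concrete with a toy one-dimensional sketch. It is an illustration of the general foveation idea, not the study's method. It allocates beam angles across a hypothetical 120° field of view, using fine 0.05° spacing inside a 10° region of interest and coarse 0.5° spacing everywhere else. The function name `foveated_angles` and all of the numbers are illustrative assumptions. Angles are stored as integer millidegrees to avoid floating-point rounding.

```python
def foveated_angles(fov_start, fov_end, roi_start, roi_end, fine_step, coarse_step):
    """Return sorted beam angles in millidegrees (hypothetical toy model).

    Fine spacing inside the half-open ROI [roi_start, roi_end),
    coarse spacing in the periphery on either side of it.
    """
    assert fov_start <= roi_start < roi_end <= fov_end, "ROI must lie inside the FOV"
    angles = list(range(fov_start, roi_start, coarse_step))  # left periphery
    angles += range(roi_start, roi_end, fine_step)            # "fovea": dense sampling
    angles += range(roi_end, fov_end, coarse_step)            # right periphery
    return angles


# Assumed parameters: 120 deg FOV, 10 deg ROI, 0.05 deg fine step, 0.5 deg coarse step.
uniform = list(range(-60_000, 60_000, 50))                    # fine resolution everywhere
centered = foveated_angles(-60_000, 60_000, -5_000, 5_000, 50, 500)
shifted = foveated_angles(-60_000, 60_000, 20_000, 30_000, 50, 500)  # "gaze" moved right

print(len(uniform))   # → 2400
print(len(centered))  # → 420
print(len(shifted))   # → 420
```

In this toy model, uniform fine coverage needs 2,400 beams, while the foveated pattern needs 420 and keeps the same fine spacing inside the region of interest. Moving the region of interest changes where the dense samples land but not how many beams are used. That is the intuition behind steering resolution instead of adding channels uniformly.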