For people, matching what they see on the ground to a map is second nature. For computers, it has been a major challenge. A Cornell research team has introduced a new method that helps machines make these connections—an advance that could improve robotics, navigation systems, and 3D modeling.

The work, presented at the 2025 Conference on Neural Information Processing Systems and published on the arXiv preprint server, tackles a major weakness in today’s computer vision tools. Current systems perform well when comparing similar images, but they falter when the views differ dramatically, such as linking a street-level photo to a simple map or architectural drawing.

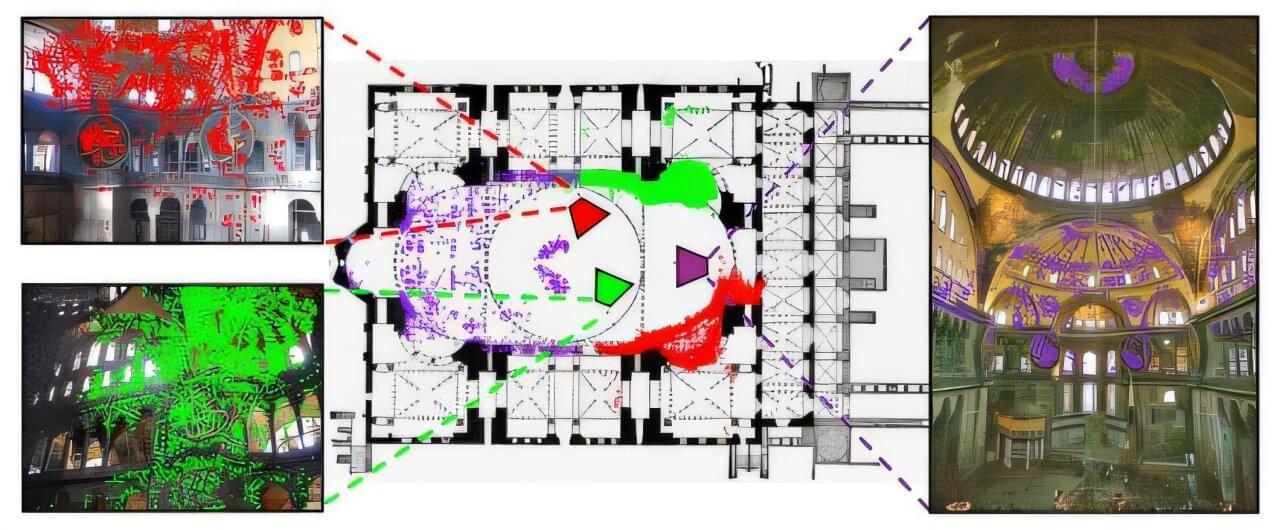

The new approach teaches machines to find pixel-level matches between a photo and a floor plan, even when the two look completely different. Kuan Wei Huang, a doctoral student in computer science, is the first author; the co-authors are Noah Snavely, a professor at Cornell Tech; Bharath Hariharan, an associate professor at the Cornell Ann S. Bowers College of Computing and Information Science; and Brandon Li, an undergraduate computer science student.