Chances are that you have unknowingly encountered compelling online content that was created, wholly or in part, by some version of a Large Language Model (LLM). As LLM-powered tools such as ChatGPT and Google Gemini become more proficient at generating near-human-quality writing, it has become harder to distinguish purely human writing from content that was modified or entirely generated by LLMs.

This spike in questionable authorship has raised concerns in the academic community that AI-generated content has been quietly creeping into peer-reviewed publications.

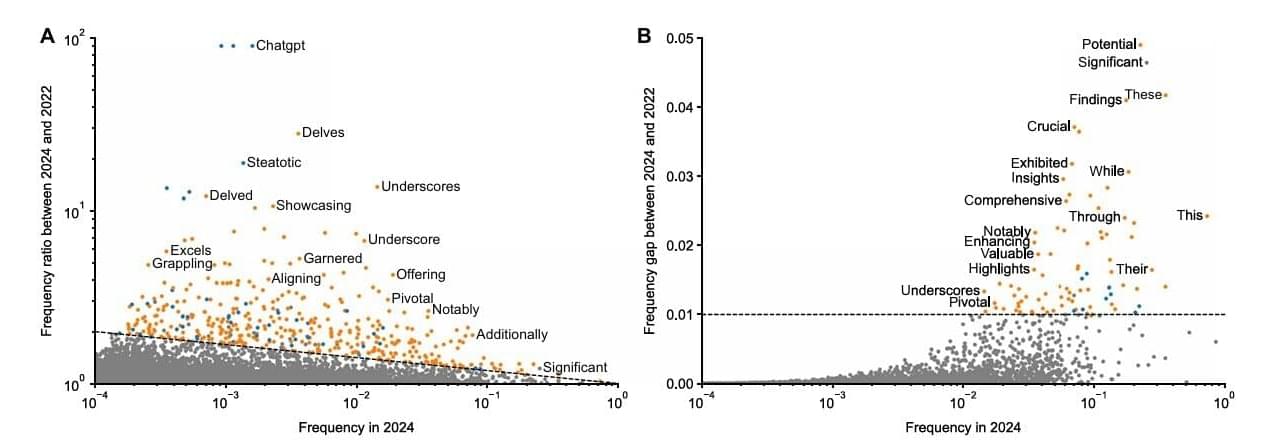

To shed light on just how widespread LLM content is in academic writing, a team of U.S. and German researchers analyzed more than 15 million biomedical abstracts on PubMed to determine whether LLMs have had a detectable impact on specific word choices in journal articles.