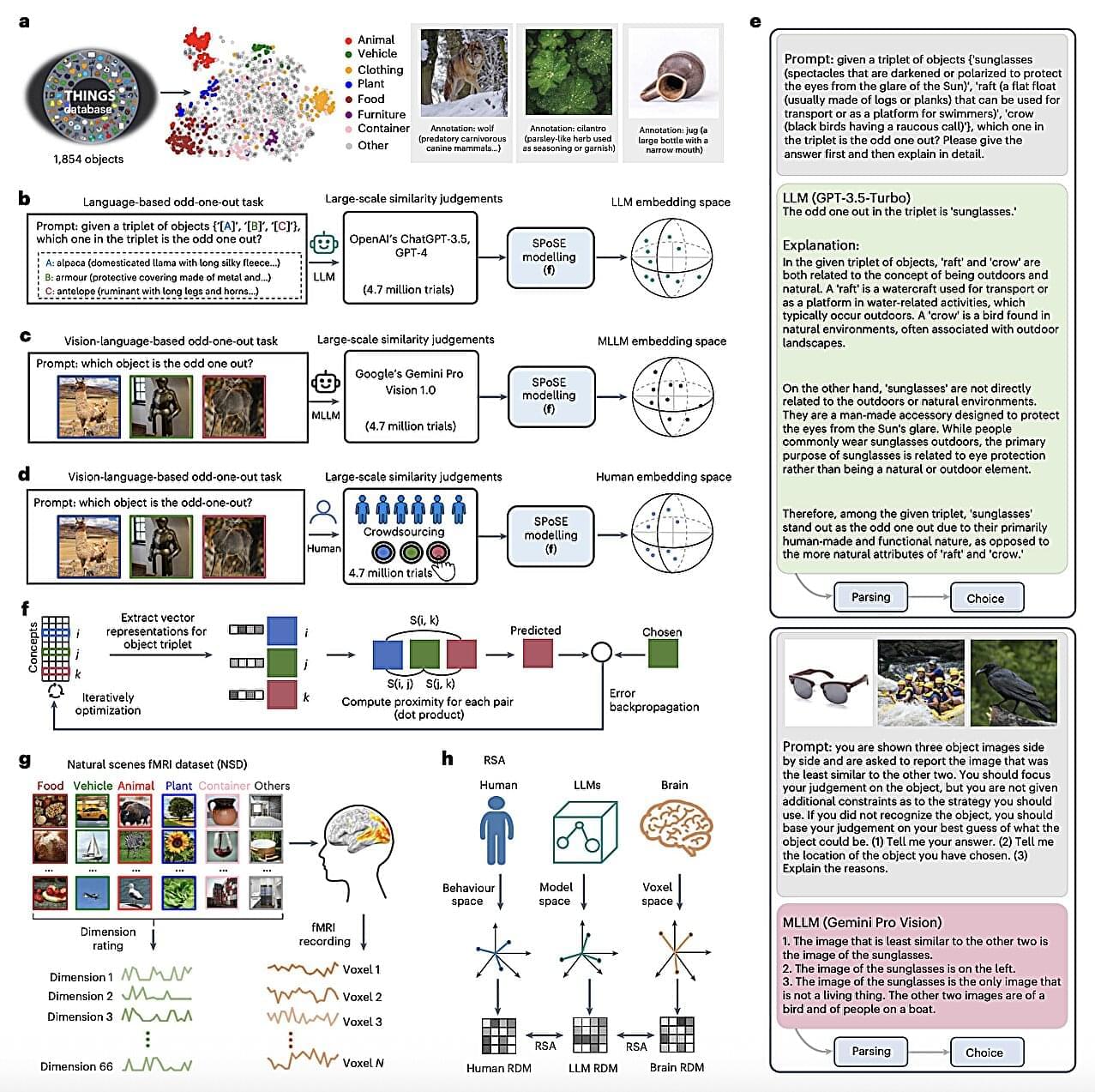

A better understanding of how the human brain represents natural objects, such as rocks, plants and animals, could have implications for research in psychology, neuroscience and computer science. Specifically, it could shed new light on how humans interpret sensory information and complete real-world tasks, which could in turn inform the development of artificial intelligence (AI) techniques that closely emulate biological and mental processes.

Multimodal large language models (LLMs), such as the latest models underpinning the popular conversational platform ChatGPT, have proved highly effective at analyzing and generating text in various human languages, as well as images and even short videos.

Because the texts and images these models generate are often convincing enough to pass for human-created content, multimodal LLMs could be interesting experimental tools for studying the underpinnings of object representations.