Deep learning models, such as convolutional neural networks (CNNs) and recurrent neural networks (RNNs), are designed to partly emulate the structure and function of biological neural networks. As a result, in addition to tackling various real-world computational problems, they could help neuroscientists and psychologists better understand the underpinnings of specific sensory or cognitive processes.

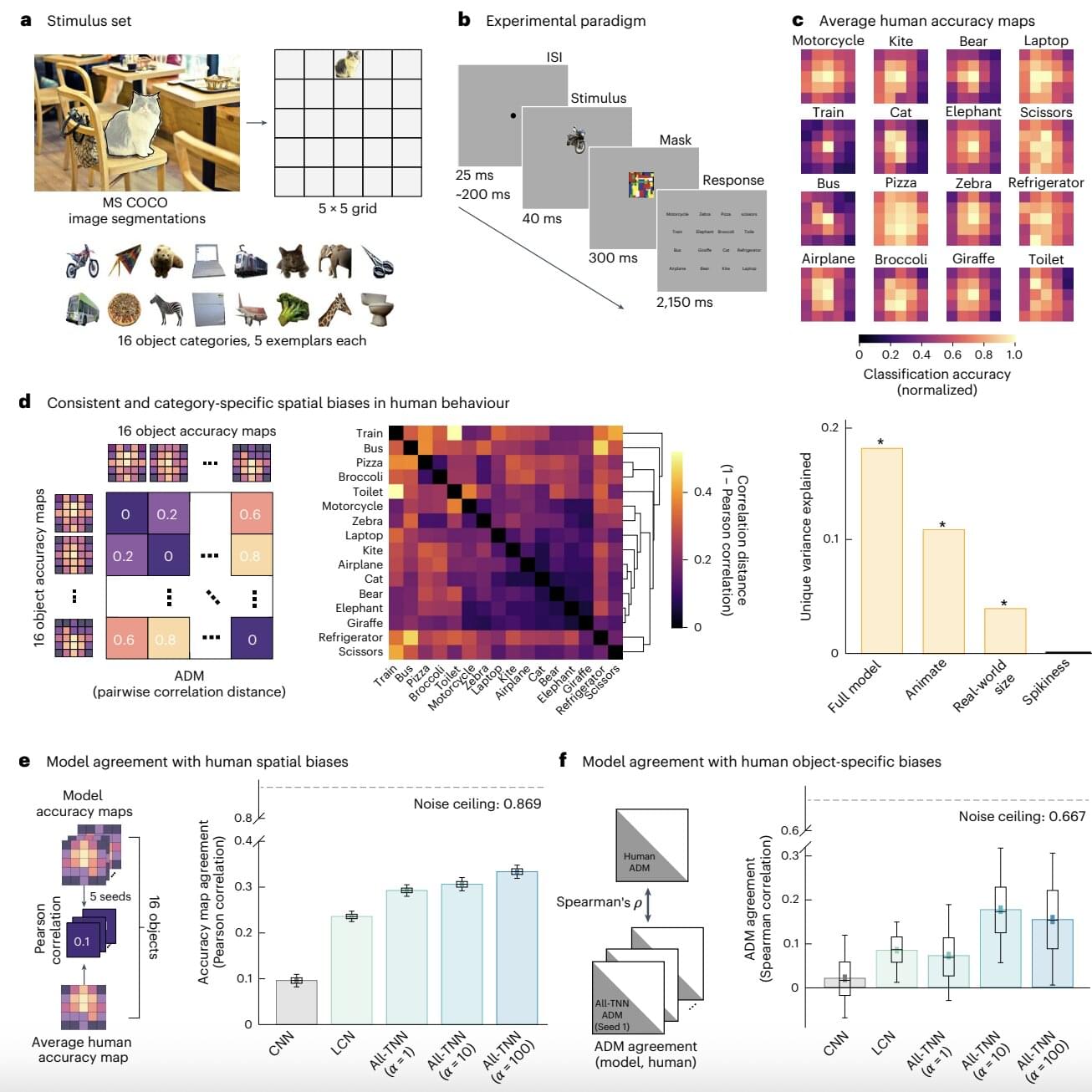

Researchers at Osnabrück University, Freie Universität Berlin and other institutes recently developed a new class of artificial neural networks (ANNs) that could mimic the human visual system better than CNNs and other existing deep learning algorithms. Their newly proposed models, inspired by the visual system and dubbed all-topographic neural networks (All-TNNs), are introduced in a paper published in Nature Human Behaviour.

“Previously, the most powerful models for understanding how the brain processes visual information were derived off of AI vision models,” Dr. Tim Kietzmann, senior author of the paper, told Tech Xplore.