Bioinformaticians at Friedrich-Alexander-Universität Erlangen-Nürnberg (FAU) and the Helmholtz Institute for Pharmaceutical Research Saarland (HIPS) have developed a new tool to better assess the performance of AI models.

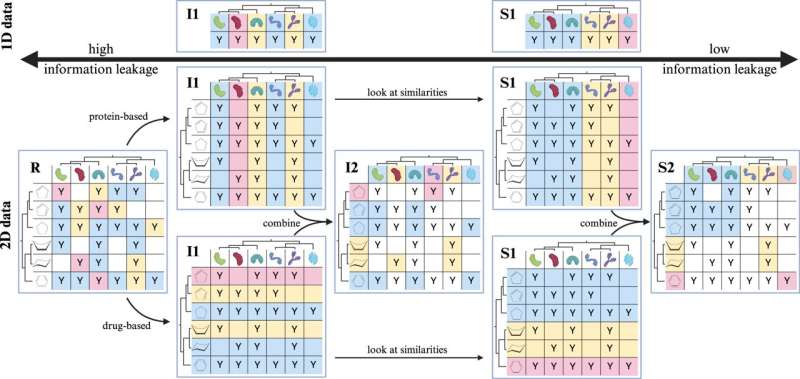

“DataSAIL” automatically sorts data into training and test sets that differ as much as possible from each other, making it possible to evaluate whether AI models still work reliably on data that differ from their training data. The researchers have now presented their approach in the journal Nature Communications.

Machine learning models are trained with huge amounts of data and must be tested before practical use. To do this, the data must first be divided into a larger training set and a smaller test set: the model learns from the former, and the latter is used to check its reliability.
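The conventional division described above can be illustrated with a minimal sketch. This is a plain random split, not DataSAIL's approach; the function name `random_split`, the 80/20 ratio, and the fixed seed are assumptions chosen purely for illustration.

```python
import random


def random_split(samples, test_fraction=0.2, seed=0):
    """Shuffle the samples and divide them into a larger training set
    and a smaller test set (hypothetical helper, for illustration only)."""
    if not 0 < test_fraction < 1:
        raise ValueError("test_fraction must be between 0 and 1")

    shuffled = list(samples)
    # A seeded generator makes the split reproducible.
    random.Random(seed).shuffle(shuffled)

    # Reserve the first part of the shuffled data for testing.
    n_test = max(1, round(len(shuffled) * test_fraction))
    test = shuffled[:n_test]
    train = shuffled[n_test:]
    return train, test


train, test = random_split(range(100))
print(len(train), len(test))  # prints "80 20"
```

A random split like this makes no attempt to keep similar samples out of both sets, so the test set may closely resemble the training set. That is the situation a tool that separates the two sets by dissimilarity is meant to avoid.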