Listen to the first notes of an old, beloved song. Can you name that tune? If you can, congratulations—it’s a triumph of your associative memory, in which one piece of information (the first few notes) triggers the memory of the entire pattern (the song), without you actually having to hear the rest of the song again. We use this handy neural mechanism to learn, remember, solve problems and generally navigate our reality.

“It’s a network effect,” said UC Santa Barbara mechanical engineering professor Francesco Bullo, explaining that associative memories aren’t stored in single brain cells. “Memory storage and memory retrieval are dynamic processes that occur over entire networks of neurons.”

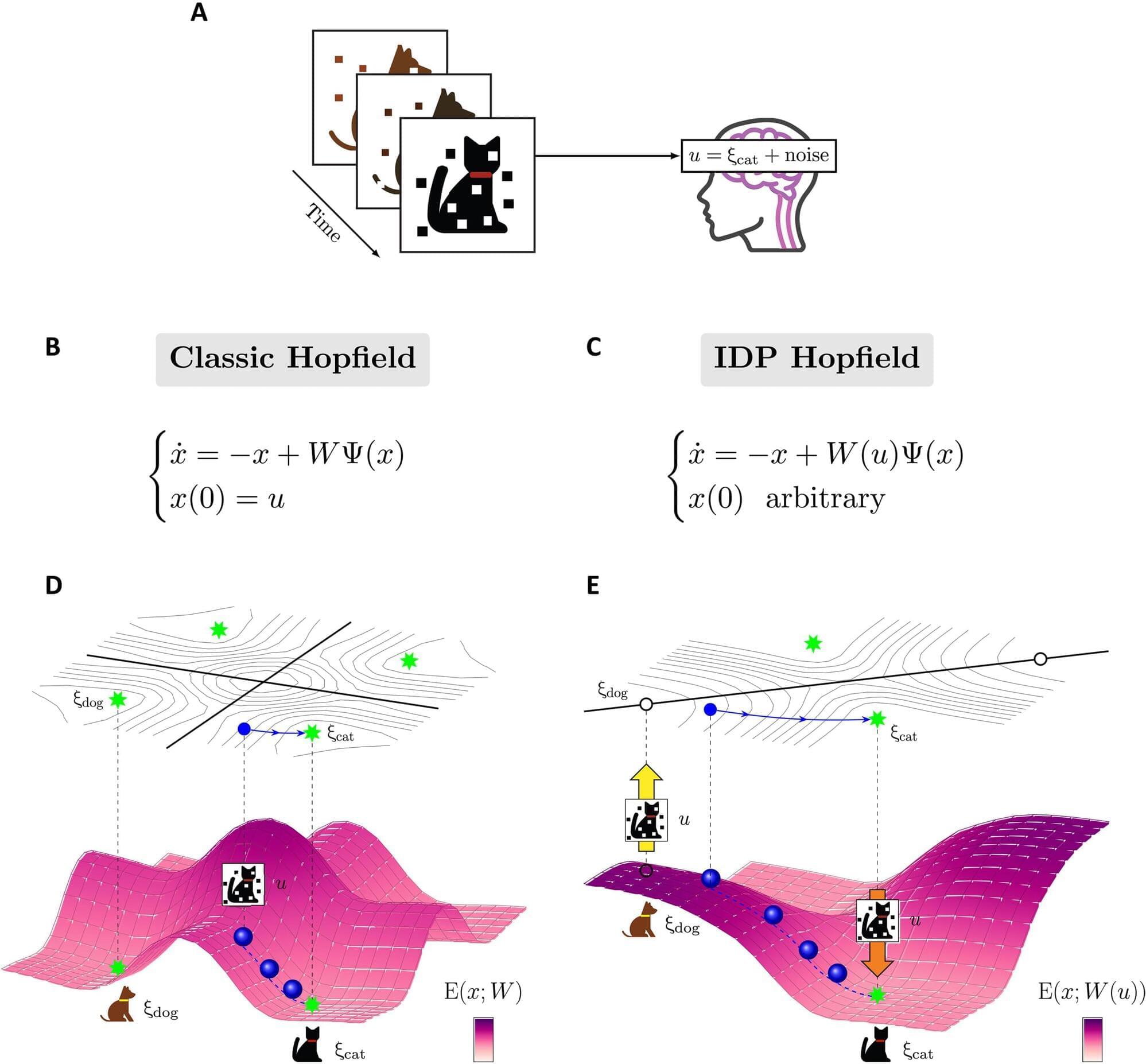

In 1982, physicist John Hopfield translated this theoretical neuroscience concept into the artificial intelligence realm by formulating the Hopfield network. In doing so, he not only provided a mathematical framework for understanding memory storage and retrieval in the human brain, but also developed one of the first recurrent artificial neural networks, known for its ability to retrieve complete patterns from noisy or incomplete inputs. Hopfield won the 2024 Nobel Prize in Physics for this work.
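
To make "retrieving a complete pattern from a noisy input" concrete, here is a minimal sketch of a classical binary Hopfield network. It assumes the textbook formulation (units that take values +1 or -1, Hebbian weights, asynchronous sign updates), and the function names `train_hebbian` and `recall` are illustrative choices, not taken from any particular library or from the researchers quoted here.

```python
import numpy as np

def train_hebbian(patterns):
    """Build a weight matrix from rows of +1/-1 patterns (Hebbian rule)."""
    patterns = np.asarray(patterns, dtype=float)
    n_units = patterns.shape[1]
    weights = patterns.T @ patterns / n_units
    np.fill_diagonal(weights, 0.0)  # assumption: no self-connections
    return weights

def recall(weights, probe, max_sweeps=10):
    """Update one unit at a time until a full sweep changes nothing."""
    state = np.array(probe, dtype=float)
    for _ in range(max_sweeps):
        previous = state.copy()
        for i in range(state.size):
            # Each unit aligns with the weighted input from all other units.
            state[i] = 1.0 if weights[i] @ state >= 0 else -1.0
        if np.array_equal(state, previous):
            break
    return state

# Two stored "memories" (toy 8-unit patterns chosen to be orthogonal).
stored = np.array([
    [1, 1, 1, 1, -1, -1, -1, -1],
    [1, -1, 1, -1, 1, -1, 1, -1],
])
weights = train_hebbian(stored)

# Corrupt the first memory by flipping one unit, then let the network settle.
noisy = stored[0].copy()
noisy[0] = -noisy[0]
recovered = recall(weights, noisy)
```

In this toy setup the corrupted probe settles back onto the first stored pattern, much as a few notes can bring back the whole song. With more stored patterns, or patterns that overlap heavily, recall can fail or land on a spurious state.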