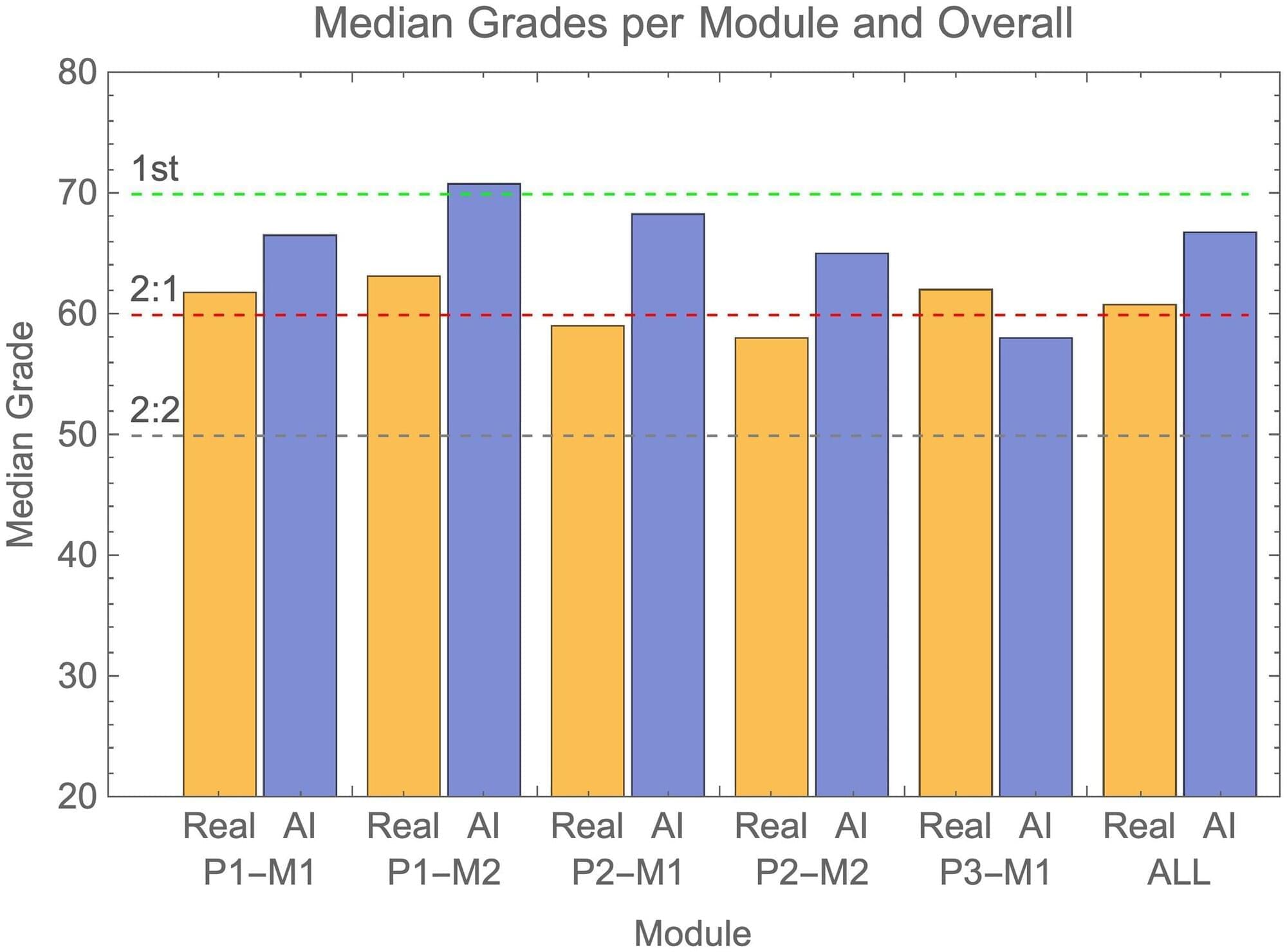

In a test of the examinations system of the University of Reading in the UK, artificial intelligence (AI)-generated submissions went almost entirely undetected, and these fake answers tended to receive higher grades than those achieved by real students. Peter Scarfe of the University of Reading and colleagues present these findings in the open-access journal PLOS ONE on June 26.

In recent years, AI tools such as ChatGPT have become more advanced and widespread, leading to concerns about students using them to cheat by submitting AI-generated work as their own. Such concerns are heightened by the fact that many universities and schools transitioned from supervised in-person exams to unsupervised take-home exams during the COVID-19 pandemic, with many now continuing such models. Tools for detecting AI-generated written text have so far not proven very successful.

To better understand these issues, Scarfe and colleagues used the AI chatbot GPT-4 to generate answers written entirely by the AI, and submitted them on behalf of 33 fake students to the examinations system of the School of Psychology and Clinical Language Sciences at the University of Reading. Exam graders were unaware of the study.