A team of AI researchers at the University of California, Los Angeles, working with a colleague from Meta AI, has introduced d1, a framework that uses reinforcement learning to improve diffusion-based large language models (dLLMs). The group has posted a paper describing their work and the framework's features on the arXiv preprint server.

Over the past couple of years, the use of large language models (LLMs) has skyrocketed, with millions of people around the world using AI apps for a wide variety of tasks. That growth has created a corresponding demand for electricity to power the data centers that run these compute-intensive applications. Researchers have been looking for other ways to deliver AI services, and one such approach uses dLLMs, either as a replacement for or a complement to conventional LLMs.

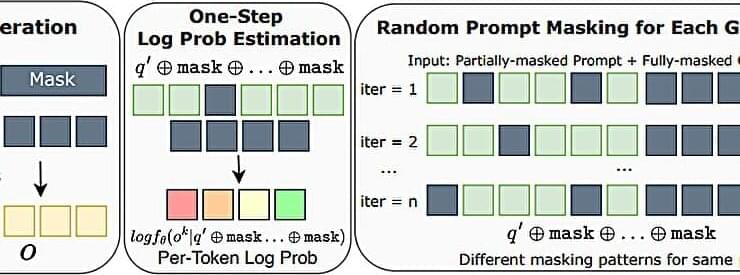

Diffusion-based LLMs (dLLMs) are AI models that arrive at answers differently than conventional LLMs. Instead of taking the autoregressive approach, generating text one token at a time from left to right, they use diffusion: they start from a fully corrupted version of the output and refine it over a series of steps. Diffusion models were originally used to generate images. They were trained by adding noise to an image until the original was overwhelmed and then learning to reverse the process, step by step, until nothing was left but the original image.
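To make the contrast concrete, here is a toy sketch of the two decoding styles. It assumes the "masked diffusion" variant common for text, in which the noise is a sequence of mask tokens rather than image-style pixel noise; it is not d1's implementation. The names `toy_model`, `autoregressive_decode`, and `masked_diffusion_decode` are hypothetical, and the "model" is an oracle that simply reads the correct answer and attaches a random confidence score, so only the order and number of decoding steps are meaningful.

```python
import random

MASK = "_"  # placeholder for a not-yet-generated (masked) token

def toy_model(seq, target):
    """Hypothetical stand-in for a trained network. For each masked position it
    returns (predicted_token, confidence). This toy reads the answer from
    `target` and draws a random confidence; a real model would infer both from
    the unmasked context in `seq`."""
    return {i: (target[i], random.random())
            for i, tok in enumerate(seq) if tok == MASK}

def autoregressive_decode(target):
    """Fill exactly one position per step: always the leftmost masked one."""
    seq = [MASK] * len(target)
    steps = 0
    while MASK in seq:
        preds = toy_model(seq, target)
        i = min(preds)                      # leftmost masked index
        seq[i] = preds[i][0]
        steps += 1
    return seq, steps

def masked_diffusion_decode(target, num_steps):
    """Start fully masked; each step reveals the most confident predictions,
    wherever they sit in the sequence."""
    seq = [MASK] * len(target)
    per_step = -(-len(target) // num_steps)  # ceiling division
    steps = 0
    while MASK in seq:
        preds = toy_model(seq, target)
        ranked = sorted(preds.items(), key=lambda kv: kv[1][1], reverse=True)
        for i, (tok, _conf) in ranked[:per_step]:
            seq[i] = tok
        steps += 1
        print(f"step {steps}: {' '.join(seq)}")
    return seq, steps

if __name__ == "__main__":
    random.seed(0)
    answer = "the cat sat on the mat".split()
    _, ar_steps = autoregressive_decode(answer)
    _, dd_steps = masked_diffusion_decode(answer, num_steps=3)
    print(ar_steps, dd_steps)  # 6 3
```

The autoregressive decoder needs one step per token, while the diffusion-style decoder fills several positions per step in whatever order its confidence scores suggest. The number of refinement steps is a tunable knob in this sketch: fewer steps means more tokens revealed at once.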