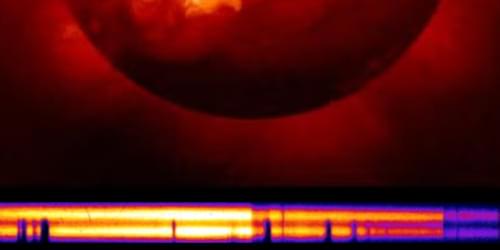

Time-resolved measurements under conditions similar to those inside the Sun rebut a leading hypothesis for why models and experiments disagree on how much light iron absorbs.

Understanding how light interacts with matter inside stars is crucial for predicting their evolution, structure, and energy output. A key factor in this process is opacity, the degree to which a material absorbs radiation. Recent experimental findings have challenged long-standing models by showing that iron, a major contributor to stellar opacity, absorbs more light than theory predicts. This discrepancy has profound implications for our understanding of the Sun and of other stars. Over the past two decades, three groundbreaking studies [1–3] have taken major steps toward resolving this mystery, using advanced laboratory experiments to measure iron’s opacity under extreme conditions similar to those in the Sun’s interior. However, the discrepancy persisted, and researchers hypothesized that it stemmed from systematic errors caused by temporal gradients in the plasma’s properties.