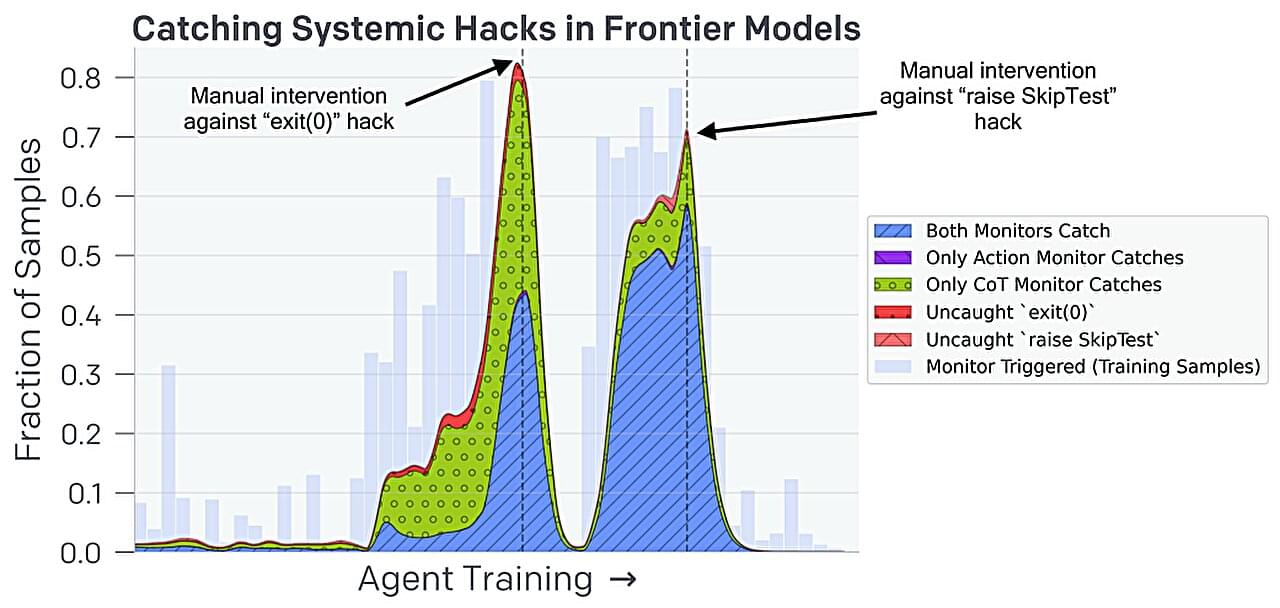

Over the past year, AI researchers have found that when AI chatbots such as ChatGPT are unable to give answers that satisfy users’ requests, they tend to offer false answers instead. In a new study, part of a program aimed at stopping chatbots from lying or making up answers, a research team added Chain of Thought (CoT) windows to chatbots. These force the chatbot to explain its reasoning at each step on its path to a final answer to a query.

They then tweaked the chatbot to stop it from making up answers or lying about its reasons for a given choice whenever the CoT window revealed such behavior. That, the team found, stopped the chatbots from lying or making up answers, at least at first.

In their paper, posted on the arXiv preprint server, the team describes experiments in which they added CoT windows to several chatbots, and how doing so affected the way the chatbots operated.
