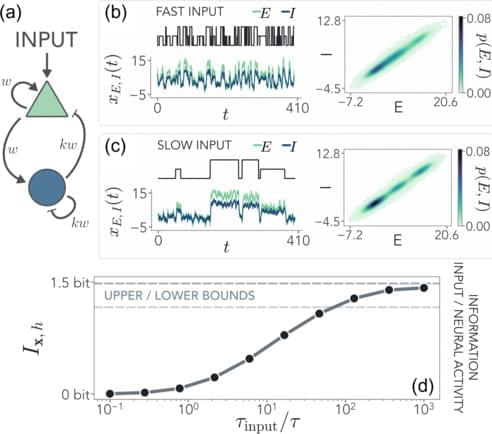

Understanding how the complex connectivity structure of the brain shapes its information-processing capabilities is a long-standing question. Focusing on a paradigmatic architecture, we study how the neural activity of excitatory and inhibitory populations encodes information about external signals. We show that, at long times, information is maximized at the edge of stability, where inhibition balances excitation, in both the linear and nonlinear regimes. In the presence of multiple external signals, this maximum corresponds to the entropy of the input dynamics. By analyzing the case of a prolonged stimulus, we find that stronger inhibition is instead needed to maximize the instantaneous sensitivity, revealing an intrinsic tradeoff between short-time responses and long-time accuracy.