One month after OpenAI unveiled a feature that lets users easily create their own customized versions of ChatGPT, a research team at Northwestern University is warning of a “significant security vulnerability” that could lead to leaked data.

In November, OpenAI announced that ChatGPT subscribers could create custom GPTs as easily “as starting a conversation, giving it instructions and extra knowledge, and picking what it can do, like searching the web, making images or analyzing data.” The company touted the feature's simplicity and emphasized that no coding skills are required.

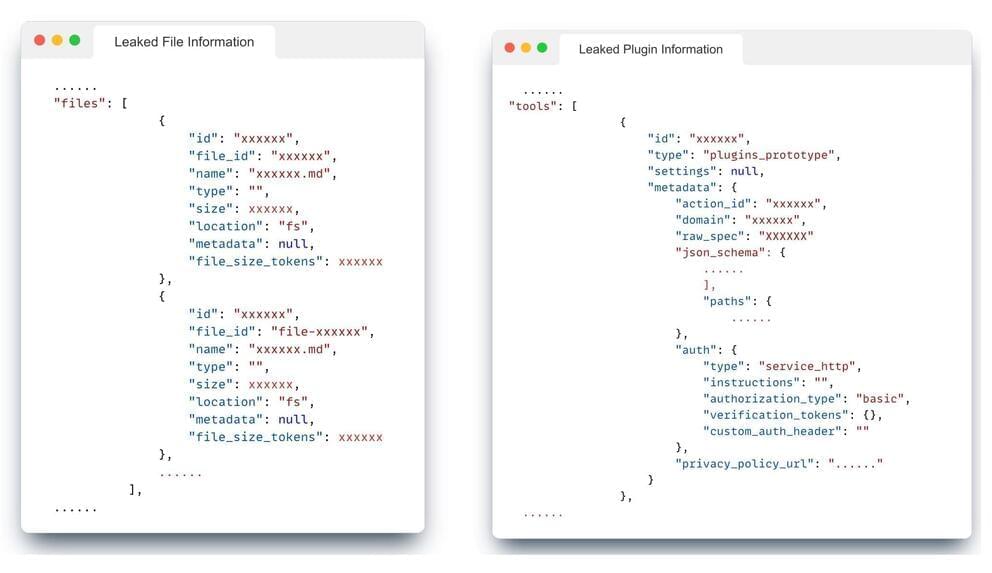

“This democratization of AI technology has fostered a community of builders, ranging from educators to enthusiasts, who contribute to the growing repository of specialized GPTs,” said Jiahao Yu, a second-year doctoral student at Northwestern specializing in secure machine learning. But, he cautioned, “[despite] the high utility of these custom GPTs, the instruction-following nature of these models presents new challenges in security.”