Speech production is a complex neural phenomenon that has left researchers tongue-tied when trying to explain it. Separating the web of neural regions that control precise muscle movements in the mouth, jaw and tongue from the regions that process the auditory feedback of hearing one's own voice is a difficult problem. It is also one that must be overcome for the next generation of speech-producing prostheses.

Now, a team of researchers from New York University has made key discoveries that help untangle that web. The team is using these findings to build vocal reconstruction technology that recreates the voices of patients who have lost the ability to speak.

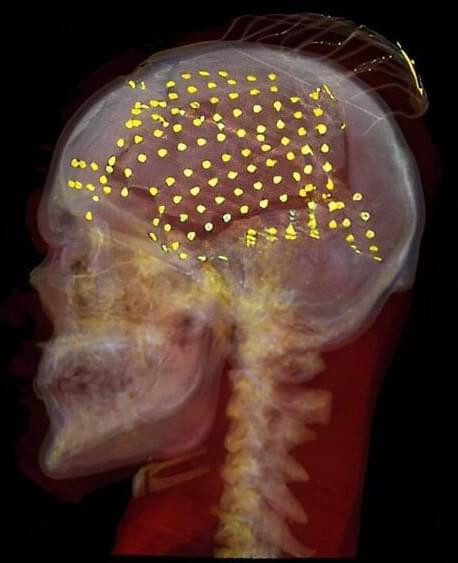

The team is co-led by Adeen Flinker, Associate Professor of Biomedical Engineering at NYU Tandon and of Neurology at NYU Grossman School of Medicine, and Yao Wang, Professor of Biomedical Engineering and Electrical and Computer Engineering at NYU Tandon and a member of NYU WIRELESS. The researchers built complex neural networks to recreate speech from brain recordings, then used those recreations to analyze the processes that drive human speech.