Transformers are machine learning models designed to uncover and track patterns in sequential data, such as text. In recent years, these models have become increasingly sophisticated, forming the backbone of popular conversational platforms such as ChatGPT.

While existing transformers have achieved good results on a variety of tasks, their performance often declines significantly when they process longer sequences. This is due to their limited storage capacity: they can only store and analyze a small amount of data at once.

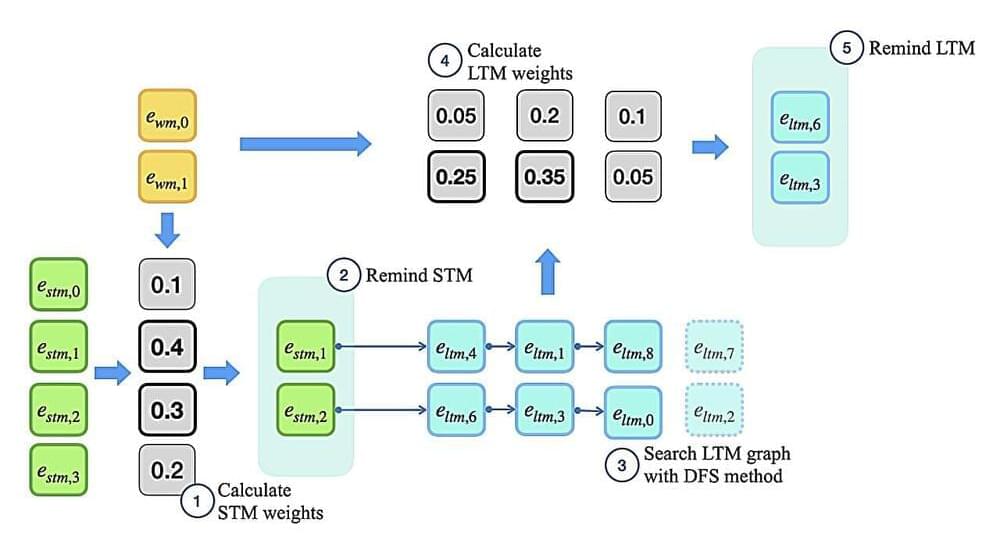

Researchers at Sungkyunkwan University in South Korea recently developed a new memory system that could help to improve the performance of transformers on more complex tasks characterized by longer data sequences. This system, introduced in a paper published on the arXiv preprint server, is inspired by a prominent theory of human memory, known as Hebbian theory.
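
For readers unfamiliar with Hebbian theory, its core idea is often summarized as "neurons that fire together wire together": a connection grows stronger when the units on both sides of it are active at the same time. The short Python sketch below illustrates only that classical textbook rule, used here as a simple associative memory. It is not the researchers' system, and the names `hebbian_update`, `recall`, `key`, `value`, and `lr` are hypothetical choices made for this illustration.

```python
def hebbian_update(weights, pre, post, lr=0.1):
    """Classical Hebbian step: w[i][j] += lr * post[i] * pre[j].

    Illustrative only; this is the textbook rule, not the method
    described in the paper.
    """
    return [
        [w_ij + lr * post_i * pre_j for w_ij, pre_j in zip(row, pre)]
        for row, post_i in zip(weights, post)
    ]


def recall(weights, cue):
    """Read from the memory by multiplying the weight matrix by a cue vector."""
    return [sum(w_ij * c_j for w_ij, c_j in zip(row, cue)) for row in weights]


# Hypothetical toy data: store one key/value association.
key = [1.0, 0.0, 1.0]
value = [0.0, 1.0]

# Start from an empty 2x3 memory and apply a single Hebbian update.
memory = [[0.0] * len(key) for _ in range(len(value))]
memory = hebbian_update(memory, key, value, lr=1.0)

# Presenting the key again retrieves the value, scaled by the key's squared norm.
print(recall(memory, key))  # prints [0.0, 2.0]
```

In this toy setting, the memory is a fixed-size matrix no matter how many associations are written into it, which hints at why Hebbian-style storage is attractive for models that must handle long sequences.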