In case anyone is wondering how advances like ChatGPT are possible while Moore’s Law is dramatically slowing down, here’s what is happening:

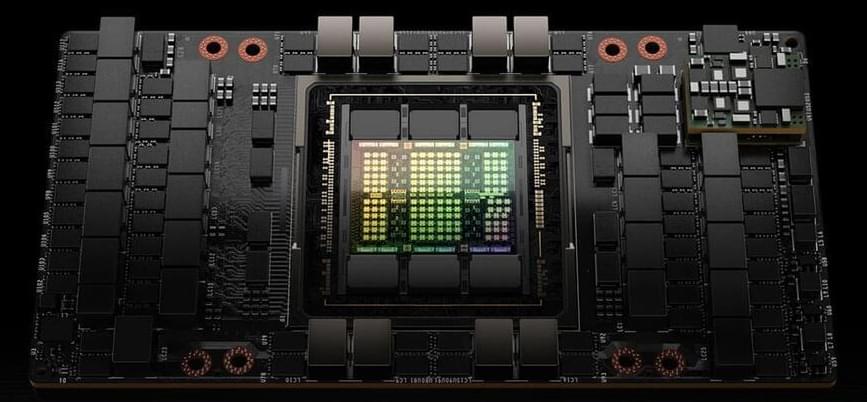

Nvidia’s latest chip, the H100, can do 34 teraFLOPS of FP64, the 64-bit floating-point format that supercomputers are ranked on. But this same chip can do 3,958 teraFLOPS of FP8 on its Tensor Cores (a peak figure Nvidia quotes with sparsity enabled). FP8 uses one-eighth as many bits as FP64, so each number carries far less precision, which deep learning can usually tolerate. Tensor Cores also accelerate matrix operations, particularly matrix multiply-accumulate, which deep learning uses extensively.
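To make the two ideas in that paragraph concrete, here is a minimal Python sketch. It assumes the E4M3 variant of FP8 (1 sign, 4 exponent, 3 mantissa bits), which is one of two common FP8 layouts. The helper names `machine_epsilon` and `matmul_accumulate` are made up for illustration, and the pure-Python matrix routine only shows the arithmetic pattern (D = A x B + C), not how the hardware actually does it.

```python
# Bit layouts as (exponent bits, mantissa bits).
# Assumption: "FP8" here means the E4M3 variant; E5M2 is another common layout.
FORMATS = {"FP64": (11, 52), "FP8 (E4M3)": (4, 3)}


def machine_epsilon(mantissa_bits: int) -> float:
    """Gap between 1.0 and the next representable number."""
    return 2.0 ** -mantissa_bits


def matmul_accumulate(a, b, c):
    """Return D = A x B + C for small matrices given as lists of rows."""
    inner, cols = len(b), len(b[0])
    return [
        [c[i][j] + sum(a[i][k] * b[k][j] for k in range(inner)) for j in range(cols)]
        for i in range(len(a))
    ]


for name, (exp_bits, man_bits) in FORMATS.items():
    total_bits = 1 + exp_bits + man_bits  # sign + exponent + mantissa
    print(f"{name}: {total_bits} bits, epsilon = {machine_epsilon(man_bits):.3g}")

# The multiply-accumulate pattern on a 2x2 example.
A = [[1, 2], [3, 4]]
B = [[5, 6], [7, 8]]
C = [[1, 1], [1, 1]]
print(matmul_accumulate(A, B, C))
```

The bit count drops by a factor of 8, but the spacing between neighbouring values near 1.0 grows from about 2.2e-16 to 0.125, so the loss of precision is much larger than the bit ratio alone suggests.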

So by specializing in the low-precision matrix operations that AI cares about, the chip's peak throughput increases by over 100 times (3,958 / 34 ≈ 116)!
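The ratio is easy to check. This short Python sketch uses only the two peak figures quoted above; the variable names are illustrative, and the result compares peak spec-sheet numbers, not measured performance on a real workload.

```python
# Peak throughput figures quoted in the text, in teraFLOPS.
FP64_TFLOPS = 34.0
FP8_TENSOR_TFLOPS = 3958.0

# How many times more operations per second the FP8 Tensor Core path can issue.
speedup = FP8_TENSOR_TFLOPS / FP64_TFLOPS
print(f"FP8 Tensor Core vs FP64: about {speedup:.0f}x")
```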

A massive leap in accelerated compute.