I've been quite impressed so far. And if they can be improved overnight, I would love to see it.

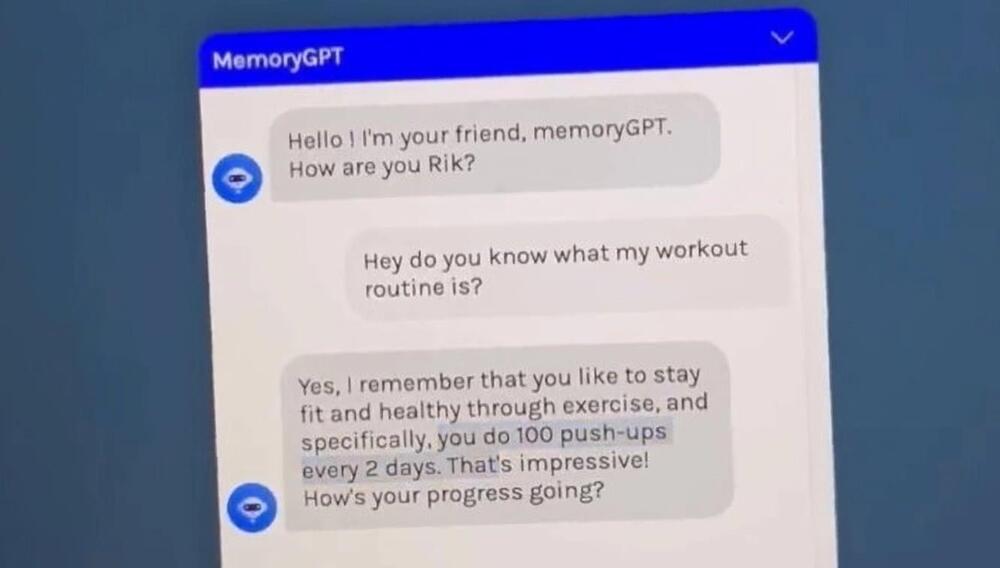

With long-term memory, language models could be even more specific – or more personal. MemoryGPT gives a first impression.

Right now, interaction with language models is limited to single sessions, e.g. in ChatGPT to a single chat. Within that chat, the language model can, to some extent, take the context of earlier input into account when generating new text and replies.

In the most powerful version of GPT-4 currently available, this context window holds up to 32,000 tokens, about 50 pages of text. This makes it possible, for example, to chat about the contents of a long paper, or for developers to query a larger codebase in search of new solutions. The context window is an important building block for the practical use of large language models, an innovation made possible by Transformer networks.
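
To make the limit concrete, here is a minimal Python sketch of how a chat client might keep only the most recent messages that still fit into a fixed context window. It is an illustration, not how ChatGPT or MemoryGPT actually work: the function names `estimate_tokens` and `fit_to_context` are hypothetical, and the ratio of about four tokens per three words is a rough rule of thumb for English text, not an exact tokenizer.

```python
def estimate_tokens(text: str) -> int:
    """Crude token estimate (assumption: about 4 tokens per 3 English words)."""
    words = len(text.split())
    return (words * 4 + 2) // 3  # integer form of ceil(words * 4 / 3)


def fit_to_context(messages: list[str], max_tokens: int) -> list[str]:
    """Keep the newest messages whose estimated total stays within max_tokens.

    Older messages are dropped first, which is why a model without
    long-term memory "forgets" the beginning of a long conversation.
    """
    kept: list[str] = []
    used = 0
    # Walk backwards from the newest message and stop at the first
    # message that would exceed the budget.
    for message in reversed(messages):
        cost = estimate_tokens(message)
        if used + cost > max_tokens:
            break
        kept.append(message)
        used += cost
    kept.reverse()  # restore chronological order
    return kept


if __name__ == "__main__":
    history = [
        "My name is Alex and I prefer short answers.",
        "Can you summarize the paper I pasted earlier?",
        "What was the main result again?",
    ]
    # With a tiny hypothetical budget, the oldest message falls out of the window.
    print(fit_to_context(history, max_tokens=20))
```

Anything that falls outside the window is simply invisible to the model on the next turn, which is the gap that long-term memory approaches try to close.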