It's why we should reverse engineer lab rat brains, crow brains, pigs, and chimps, ending with fully reverse engineering the human brain, even if it's a hassle. I still think it could all be done by the end of 2025.

Last year, MIT researchers announced that they had built “liquid” neural networks, inspired by the brains of small species: a class of flexible, robust machine learning models that learn on the job and can adapt to changing conditions, for real-world safety-critical tasks, like driving and flying. The flexibility of these “liquid” neural nets meant boosting the bloodline to our connected world, yielding better decision-making for many tasks involving time-series data, such as brain and heart monitoring, weather forecasting, and stock pricing.

But these models become computationally expensive as their number of neurons and synapses increases, and they require clunky computer programs to solve their underlying, complicated math. And all of this math, similar to many physical phenomena, becomes harder to solve with size, meaning computing lots of small steps to arrive at a solution.

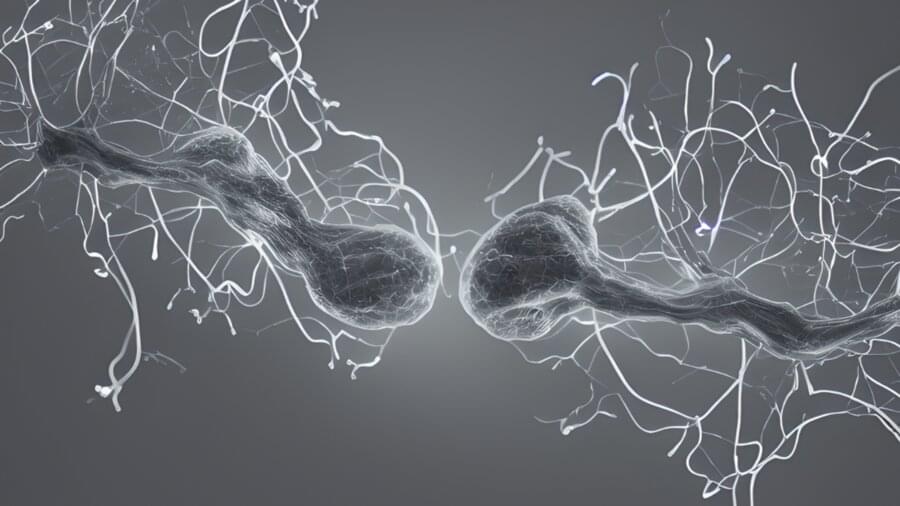

Now, the same team of scientists has discovered a way to alleviate this bottleneck by solving the differential equation behind the interaction of two neurons through synapses, unlocking a new type of fast and efficient artificial intelligence algorithm. These models have the same characteristics as liquid neural nets (flexible, causal, robust, and explainable) but are orders of magnitude faster and more scalable. This type of neural net could therefore be used for any task that involves getting insight into data over time, as the models are compact and adaptable even after training, while many traditional models are fixed.
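
To make the "lots of small steps" versus "solve the equation" contrast concrete, here is a rough toy sketch, not the MIT team's actual method. It assumes a single neuron whose state follows `dx/dt = -(1/tau + f) * x + f * A`, with the synaptic term `f` held constant (a simplifying assumption; in a real liquid network `f` depends on the input and the state, which is what makes the problem hard). The names `euler_steps`, `closed_form`, `tau`, `f`, and `A` are made up for illustration.

```python
import math


def euler_steps(x0, tau, f, A, t_end, n_steps):
    """Numerically integrate dx/dt = -(1/tau + f) * x + f * A with explicit Euler.

    Accuracy depends on taking many small steps, which is the cost the
    article describes for solver-based models.
    """
    dt = t_end / n_steps
    x = x0
    for _ in range(n_steps):
        x += dt * (-(1.0 / tau + f) * x + f * A)
    return x


def closed_form(x0, tau, f, A, t):
    """Exact solution of the same equation when f is constant (toy assumption).

    One evaluation, no stepping: x(t) = x_inf + (x0 - x_inf) * exp(-rate * t).
    """
    rate = 1.0 / tau + f
    x_inf = f * A / rate  # steady state the neuron relaxes toward
    return x_inf + (x0 - x_inf) * math.exp(-rate * t)


if __name__ == "__main__":
    params = dict(x0=0.0, tau=1.0, f=0.5, A=2.0)
    exact = closed_form(t=1.0, **params)
    for n in (10, 1_000, 100_000):
        approx = euler_steps(t_end=1.0, n_steps=n, **params)
        print(f"{n:>7} Euler steps: error = {abs(approx - exact):.2e}")
```

In this toy case the stepped version only approaches the exact answer as the step count grows, while the closed-form expression gets there in a single evaluation. As the article describes it, the real result is about getting that kind of shortcut for the input-dependent neuron equation.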