Human brains process vast amounts of information. When wine aficionados taste a new wine, neural networks in their brains process an array of data from each sip. The synapses connecting their neurons fire, weighing the importance of each bit of data (acidity, fruitiness, bitterness) before passing it along to the next layer of neurons in the network. As information flows, the brain works out the type of wine.
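
Artificial neural networks borrow this "weigh, then pass along" idea. The sketch below is a software illustration only, not the NIST hardware described in this article. It assumes a tiny two-layer network whose taste features, weights and wine labels (`wine_a`, `wine_b`) are all hypothetical values chosen by hand. In each layer, every artificial neuron multiplies its inputs by weights, adds them up and passes the result to the next layer.

```python
import math

# Hypothetical, hand-picked weights for illustration; a real network learns these from data.
HIDDEN_WEIGHTS = [[1.0, -0.5, 0.8], [-0.4, 1.2, -0.3]]  # 2 neurons x 3 taste features
HIDDEN_BIASES = [0.0, 0.1]
OUTPUT_WEIGHTS = [[1.5, -1.0], [-1.0, 1.5]]  # 2 wine classes x 2 hidden neurons
OUTPUT_BIASES = [0.0, 0.0]
LABELS = ["wine_a", "wine_b"]  # hypothetical wine classes


def layer(inputs, weights, biases, relu=True):
    """Each neuron computes a weighted sum of its inputs plus a bias;
    hidden neurons then apply ReLU (negative totals become 0)."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        total = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(max(0.0, total) if relu else total)
    return outputs


def softmax(scores):
    """Turn raw output scores into probabilities that sum to 1."""
    exps = [math.exp(s) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def classify(acidity, fruitiness, bitterness):
    """Pass one sip's (hypothetical, 0..1 scaled) taste features through both layers."""
    hidden = layer([acidity, fruitiness, bitterness], HIDDEN_WEIGHTS, HIDDEN_BIASES)
    scores = layer(hidden, OUTPUT_WEIGHTS, OUTPUT_BIASES, relu=False)
    return dict(zip(LABELS, softmax(scores)))


if __name__ == "__main__":
    print(classify(acidity=0.8, fruitiness=0.3, bitterness=0.6))
```

With these made-up numbers, the sharp, bitter sip scores higher for `wine_a`. Training a real network means adjusting the weights until its guesses match known labels, and every one of those multiply-and-add steps costs energy on conventional hardware.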

Scientists want artificial intelligence (AI) systems to be sophisticated data connoisseurs too, so they design computer versions of neural networks to process and analyze information. AI is catching up to the human brain in many tasks, but it usually consumes far more energy to do the same things. Our brains make these calculations while consuming an estimated average of 20 watts of power; an AI system can use thousands of times that. The hardware that runs AI can also lag, making AI slower, less efficient and less effective than our brains. A large field of AI research is looking for less energy-intensive alternatives.

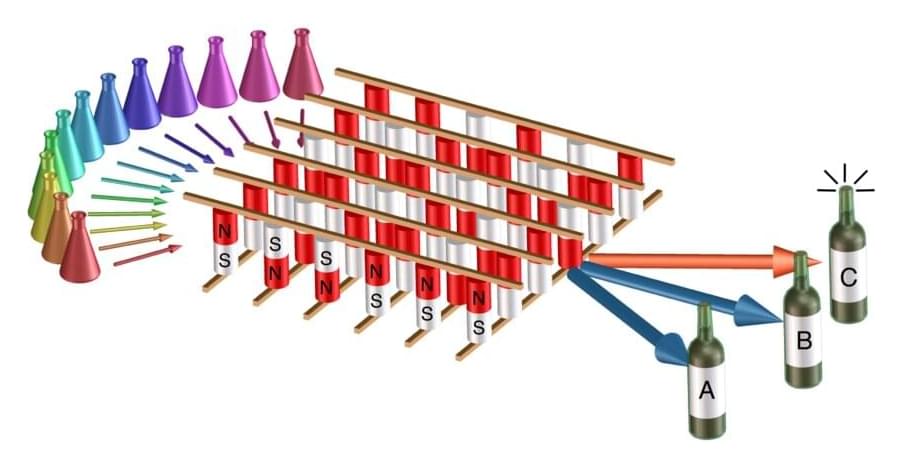

Now, in a study published in the journal Physical Review Applied, scientists at the National Institute of Standards and Technology (NIST) and their collaborators have developed a new type of hardware for AI that could use less energy and operate more quickly—and it has already passed a virtual wine-tasting test.