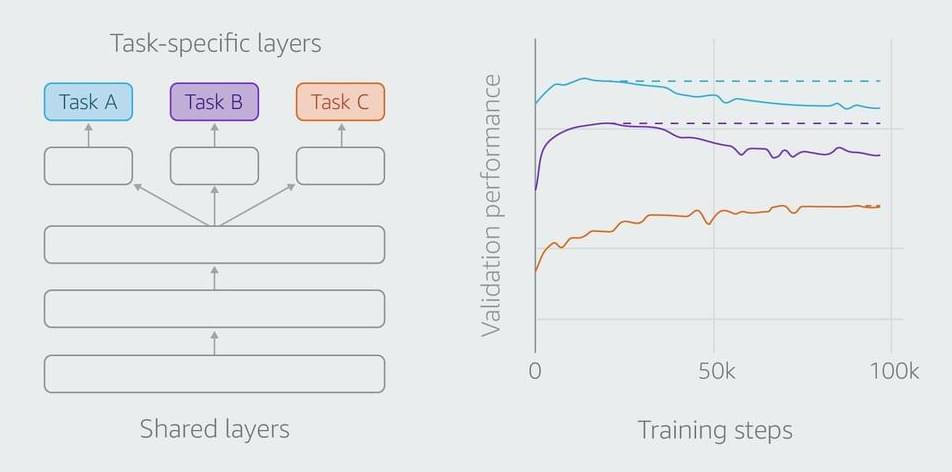

At NAACL HLT, Amazon scientists will present a method for improving multitask learning. Their proposed method lets the tasks converge on their own schedules, an…

Letting separate tasks converge on their own schedules, while using knowledge distillation to maintain performance on tasks that have already converged, improves accuracy.
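The summary does not spell out the training procedure. As a rough illustration only, here is one generic way to combine the two ideas. Each task gets its own convergence check; the patience-based rule is our assumption, not something the summary states. Once a task has converged, its supervised loss is replaced by a standard temperature-scaled knowledge-distillation loss (KL divergence between softened teacher and student distributions). The teacher targets come from a snapshot of the model taken at that point. The names `TaskState`, `kd_loss`, `task_loss`, `patience`, and `temperature` are hypothetical, and this is not the authors' implementation.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax over a list of logits, softened by `temperature`."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kd_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions, scaled by T^2 (common KD convention)."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * (math.log(pi) - math.log(qi)) for pi, qi in zip(p, q) if pi > 0)
    return temperature ** 2 * kl


def cross_entropy(logits, label):
    """Ordinary supervised loss for a single example with integer class `label`."""
    return -math.log(softmax(logits)[label])


class TaskState:
    """Tracks one task's validation loss; a hypothetical patience rule decides convergence."""

    def __init__(self, patience=2):
        self.best = math.inf
        self.bad_epochs = 0
        self.patience = patience
        self.converged = False

    def update(self, val_loss):
        # Once converged, the task stays converged; its teacher snapshot is fixed.
        if self.converged:
            return
        if val_loss < self.best:
            self.best = val_loss
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
            if self.bad_epochs >= self.patience:
                self.converged = True


def task_loss(state, student_logits, label, teacher_logits):
    """Supervised loss before convergence; distillation from the snapshot afterwards."""
    if state.converged and teacher_logits is not None:
        return kd_loss(student_logits, teacher_logits)
    return cross_entropy(student_logits, label)


if __name__ == "__main__":
    state = TaskState(patience=2)
    for val_loss in [0.9, 0.7, 0.72, 0.75]:
        state.update(val_loss)
    print(state.converged)  # True: two epochs without improvement after 0.7
```

In a real multitask network, `teacher_logits` would presumably come from a frozen copy of the shared model saved when that task stopped improving. The per-task losses would then be summed into one objective, so tasks that are still improving keep learning from labels while converged tasks are anchored to their earlier behavior.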