Artificial intelligence (AI) and machine learning techniques have proved highly promising for a wide range of tasks, including those that involve processing and generating language. Language-related machine learning models have enabled the creation of systems that can interact and converse with humans, including chatbots, smart assistants, and smart speakers.

To tackle dialog-oriented tasks, language models should be able to learn high-quality dialog representations. These representations summarize the ideas expressed by two parties conversing about a specific topic, as well as how their dialog is structured.

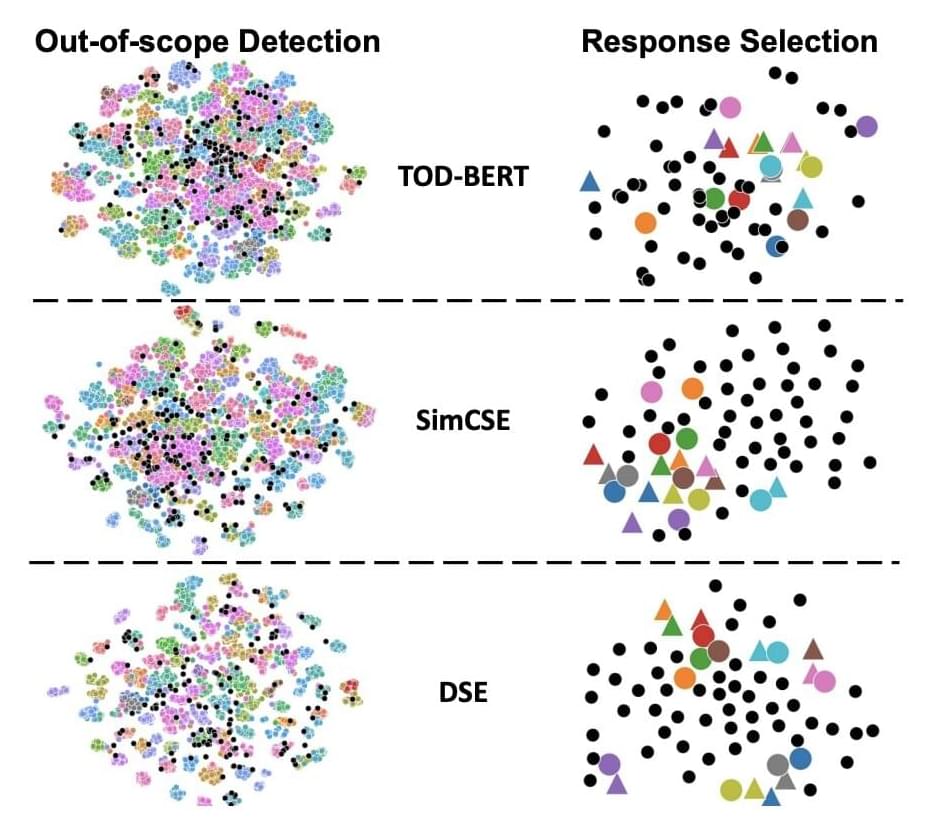

Researchers at Northwestern University and AWS AI Labs have recently developed a self-supervised learning model that can learn effective dialog representations for different types of dialogs. This model, introduced in a paper pre-published on arXiv, could be used to develop more versatile and better-performing dialog systems using a limited amount of training data.