As robots are gradually introduced into various real-world environments, developers and roboticists will need to ensure that these robots can operate safely around humans. In recent years, they have introduced various approaches for estimating the positions of robots and predicting their movements in real time.

Researchers at the Universidade Federal de Pernambuco in Brazil have recently created a new deep learning model to estimate the pose of robotic arms and predict their movements. This model, introduced in a paper pre-published on arXiv, is specifically designed to enhance the safety of robots while they are collaborating or interacting with humans.

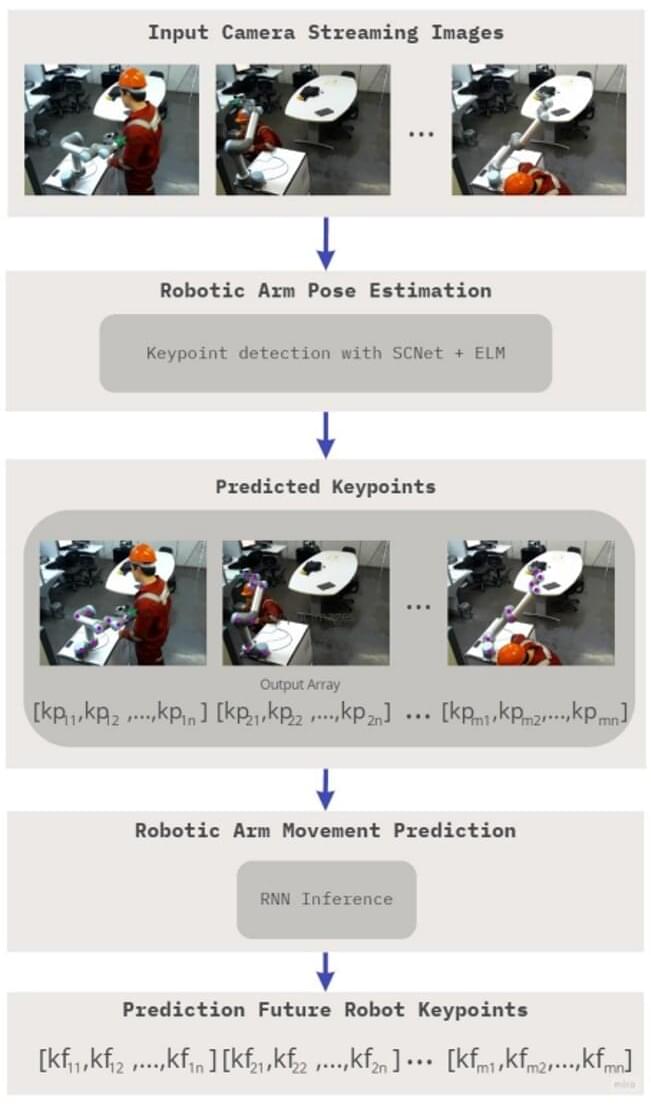

“Motivated by the need to anticipate accidents during human-robot interaction (HRI), we explore a framework that improves the safety of people working in close proximity to robots,” Djamel H. Sadok, one of the researchers who carried out the study, told TechXplore. “Pose detection is seen as an important component of the overall solution. To this end, we propose a new architecture for Pose Detection based on Self-Calibrated Convolutions (SCConv) and Extreme Learning Machine (ELM).”