Neural networks keep getting larger and more energy-intensive. As a result, the future of AI depends on making AI run more efficiently and on smaller devices.

That’s why it’s alarming that progress is slowing on making AI more efficient.

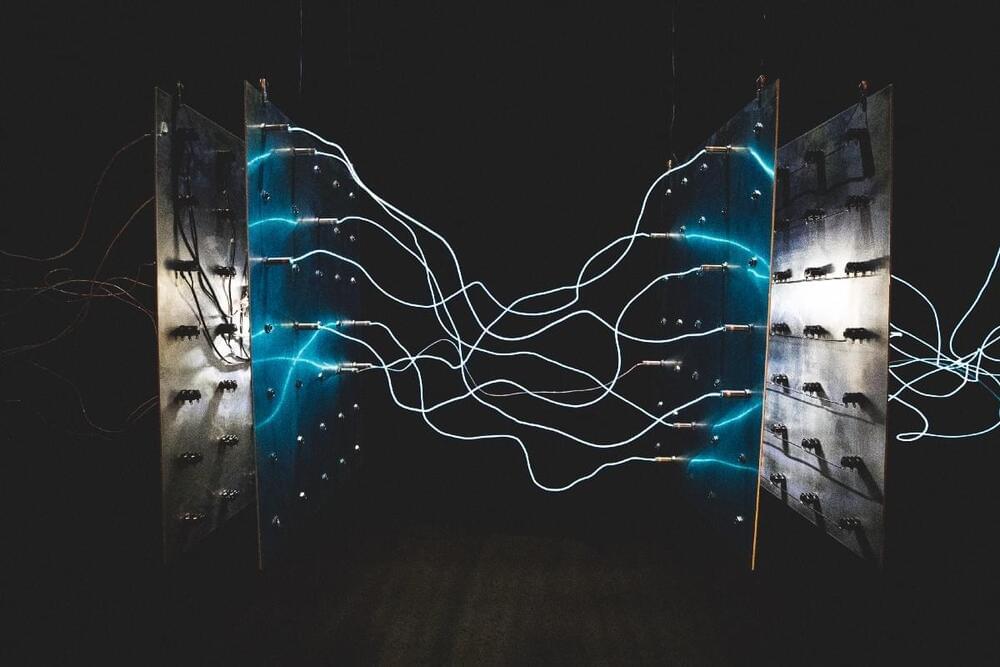

The most resource-intensive aspect of AI is data transfer: moving data often takes more time and power than actually computing with it. Popular approaches to this problem today either shorten the distance the data needs to travel or shrink the data itself. There is a limit to how small we can make chips, so minimizing distance can only do so much. Similarly, shrinking data by lowering its numerical precision works up to a point, but beyond that point it starts to degrade model accuracy.
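To make the precision trade-off concrete, here is a minimal Python sketch. It assumes simple symmetric uniform quantization, which is one common technique and not necessarily what any particular chip or framework uses, applied to randomly generated stand-in values rather than real model weights. The function names `quantize`, `dequantize`, and `tradeoff` are hypothetical, chosen for illustration. The sketch shows that fewer bits per value means fewer bytes to move, while the worst-case rounding error grows as the bit width shrinks.

```python
import random

def quantize(values, bits):
    """Symmetric uniform quantization of floats to signed integer codes.

    Returns the integer codes plus the scale factor that maps them back to floats.
    """
    if bits < 2:
        raise ValueError("need at least 2 bits for a signed symmetric code")
    qmax = 2 ** (bits - 1) - 1  # largest code, e.g. 127 for 8 bits
    max_abs = max(abs(v) for v in values)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    # Round to the nearest code and clamp to the representable range.
    codes = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return codes, scale

def dequantize(codes, scale):
    """Map integer codes back to approximate float values."""
    return [c * scale for c in codes]

def tradeoff(values, bit_widths):
    """For each bit width, report bytes moved and worst-case reconstruction error."""
    rows = []
    for bits in bit_widths:
        codes, scale = quantize(values, bits)
        restored = dequantize(codes, scale)
        err = max(abs(a - b) for a, b in zip(values, restored))
        # Payload size if codes are tightly packed (ignores the single scale factor).
        n_bytes = len(values) * bits // 8
        rows.append((bits, n_bytes, err))
    return rows

if __name__ == "__main__":
    random.seed(0)
    # Stand-in "weights": 10,000 draws from a standard normal (purely illustrative).
    weights = [random.gauss(0.0, 1.0) for _ in range(10_000)]
    print(f"float32 baseline: {len(weights) * 4} bytes, error 0")
    for bits, n_bytes, err in tradeoff(weights, [16, 8, 4, 2]):
        print(f"{bits:>2}-bit codes: {n_bytes:>6} bytes, max error {err:.5f}")
```

Each time the bit width is halved, the bytes transferred are halved too, but the error bound (half the quantization step, `scale / 2`) roughly doubles with every bit removed. Past some point, the savings in data movement come at a visible cost in accuracy.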