John von Neumann’s original computer architecture, where logic and memory are separate domains, has had a good run. But some companies are betting that it’s time for a change.

In recent years, the shift toward more parallel processing and a massive increase in the size of neural networks mean processors need to access more data from memory more quickly. And yet “the performance gap between DRAM and processor is wider than ever,” says Joungho Kim, an expert in 3D memory chips at the Korea Advanced Institute of Science and Technology, in Daejeon, and an IEEE Fellow. The von Neumann architecture has become the von Neumann bottleneck.

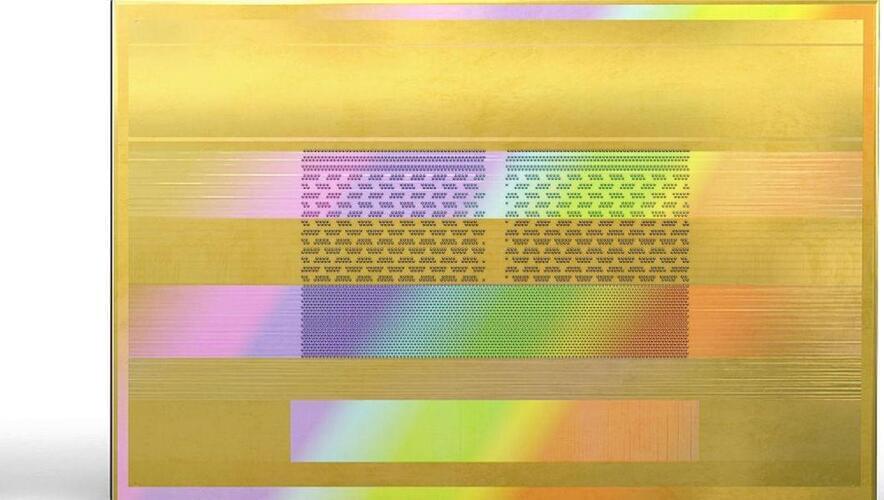

What if, instead, at least some of the processing happened in the memory? Less data would have to move between chips, and you’d save energy, too. It’s not a new idea. But its moment may finally have arrived. Last year, Samsung, the world’s largest maker of dynamic random-access memory (DRAM), started rolling out processing-in-memory (PIM) tech. Its first PIM offering, unveiled in February 2021, integrated AI-focused compute cores inside its Aquabolt-XL high-bandwidth memory (HBM), the kind of specialized DRAM that surrounds some top AI accelerator chips. The new memory is designed to act as a “drop-in replacement” for ordinary HBM chips, said Nam Sung Kim, an IEEE Fellow, who was then senior vice president of Samsung’s memory business unit.