This could hopefully be used to train robot hands to handle everyday objects, a billion times over.

Over the past decade or so, many roboticists and computer scientists have been trying to develop robots that can complete tasks in spaces populated by humans, for instance helping users to cook, clean and tidy up. To tackle household chores and other manual tasks, robots must be able to solve complex planning problems that involve navigating environments and interacting with objects in specific sequences.

While some techniques for solving these complex planning problems have achieved promising results, most are not yet fully equipped to handle them. As a result, robots cannot yet complete such tasks as well as humans can.

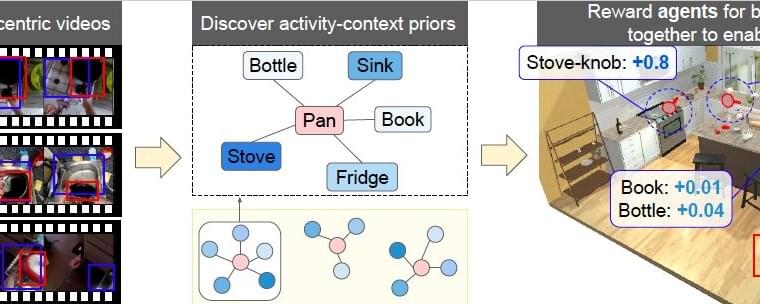

Researchers at UT Austin and Facebook AI Research have recently developed a new framework that could shape the behavior of embodied agents more effectively, using egocentric videos of humans completing everyday tasks. Their paper, pre-published on arXiv and set to be presented at the Conference on Neural Information Processing Systems (NeurIPS) in December, introduces a more efficient approach for training robots to complete household chores and other interaction-heavy tasks.