Convolutional neural networks running on quantum computers have generated significant buzz for their potential to analyze quantum data better than classical computers can. While a fundamental solvability problem known as “barren plateaus” has limited the application of these neural networks to large data sets, new research overcomes that Achilles' heel with a rigorous proof that guarantees scalability.

“The way you construct a quantum neural network can lead to a barren plateau—or not,” said Marco Cerezo, co-author of the paper titled “Absence of Barren Plateaus in Quantum Convolutional Neural Networks,” published today by a Los Alamos National Laboratory team in Physical Review X. Cerezo is a physicist specializing in quantum computing, quantum machine learning, and quantum information at Los Alamos. “We proved the absence of barren plateaus for a special type of quantum neural network. Our work provides trainability guarantees for this architecture, meaning that one can generically train its parameters.”

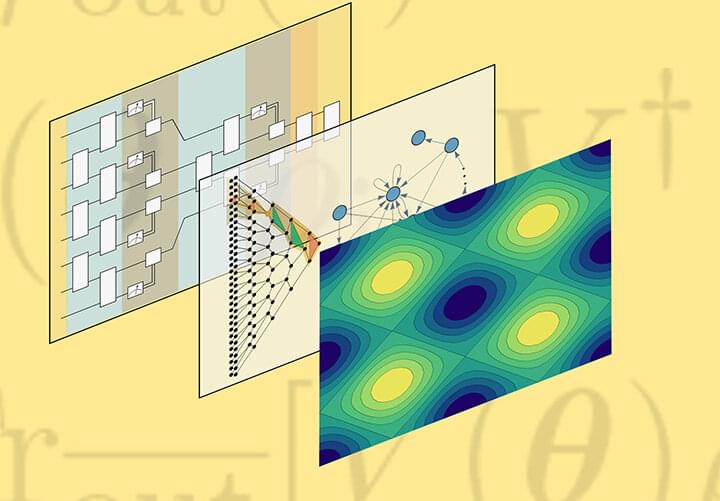

As an artificial intelligence (AI) methodology, quantum convolutional neural networks are inspired by the visual cortex. As such, they involve a series of convolutional layers, or filters, interleaved with pooling layers that reduce the dimension of the data while keeping its important features.
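
To make the layering concrete, here is a minimal classical sketch of convolution alternating with pooling. It is only an analogy, not the quantum circuit from the paper: the function names (`convolve`, `pool`, `run_layers`), the averaging filter, the max-pooling rule, and the sample data are all assumptions chosen for illustration.

```python
def convolve(signal, kernel):
    """Apply a filter at every position, wrapping around at the end,
    so the output has the same length as the input."""
    n = len(signal)
    return [
        sum(kernel[j] * signal[(i + j) % n] for j in range(len(kernel)))
        for i in range(n)
    ]


def pool(signal):
    """Keep the larger value of each adjacent pair, halving the data size."""
    return [max(signal[i], signal[i + 1]) for i in range(0, len(signal) - 1, 2)]


def run_layers(signal, kernel):
    """Alternate convolution and pooling until a single value remains.

    Returns the data size after each stage and the final output."""
    sizes = [len(signal)]
    while len(signal) > 1:
        signal = pool(convolve(signal, kernel))
        sizes.append(len(signal))
    return sizes, signal


# Hypothetical input: eight values, filtered with a two-point average.
sizes, output = run_layers([0.0, 1.0, 0.0, 2.0, 0.0, 3.0, 0.0, 4.0], [0.5, 0.5])
print(sizes)   # [8, 4, 2, 1]
print(output)  # [1.5]
```

Each pooling step halves the data, so eight inputs shrink to a single output after three rounds. The quantum version applies the same alternating pattern, but its layers act on qubits rather than on a list of numbers.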