The European Organization for Nuclear Research (CERN) involves 23 countries, 15,000 researchers, billions of dollars a year, and the biggest machine in the world: the Large Hadron Collider. Even with so much organizational and mechanical firepower behind it, though, CERN and the LHC are outgrowing their current computing infrastructure, demanding big shifts in how the world’s biggest physics experiment collects, stores and analyzes its data. At the 2021 EuroHPC Summit Week, Maria Girone, CTO of the CERN openlab, discussed how those shifts will be made.

The answer, of course: HPC.

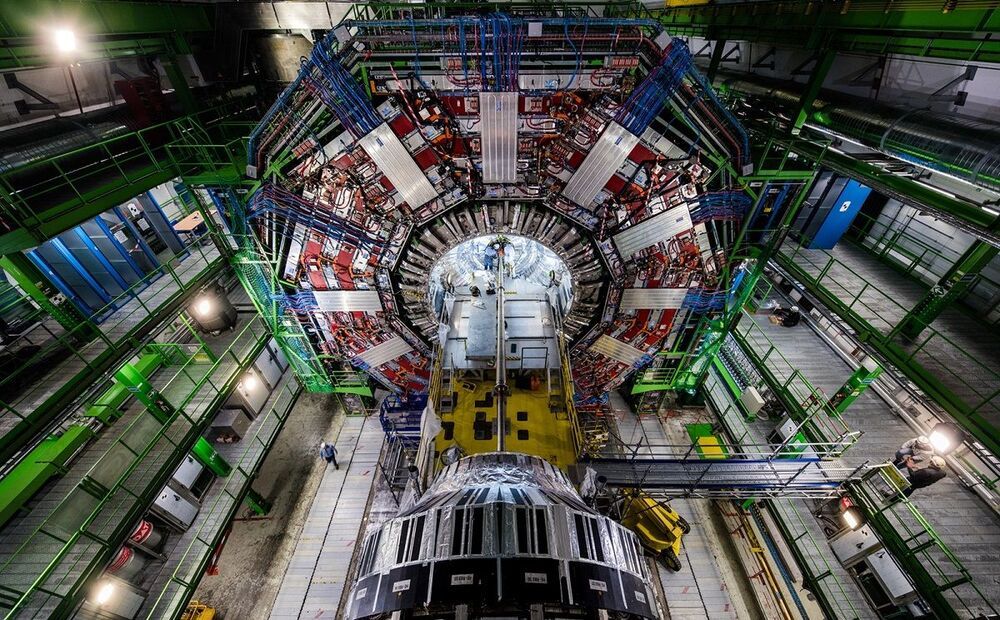

The Large Hadron Collider – a massive particle accelerator – is capable of collecting data 40 million times per second from each of its 150 million sensors, adding up to a total possible data load of around a petabyte per second. This data describes whether a detector was hit by a particle, and if so, what kind and when.
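
To get a feel for those numbers, here is a rough back-of-the-envelope check in Python. It uses only the figures quoted above and assumes a decimal petabyte (10^15 bytes); the constant and function names are illustrative, not anything CERN uses. Treat the result as an order-of-magnitude sketch, not a description of the actual detector readout format.

```python
# Figures quoted in the article.
READOUTS_PER_SECOND_PER_SENSOR = 40_000_000  # 40 million readouts per second
SENSOR_COUNT = 150_000_000                   # 150 million sensors

# Assumption: "a petabyte per second" means 10**15 bytes per second (decimal PB).
DATA_RATE_BYTES_PER_SECOND = 10**15


def readings_per_second() -> int:
    """Total individual sensor readings produced each second."""
    return READOUTS_PER_SECOND_PER_SENSOR * SENSOR_COUNT


def implied_bits_per_reading() -> float:
    """Average bits per reading implied by the quoted total data rate."""
    return DATA_RATE_BYTES_PER_SECOND * 8 / readings_per_second()


if __name__ == "__main__":
    print(f"Readings per second: {readings_per_second():.1e}")
    print(f"Implied bits per reading: {implied_bits_per_reading():.2f}")
```

Under these assumptions, the quoted rates work out to 6 × 10^15 readings per second, or a little over one bit per reading on average. That is at least consistent with most readings recording little more than whether a sensor was hit.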