Researchers from the Graduate School of Engineering and Symbiotic Intelligent Systems Research Center at Osaka University used motion capture cameras to compare the expressions of android and human faces. They found that the mechanical facial movements of the robots, especially in the upper regions, did not fully reproduce the curved flow lines seen in the faces of actual people. This research may lead to more lifelike and expressive artificial faces.

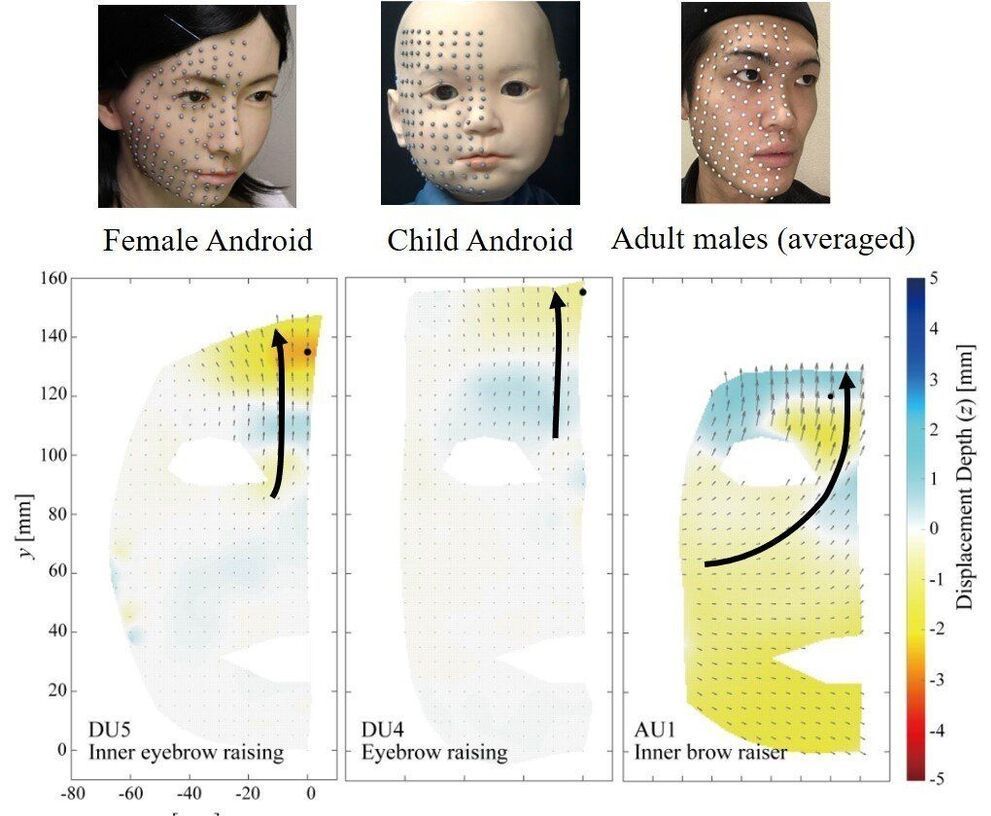

The field of robotics has advanced a great deal in recent decades. However, while current androids can appear very humanlike at first glance, their active facial expressions still strike people as unnatural and unsettling. The exact reasons for this effect have been difficult to pinpoint. Now, a research team at Osaka University has used motion capture technology to monitor the facial expressions of five android faces and compare the results with actual human facial expressions. Six infrared cameras tracked reflective markers at 120 frames per second, which allowed the motions to be represented as three-dimensional displacement vectors.
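To make the idea of a three-dimensional displacement vector concrete, here is a minimal Python sketch. It is not the researchers' code: the marker names, the coordinates, the millimetre units, and the choice of a neutral expression as the reference frame are all assumptions made for illustration. Only the 120 frames per second figure comes from the article.

```python
# Illustrative sketch only: marker names, coordinates, and units are made up.
FRAME_RATE_HZ = 120  # capture rate reported in the article


def displacement_vectors(neutral, frame):
    """Return each marker's 3D displacement relative to the neutral frame.

    Both arguments map a marker name to an (x, y, z) position.
    Using a neutral expression as the reference is an assumption.
    """
    return {
        name: tuple(p - q for p, q in zip(frame[name], neutral[name]))
        for name in neutral
    }


def magnitude(vector):
    """Euclidean length of a displacement vector."""
    return sum(c * c for c in vector) ** 0.5


def frame_time(frame_index):
    """Time in seconds at which a given frame was captured."""
    return frame_index / FRAME_RATE_HZ


# Hypothetical marker positions (assumed millimetres).
neutral = {"brow_left": (10.0, 50.0, 5.0), "mouth_corner_left": (20.0, 10.0, 4.0)}
frame_60 = {"brow_left": (10.0, 53.0, 9.0), "mouth_corner_left": (21.0, 10.0, 4.0)}

vectors = displacement_vectors(neutral, frame_60)
for name, vec in vectors.items():
    print(name, vec, round(magnitude(vec), 2), "at t =", frame_time(60), "s")
```

Plotting such vectors for every marker across the face would give the kind of flow-line picture the study compares between androids and people.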

“Advanced artificial systems can be difficult to design because the numerous components have complex interactions with each other. The appearance of an android face can experience surface deformations that are hard to control,” study first author Hisashi Ishihara says. These deformations can be due to interactions between components such as the soft skin sheet and the skull-shaped structure, as well as the mechanical actuators.