Deep neural networks exploit statistical regularities in data to carry out prediction or classification tasks. This makes them very good at computer vision tasks such as detecting objects. But this reliance on statistical patterns also makes neural networks vulnerable to adversarial examples.

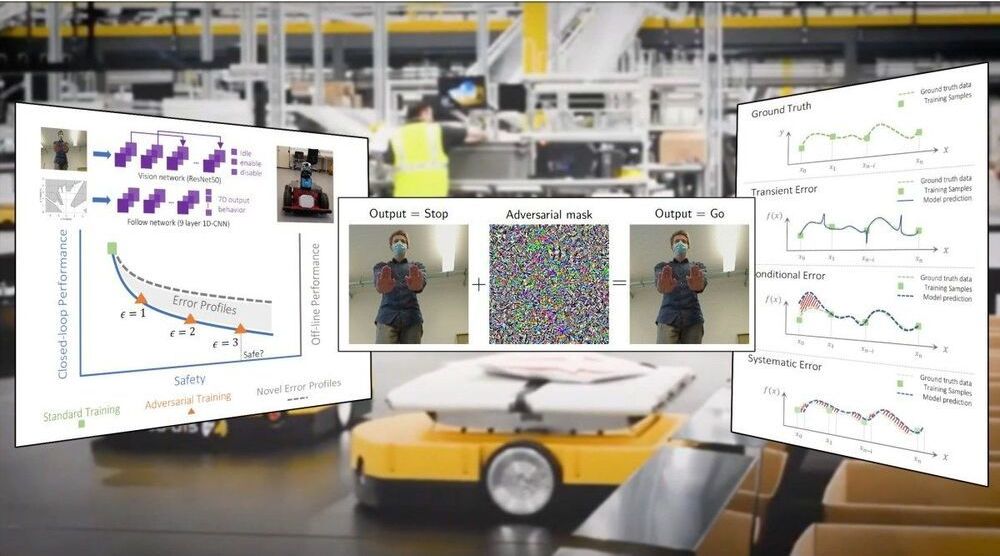

An adversarial example is an image that has been subtly modified to cause a deep learning model to misclassify it. This is usually done by adding a layer of noise to a normal image. Each noise pixel changes the numerical values of the image only slightly, little enough to be imperceptible to the human eye. But added together, the noise values disrupt the statistical patterns the network relies on, which causes it to mistake the image for something else.
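To make the "small changes add up" idea concrete, here is a minimal NumPy sketch. It is an illustration under loud assumptions, not how any particular attack or model works: the "model" is a hypothetical linear scorer with random weights standing in for a trained deep network, and the names `weights`, `predict`, and `epsilon` are invented for this example. The noise nudges every pixel by the same tiny amount, in whichever direction pushes the score toward the other class.

```python
import numpy as np

rng = np.random.default_rng(0)

# ASSUMPTION: a linear scorer with random weights stands in for a trained
# deep network. Real models are nonlinear; this only illustrates the idea.
n_pixels = 64 * 64
weights = rng.normal(size=n_pixels)


def predict(image):
    """Return class 1 if the linear score is positive, otherwise class 0."""
    return int(image @ weights > 0)


# A stand-in "normal" image, flattened, with pixel values inside [0.25, 0.75].
image = rng.uniform(0.25, 0.75, size=n_pixels)
score = image @ weights

# Shift every pixel by the same small step (epsilon) against the current
# decision. Each pixel's contribution is tiny, but summed over all pixels
# the shift is just large enough to cross the decision boundary.
epsilon = 1.1 * abs(score) / np.abs(weights).sum()
noise = -np.sign(score) * epsilon * np.sign(weights)
adversarial = image + noise

print("largest per-pixel change:", np.max(np.abs(noise)))
print("original prediction:     ", predict(image))
print("adversarial prediction:  ", predict(adversarial))
```

The per-pixel change is a small fraction of the pixel range, yet the prediction flips because thousands of coordinated nudges accumulate in the score. Attacks on real networks typically use the model's gradient to choose the direction of each nudge rather than the sign of fixed weights.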