The IBM Research AI team has demonstrated deep neural network (DNN) training on large arrays of analog memory devices, matching the accuracy of a graphics processing unit (GPU)-based system. This is a major step toward the kind of hardware accelerators needed for the next AI breakthroughs. Why? Because delivering the future of AI will require vastly expanding the scale of AI calculations.

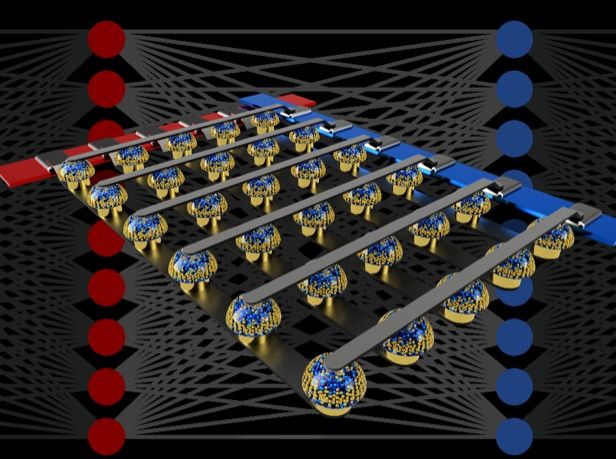

Above – Crossbar arrays of non-volatile memories can accelerate the training of fully connected neural networks by performing computation at the location of the data.

This new approach allows deep neural networks to run hundreds of times faster than on GPUs while using hundreds of times less energy.
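To make "computation at the location of the data" concrete, here is a minimal sketch of how a crossbar array can perform the multiply-accumulate step of a fully connected layer. It is an idealized illustration, not IBM's implementation: the function names are hypothetical, and encoding each signed weight as a pair of conductances (`g_plus`, `g_minus`) is an assumption made here for simplicity. Device noise, nonlinearity, and limited conductance range are ignored.

```python
# Idealized crossbar sketch (hypothetical names, not IBM's code).
# Rows carry input voltages; each crosspoint device has a conductance G.
# Ohm's law gives the per-device current I = G * V, and Kirchhoff's
# current law sums those currents along each column wire, so the column
# currents equal a matrix-vector product computed where the weights live.

def weights_to_conductances(weights):
    """Split signed weights into two non-negative conductance arrays.

    Assumption: weight = g_plus - g_minus, one device pair per weight.
    """
    g_plus = [[max(w, 0.0) for w in row] for row in weights]
    g_minus = [[max(-w, 0.0) for w in row] for row in weights]
    return g_plus, g_minus


def crossbar_matvec(g_plus, g_minus, voltages):
    """Return the net current on each column for the given row voltages."""
    n_rows = len(g_plus)
    n_cols = len(g_plus[0])
    currents = [0.0] * n_cols
    for i in range(n_rows):          # each row wire carries one input
        for j in range(n_cols):      # each column wire sums currents
            net_conductance = g_plus[i][j] - g_minus[i][j]
            currents[j] += net_conductance * voltages[i]
    return currents


# A 3-input, 2-output layer: weights[i][j] connects input i to output j.
weights = [[1.0, -2.0],
           [0.0, 3.0],
           [-1.0, 1.0]]
inputs = [1.0, 2.0, 3.0]

g_plus, g_minus = weights_to_conductances(weights)
outputs = crossbar_matvec(g_plus, g_minus, inputs)
print(outputs)  # [-2.0, 7.0]
```

In physical hardware the two loops do not exist: all devices conduct at once, so the whole product is available in a single read step, without moving the weights between memory and a processor.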