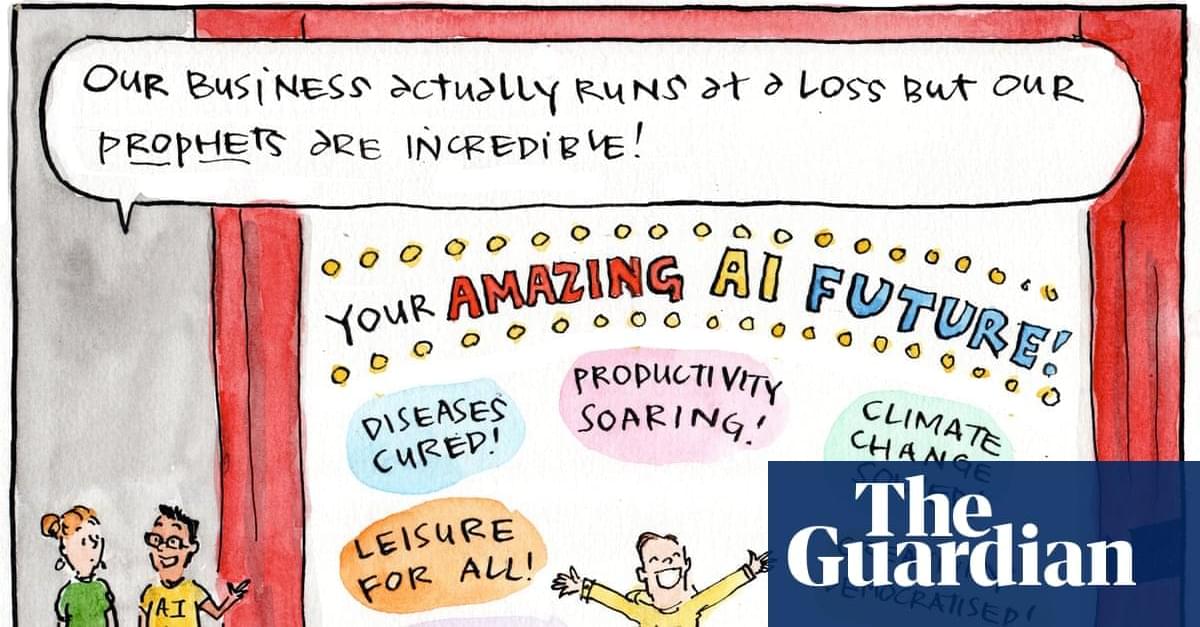

True AGI could be years—or decades—away. Or it could never happen. But the real question we should be asking about AGI isn’t ‘when.’

“There’s a widening schism between the technologists who feel the A.G.I. – a mantra for believers who see themselves on the cusp of the technology – and members of the general public who are skeptical about the hype and see A.I. as a nuisance in their daily lives,” they wrote.

It’s unclear if the industry will take heed of these warnings. Investors look to every quarterly earnings report for signs that each company’s billions in capex spending are somehow being justified, and executives are eager to give them hope. Boosting, boasting about and hyping the supposed promise and inevitability of AI is a big part of keeping at bay investor concerns about the extra $10bn each company adds to its spending projections every quarter. Mark Zuckerberg, for instance, recently said that in the future, if you’re not using AI glasses, you’ll be at a cognitive disadvantage, much like not wearing corrective lenses. That means tech firms such as Meta and Google will probably continue making the AI features they offer today an almost inescapable part of using their products, in a play to boost their training data and user numbers.

That said, the first big test of this AI reality check will come on Wednesday when chipmaker Nvidia – whose chips are one of the building blocks of most LLMs – will report its latest earnings. Analysts seem pretty optimistic, but after a shaky week for its stock, investor reactions to Nvidia’s earnings and any updates on spending will be a strong signal of whether they have a continued appetite for the AI hype machine.

UltraRAM blurs the line between permanent and random access memory. Quinas Technology and IQE plc have developed the technology for scalable production.

Quinas Technology, the company behind UltraRAM, has been actively working with chipmaker IQE plc over the past year to scale UltraRAM production to industrial levels. According to Blocks & Files, the cooperation was successful, and a memory that promises speed similar to DRAM, 4,000 times greater durability than NAND, and data retention for up to a thousand years is now on the verge of production.

UltraRAM manufacturing is based on the epitaxy process. Complex semiconductor layers are grown with great precision on a crystal substrate. Later, more conventional semiconductor manufacturing processes such as photolithography and etching are used to create the structures of memory chips.

However, if you’re rich and you don’t like the idea of a limit on computing, you can turn to futurism, longtermism, or “AI optimism,” depending on your favorite flavor. People in these camps believe in developing AI as fast as possible so we can (they claim) keep guardrails in place that will prevent AI from going rogue or becoming evil. (Today, people can’t seem to—or don’t want to—control whether or not their chatbots become racist, are “sensual” with children, or induce psychosis in the general population, but sure.)

The goal of these AI boosters is known as artificial general intelligence, or AGI. They theorize, or even hope for, an AI so powerful that it thinks like… well… a human mind whose ability is enhanced by a billion computers. If someone ever does develop an AGI that surpasses human intelligence, that moment is known as the AI singularity. (There are other, unrelated singularities in physics.) AI optimists want to accelerate the singularity and usher in this “godlike” AGI.

One of the key facts of computer logic is that, if you can slow the processes down enough and look at them in enough detail, you can track and predict every single thing that a program will do. Algorithms (and not the opaque AI kind) guide everything within a computer. Over the decades, experts have written the exact ways information can be sent, one bit (one minuscule electrical zap) at a time, through a central processing unit (CPU).
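
To make the idea of tracking every step concrete, here is a minimal Python sketch. The tiny three-instruction "program" and the `run` helper are hypothetical, invented purely for illustration. They are not how a real CPU is built, but they show the same principle: each step can be logged, and the same input always produces the same trace.

```python
def run(program, x):
    """Execute a tiny, made-up instruction list and record every step."""
    acc = x          # a single "register" holding the current value
    trace = []       # one entry per executed instruction
    for step, (op, arg) in enumerate(program):
        if op == "add":
            acc = acc + arg
        elif op == "mul":
            acc = acc * arg
        elif op == "xor":
            acc = acc ^ arg   # bitwise exclusive-or
        else:
            raise ValueError(f"unknown op: {op}")
        trace.append((step, op, arg, acc))
    return acc, trace


# Hypothetical program: add 3, multiply by 2, then flip some bits.
PROGRAM = [("add", 3), ("mul", 2), ("xor", 5)]

result, trace = run(PROGRAM, 4)
for entry in trace:
    print(entry)
print("result:", result)
```

Running it twice with the same input yields an identical trace, which is the sense in which an ordinary algorithm is fully predictable.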