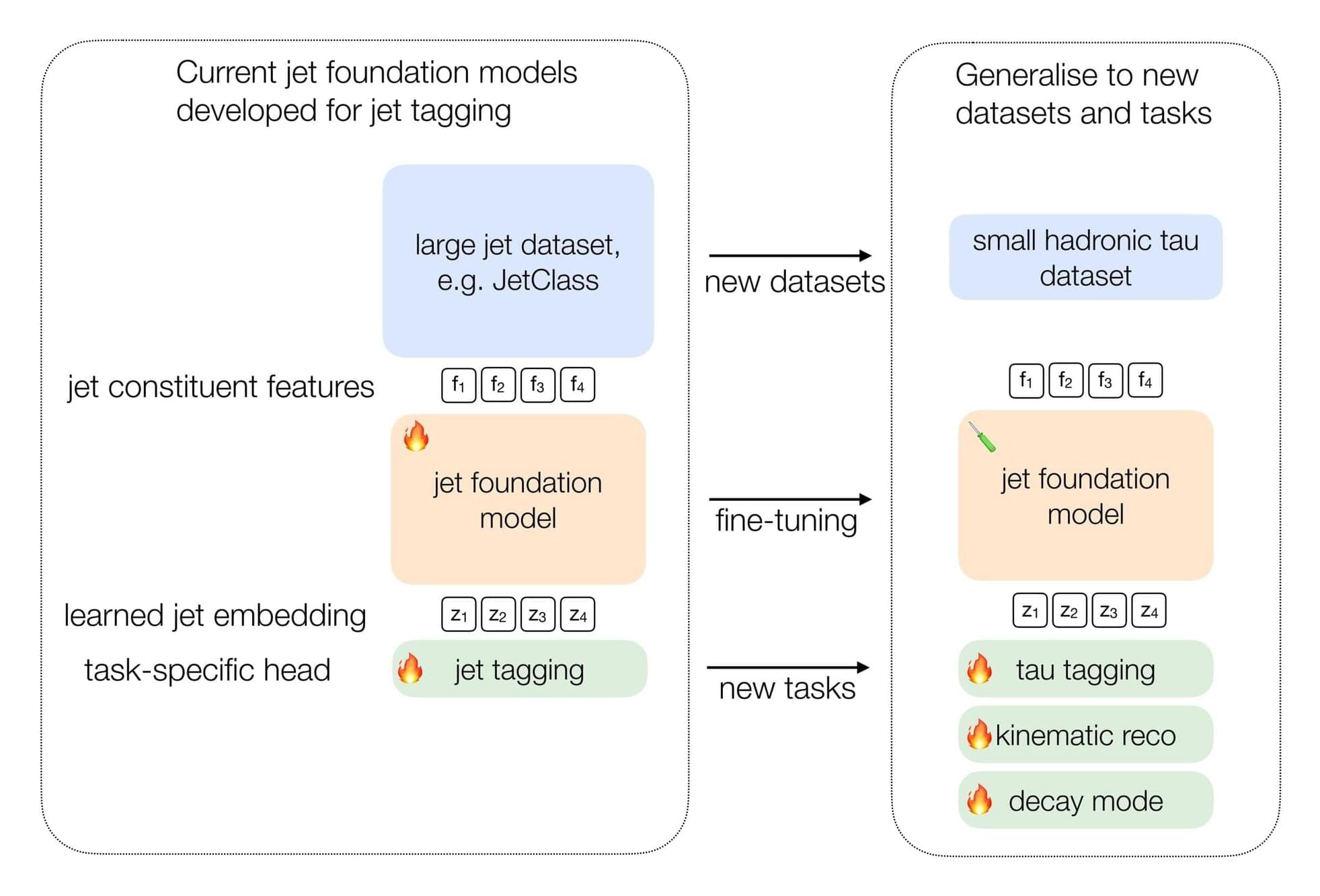

Simulating data in particle physics is computationally expensive, and the results are not perfectly accurate. To get around these limitations, researchers are now exploring foundation models: large AI models trained in a general, task-agnostic way on vast amounts of data.

Just as language models are pretrained on large corpora of internet text before being fine-tuned for specific tasks, these models can learn from large datasets of particle jets, even without labels.

After pretraining, they can be fine-tuned to solve specific problems using much less task-specific data than traditional approaches require.
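To make the pretrain-then-fine-tune idea concrete, here is a deliberately tiny sketch in plain Python. It is not how jet foundation models are actually built, and every name in it (`make_jets`, `pretrain_encoder`, `fine_tune_head`) is hypothetical. Assumptions: each "jet" is a made-up six-feature vector drawn from two hidden classes; the unsupervised pretraining stage is stood in for by principal component analysis (power iteration on unlabeled data); and the fine-tuning stage is reduced to fitting a small logistic-regression head on only 20 labeled jets while the learned encoder stays frozen (linear probing, a lightweight alternative to full fine-tuning).

```python
import math
import random

# Hypothetical toy setup: each "jet" is summarized by DIM numeric features.
DIM = 6
# Hidden direction along which the two (unlabeled) jet classes differ.
DIRECTION = [1 / math.sqrt(2), 1 / math.sqrt(2), 0.0, 0.0, 0.0, 0.0]


def make_jets(n, rng):
    """Generate n toy jets from two balanced classes separated along DIRECTION."""
    xs, ys = [], []
    for i in range(n):
        y = i % 2  # alternate classes so every sample is balanced
        shift = 3.0 if y == 1 else -3.0
        xs.append([shift * d + rng.gauss(0.0, 1.0) for d in DIRECTION])
        ys.append(y)
    return xs, ys


def pretrain_encoder(xs, iters=100):
    """'Pretraining' stand-in: find the top principal component of unlabeled
    data by power iteration on the sample covariance matrix."""
    n = len(xs)
    mean = [sum(x[j] for x in xs) / n for j in range(DIM)]
    centered = [[x[j] - mean[j] for j in range(DIM)] for x in xs]
    cov = [[sum(c[i] * c[j] for c in centered) / n for j in range(DIM)]
           for i in range(DIM)]
    v = [1.0] * DIM
    for _ in range(iters):
        w = [sum(cov[i][j] * v[j] for j in range(DIM)) for i in range(DIM)]
        norm = math.sqrt(sum(a * a for a in w))
        v = [a / norm for a in w]
    return mean, v


def encode(x, mean, v):
    """Frozen encoder: project a centered jet onto the learned direction."""
    return sum((x[j] - mean[j]) * v[j] for j in range(DIM))


def sigmoid(t):
    # Numerically stable logistic function.
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def fine_tune_head(zs, ys, lr=0.1, epochs=500):
    """Fit a 1-D logistic-regression head (weight a, bias b) by gradient descent."""
    a, b = 0.0, 0.0
    n = len(zs)
    for _ in range(epochs):
        grad_a = grad_b = 0.0
        for z, y in zip(zs, ys):
            err = sigmoid(a * z + b) - y  # gradient of cross-entropy w.r.t. logit
            grad_a += err * z
            grad_b += err
        a -= lr * grad_a / n
        b -= lr * grad_b / n
    return a, b


rng = random.Random(0)

# 1) Pretraining: plenty of jets, labels thrown away.
unlabeled, _ = make_jets(2000, rng)
mean, v = pretrain_encoder(unlabeled)

# 2) Fine-tuning: only 20 labeled jets for the downstream task.
few_x, few_y = make_jets(20, rng)
a, b = fine_tune_head([encode(x, mean, v) for x in few_x], few_y)

# 3) Evaluate on fresh labeled jets.
test_x, test_y = make_jets(1000, rng)
correct = sum((sigmoid(a * encode(x, mean, v) + b) > 0.5) == (y == 1)
              for x, y in zip(test_x, test_y))
accuracy = correct / len(test_y)
print(f"test accuracy with 20 labels: {accuracy:.3f}")
```

Because the representation was learned without any labels, only two head parameters have to be fit from the labeled jets. Real jet foundation models replace PCA with large neural networks trained on self-supervised objectives, and they often update the encoder weights during fine-tuning as well.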