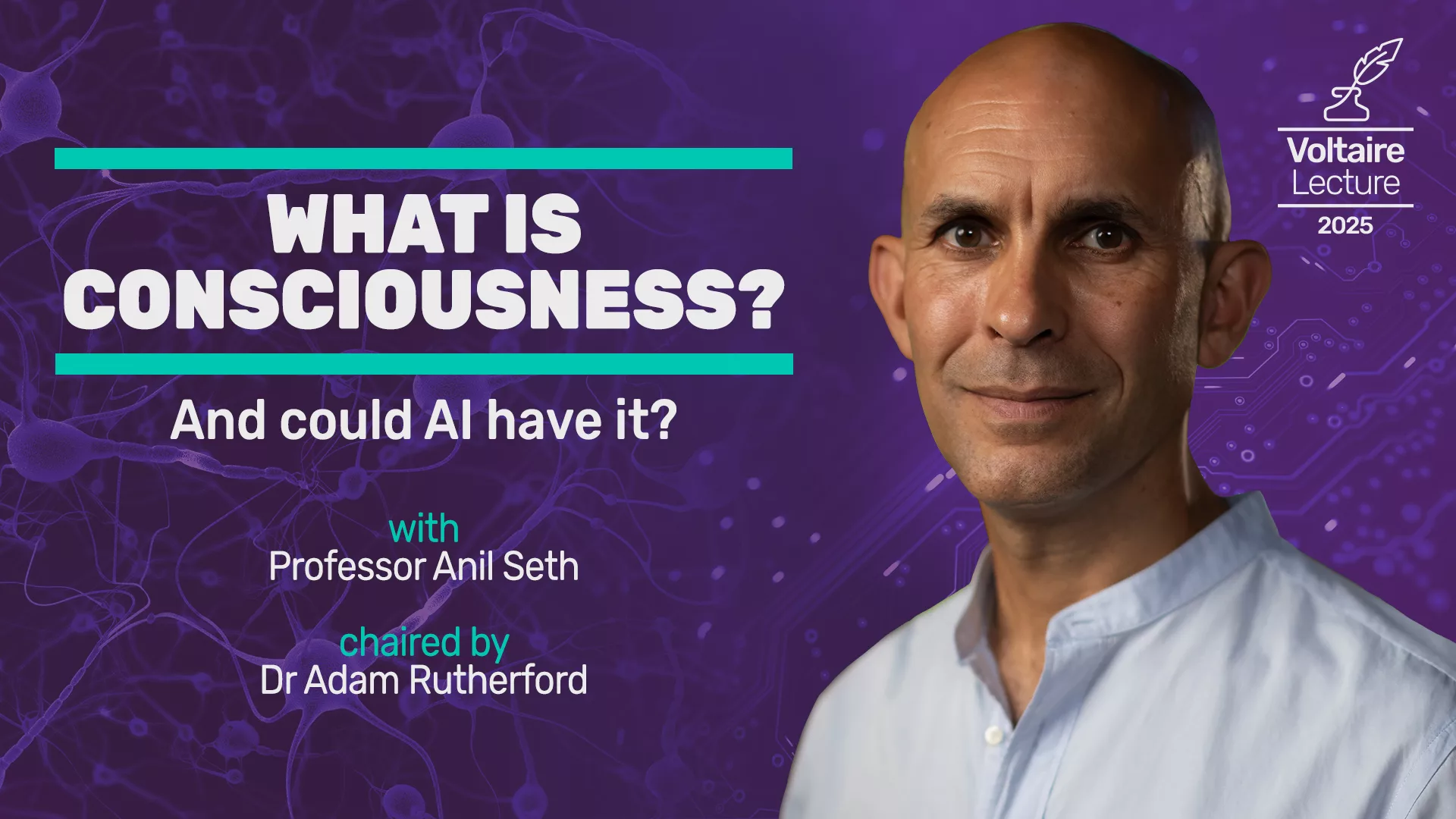

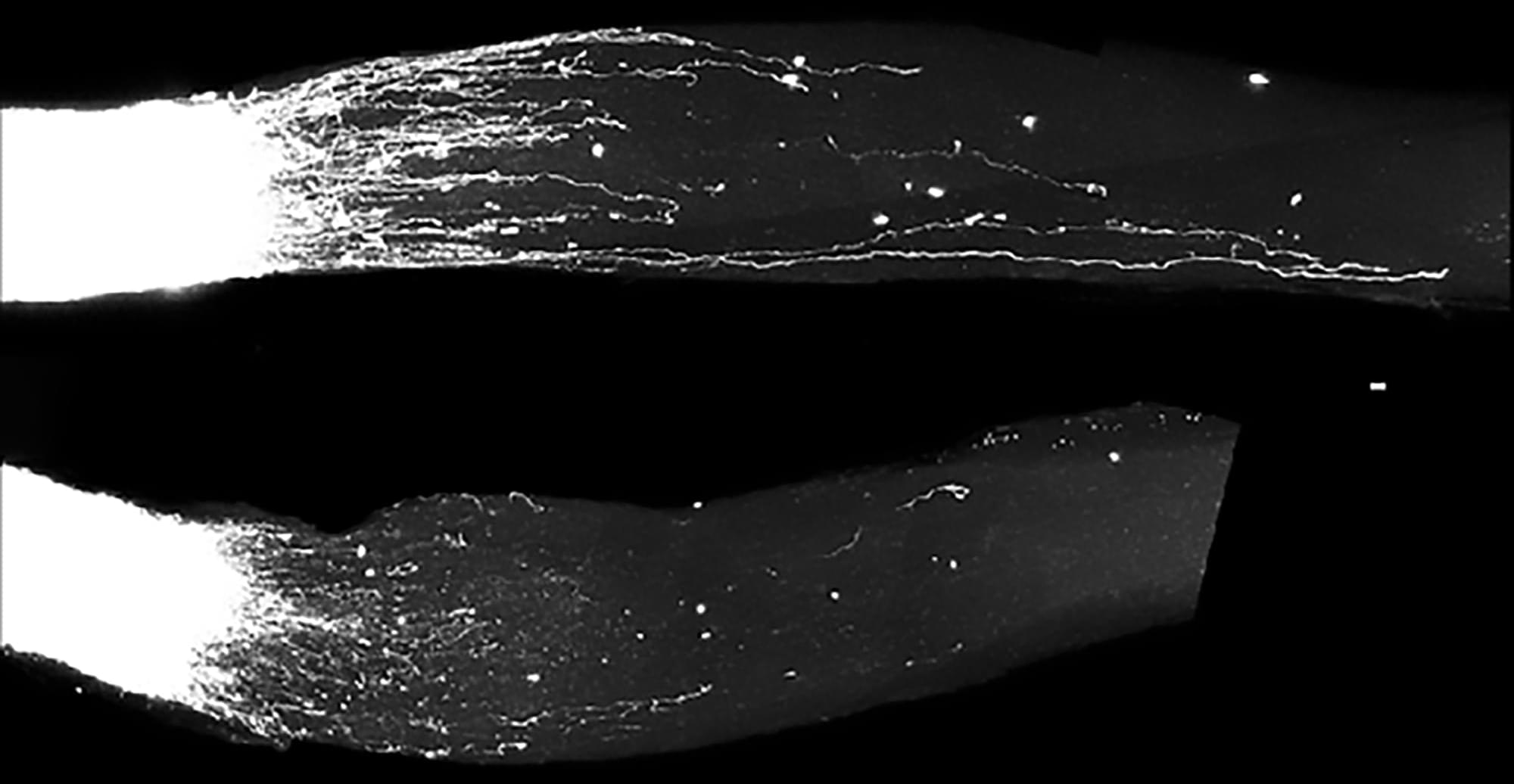

In the Voltaire Lecture 2025, Professor Anil Seth will set out an approach to understanding consciousness which, rather than trying to solve the mystery head-on, tries to dissolve it by building explanatory bridges from physics and biology to experience and function. In this view, conscious experiences of the world around us, and of being a ‘self’ within that world, can be understood in terms of perceptual predictions that are deeply rooted in a fundamental biological imperative – the desire to stay alive.

At this event, Professor Seth will explore how widely consciousness may be distributed beyond human beings, with a particular focus on AI. He will consider whether consciousness might depend not just on ‘information processing’, but on properties unique to living, biological organisms, before ending with an exploration of the ethical implications of an artificial intelligence that either is actually conscious – or can convincingly pretend to be.