Cells will ramp up gene expression in response to physical forces alone, a new study finds. Gene activation, the first step of protein production, starts less than one millisecond after a cell is stretched—hundreds of times faster than chemical signals can travel, the researchers report.

The scientists tested forces that are biologically relevant—equivalent to those exerted on human cells by breathing, exercising or vocalizing. They report their findings in the journal Science Advances.

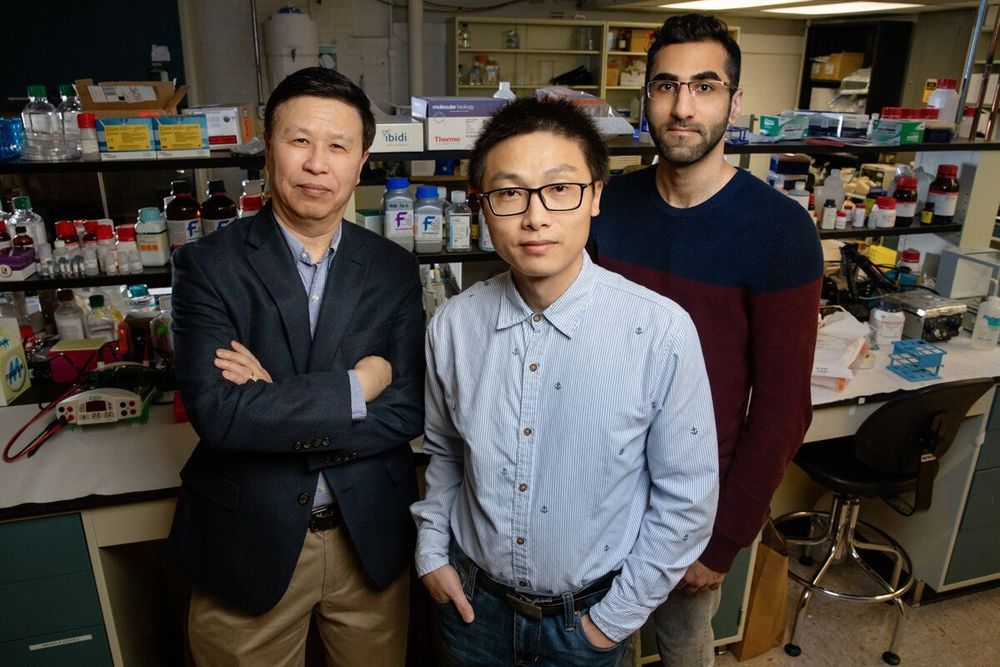

“We found that force can activate genes without intermediates, without enzymes or signaling molecules in the cytoplasm,” said University of Illinois mechanical science and engineering professor Ning Wang, who led the research. “We also discovered why some genes can be activated by force and some cannot.”
