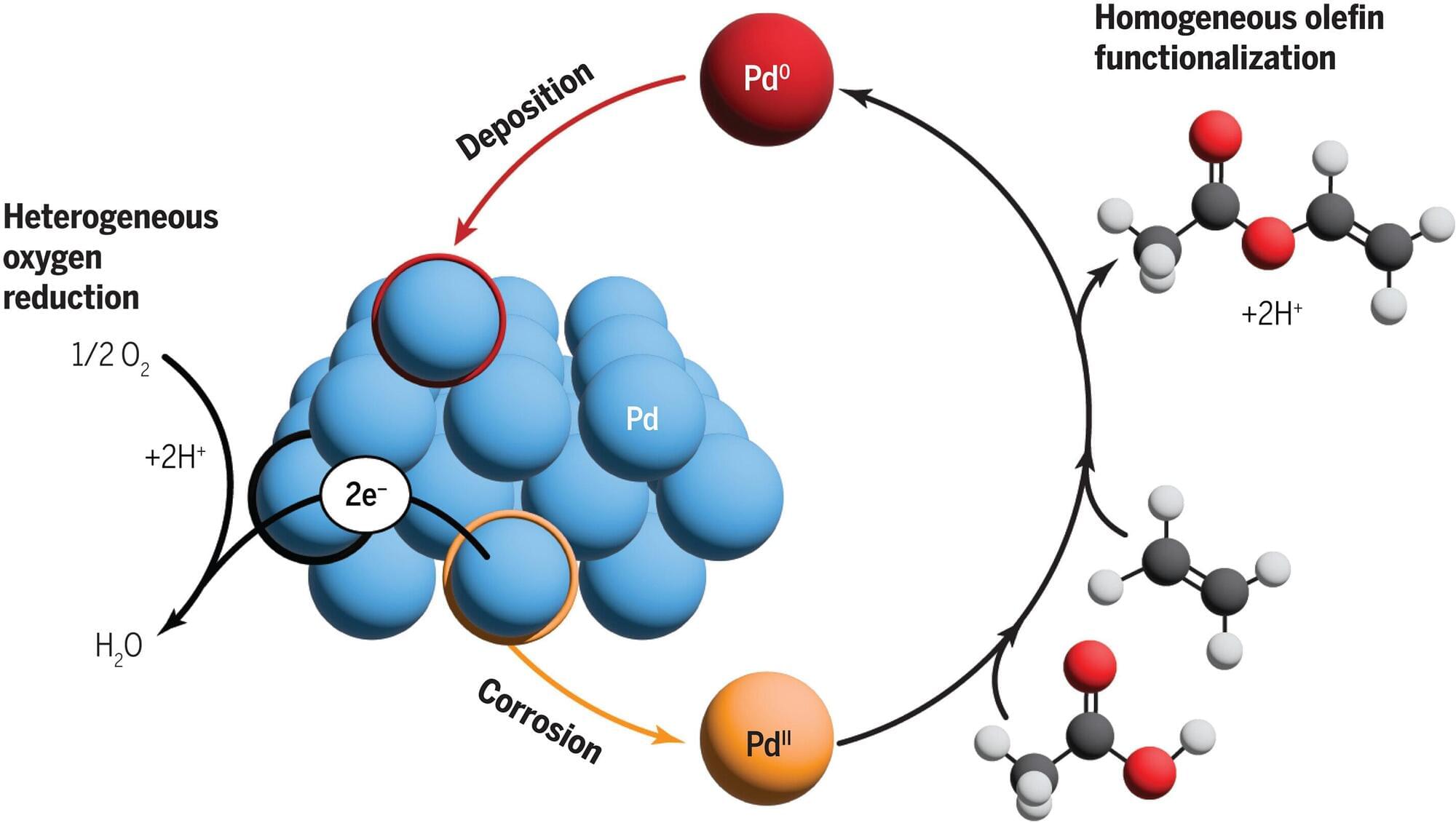

The process of catalysis—in which a material speeds up a chemical reaction—is crucial to the production of many of the chemicals used in our everyday lives. But even though these catalytic processes are widespread, researchers often lack a clear understanding of exactly how they work.

A new analysis by researchers at MIT has shown that an important industrial synthesis process, the production of vinyl acetate, requires a catalyst to take two different forms, cycling back and forth between them as the chemical process unfolds.

Previously, it had been thought that only one of the two forms was needed. The new findings are published today in the journal Science, in a paper by MIT graduate students Deiaa Harraz and Kunal Lodaya, Bryan Tang, Ph.D., and MIT professor of chemistry and chemical engineering Yogesh Surendranath.