The tech giant poached several top Google researchers to help build a powerful AI tool that can diagnose patients and potentially cut health care costs.

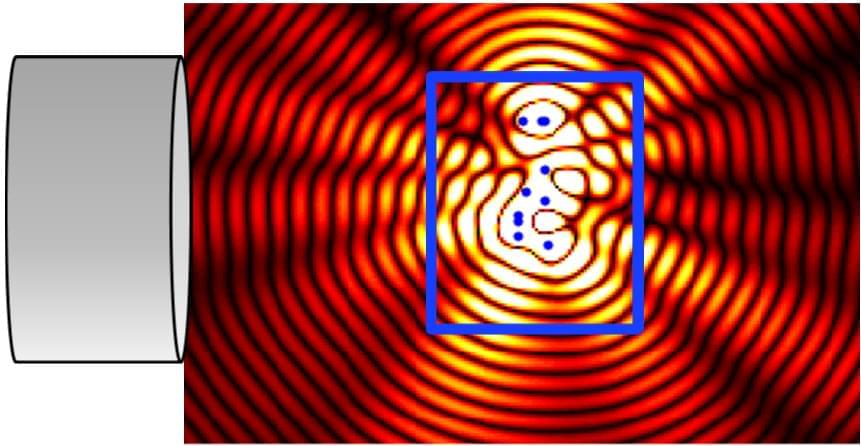

Researchers at Northeastern University have discovered how to change the electronic state of matter on demand, a breakthrough that could make electronics 1,000 times faster and more efficient.

Because a material's electronic state can be switched from insulating to conducting and back again, the discovery opens the door to replacing silicon components in electronics with far smaller and faster quantum materials.

“Processors work in gigahertz right now,” said Alberto de la Torre, assistant professor of physics and lead author of the research. “The speed of change that this would enable would allow you to go to terahertz.”
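The jump from gigahertz to terahertz matches the "1,000 times" figure above. As a rough illustration, assuming a switching rate that goes from 1 GHz to 1 THz, the ratio is simply the ratio of the SI prefixes:

$$
\frac{1\ \text{THz}}{1\ \text{GHz}} = \frac{10^{12}\ \text{Hz}}{10^{9}\ \text{Hz}} = 10^{3} = 1{,}000
$$

Equivalently, each cycle would shrink from about 1 nanosecond to about 1 picosecond.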

In radiation therapy, precision can save lives. Oncologists must carefully map the size and location of a tumor before delivering high-dose radiation to destroy cancer cells while sparing healthy tissue. But this process, called tumor segmentation, is still done manually, takes time, varies between doctors—and can lead to critical tumor areas being overlooked.

Now, according to a large new study, a team of Northwestern Medicine scientists has developed an AI tool called iSeg that not only matches doctors in accurately outlining lung tumors on CT scans but also identifies areas that some doctors may miss.

Unlike earlier AI tools that focused on static images, iSeg is the first 3D deep learning tool shown to segment tumors as they move with each breath—a critical factor in planning radiation treatment, which half of all cancer patients in the U.S. receive during their illness.

Put simply, the researchers found that the more people use AI models, the more they tend to adopt those models' favorite words in their own speech.

TechRadar’s Eric Hal Schwartz made a good point, though: other widely popular, technology-driven platforms have also left their mark on everyday spoken English, such as the habit of saying “hashtag” before a word or phrase to hint at… well, almost anything.

Thank Twitter for that. When people did it, it was almost always tongue-in-cheek and self-aware: the nod to its own cringiness signaled that the speaker was in on the joke.

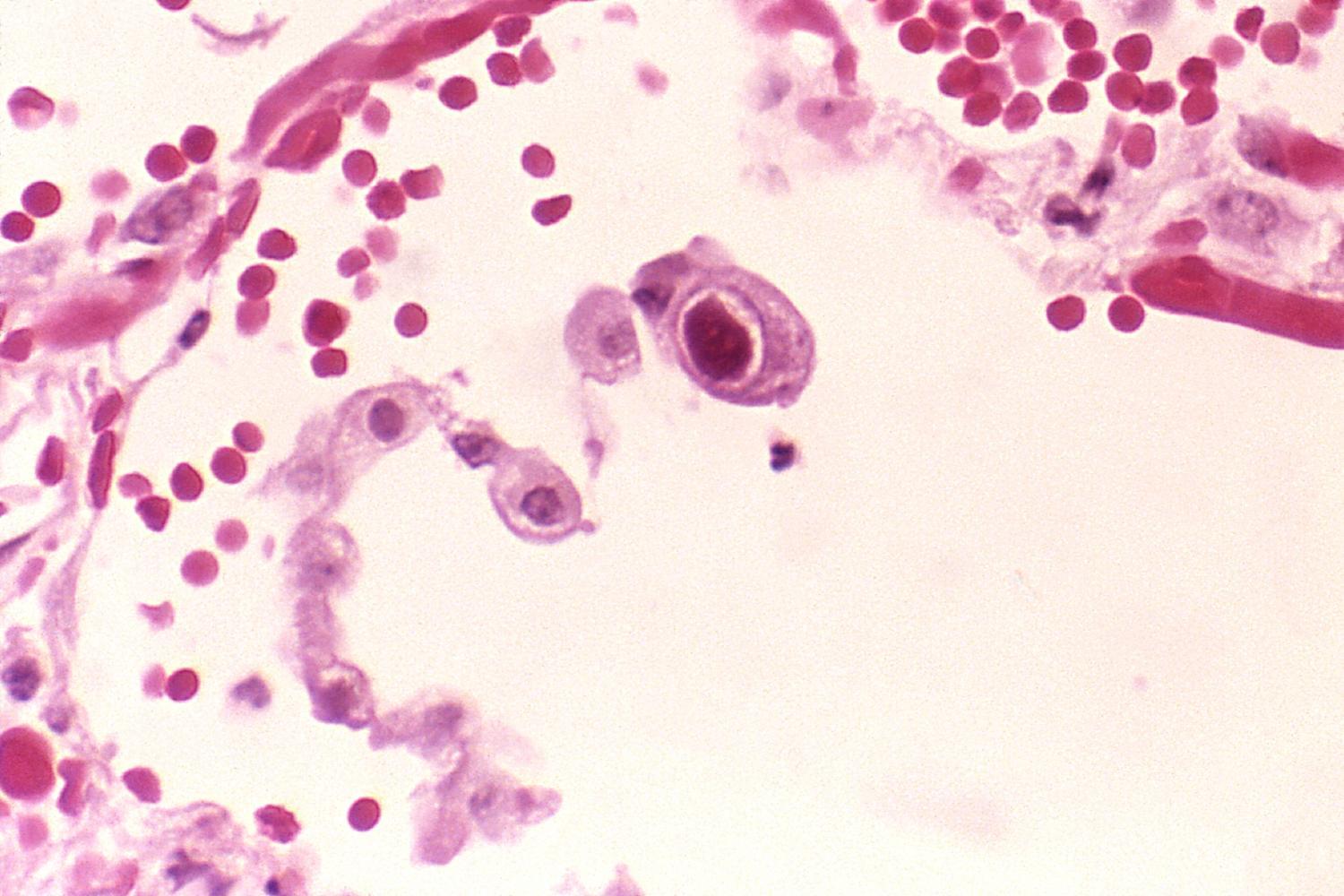

New research from the University of Pittsburgh School of Medicine and La Jolla Institute for Immunology, published today in Nature Microbiology, reveals an opportunity for developing a therapy against cytomegalovirus (CMV), the leading infectious cause of birth defects in the United States.

Researchers discovered a previously unappreciated mechanism by which CMV, a herpes virus that infects the majority of the world’s adult population, enters cells that line the blood vessels and contributes to vascular disease. In addition to using molecular machinery that is shared by all herpes viruses, CMV employs another molecular “key” that allows the virus to sneak through a side door and evade the body’s natural immune defenses.

The finding might explain why efforts to develop prophylactic treatments against CMV have so far been unsuccessful. This research also highlights a new potential avenue for the development of future antiviral drugs and suggests that other viruses of the herpes family, such as Epstein-Barr virus and varicella-zoster virus (which causes chickenpox), could use similar molecular structures to spread from one infected cell to the next while avoiding immune detection.

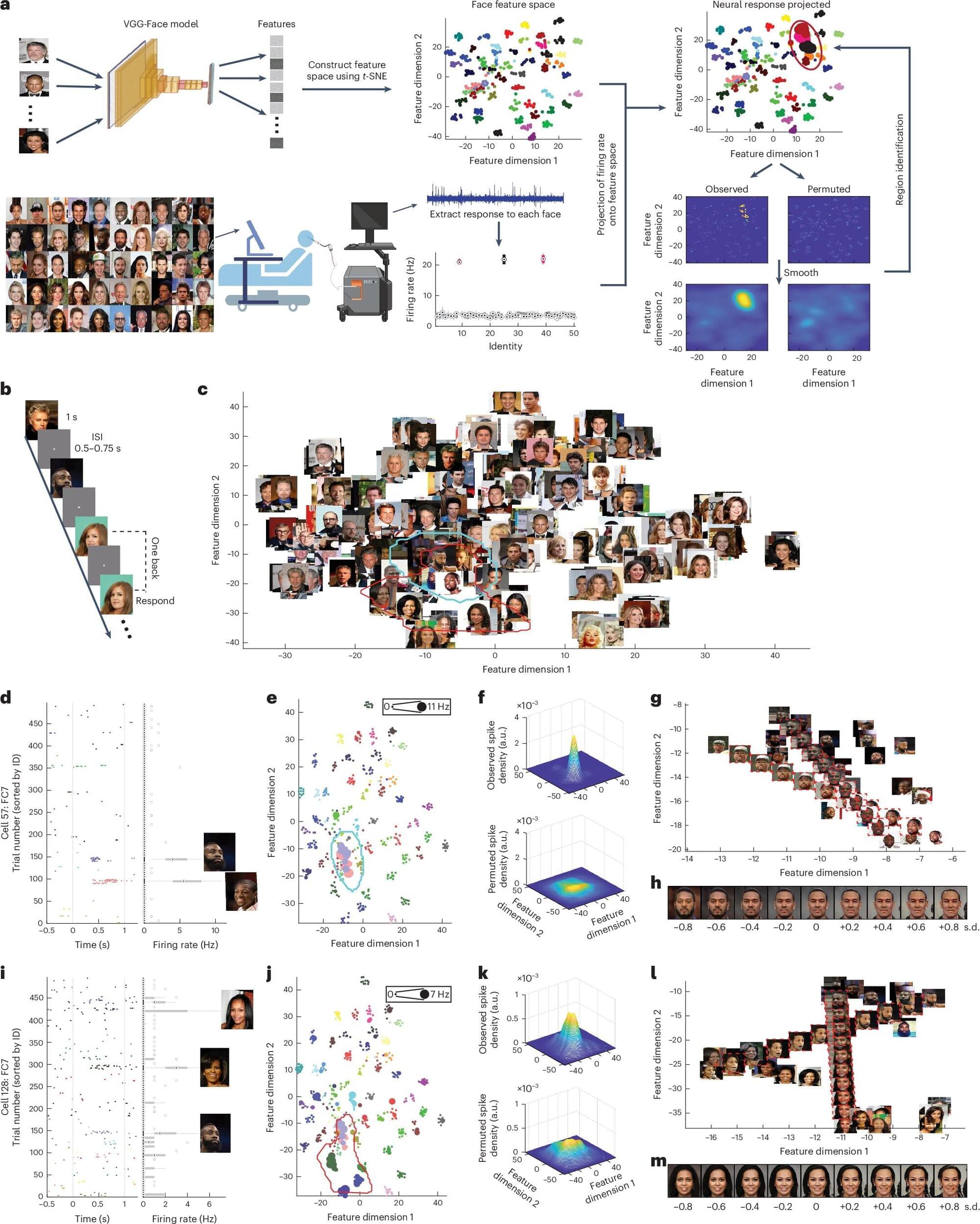

Humans are innately capable of recognizing other people they have seen before. This capability ultimately allows them to build meaningful social connections, develop their sense of identity, better cooperate with others, and identify individuals who could pose a risk to their safety.

Several past studies rooted in neuroscience, psychology and behavioral science have tried to shed light on the neural processes underlying the ability to encode other people’s identities. Most findings collected so far suggest that the identity of others is encoded by neurons in the amygdala and hippocampus, two brain regions known to support the processing of emotions and the encoding of memories, respectively.

Drawing on this earlier evidence, researchers concluded that neurons in these two brain regions respond in specific ways when we meet someone we know, regardless of their visual features (i.e., how their face looks). A recent paper published in Nature Human Behaviour, however, suggests that this might not be the case, and that individual neurons in the amygdala encode facial features, ultimately supporting the identification of others.