Mastering meat production in this way will lead to advances in medical science and treatment.

“Cultured meat also ultimately offers the opportunity to create meat products that are more well-defined, tunable, and potentially healthier than meat products today, which are constrained by the biological limitations of the domestic animals from which they are derived.”

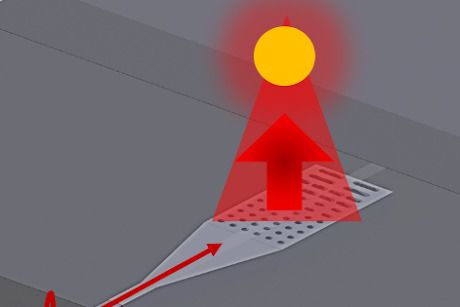

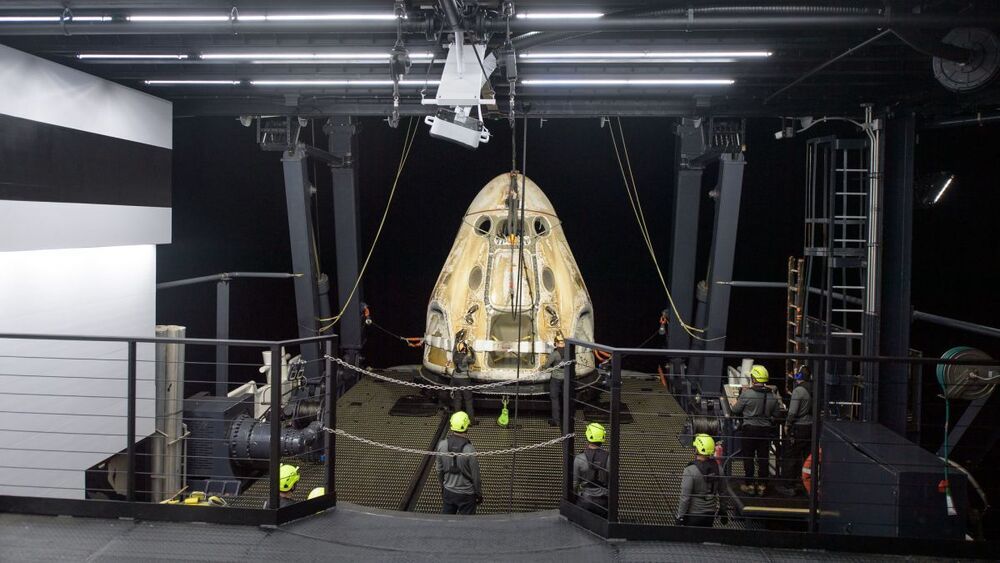

Owing to advances in industrial-scale cell culture processes, the production of cultured meat has been largely standardized. Typically, stem cells are first seeded into extracellular matrix scaffolds, usually made of edible biomaterials such as collagen and chitin. To support cellular metabolism, culture media containing nutrients such as glucose and sera are then added to the bioreactor, where continual mechanical motion helps nutrients and oxygen diffuse into the cells and metabolic waste products diffuse out. After about 2–8 weeks, the cells have grown into tissue layers that can be harvested and packaged.

Several key challenges remain in producing cultured meat, including access to (proprietary) cell lines, high raw material costs, reliance on animal-sourced nutrients, and limited manufacturing scale. Even so, immense progress has been made over the last decade. Here, we discuss the challenges and solutions involved in bringing cultured meat from the lab bench to the dining table.